The best quotes from ai-PULSE 2023

The first edition of AI conference ai-PULSE was one to be remembered. Here’s a first sweep of the most headline-worthy quotes!

Artificial intelligence took major leaps forward at Station F on November 17, setting trends which are bound to leave their mark on 2024 and beyond. Let’s discover a few…

“Open source” was probably the most-heard term throughout the day. The AI leaders gathered at ai-PULSE all expressed a marked desire to go against the opaque ways of companies like OpenAI, Google or Microsoft.

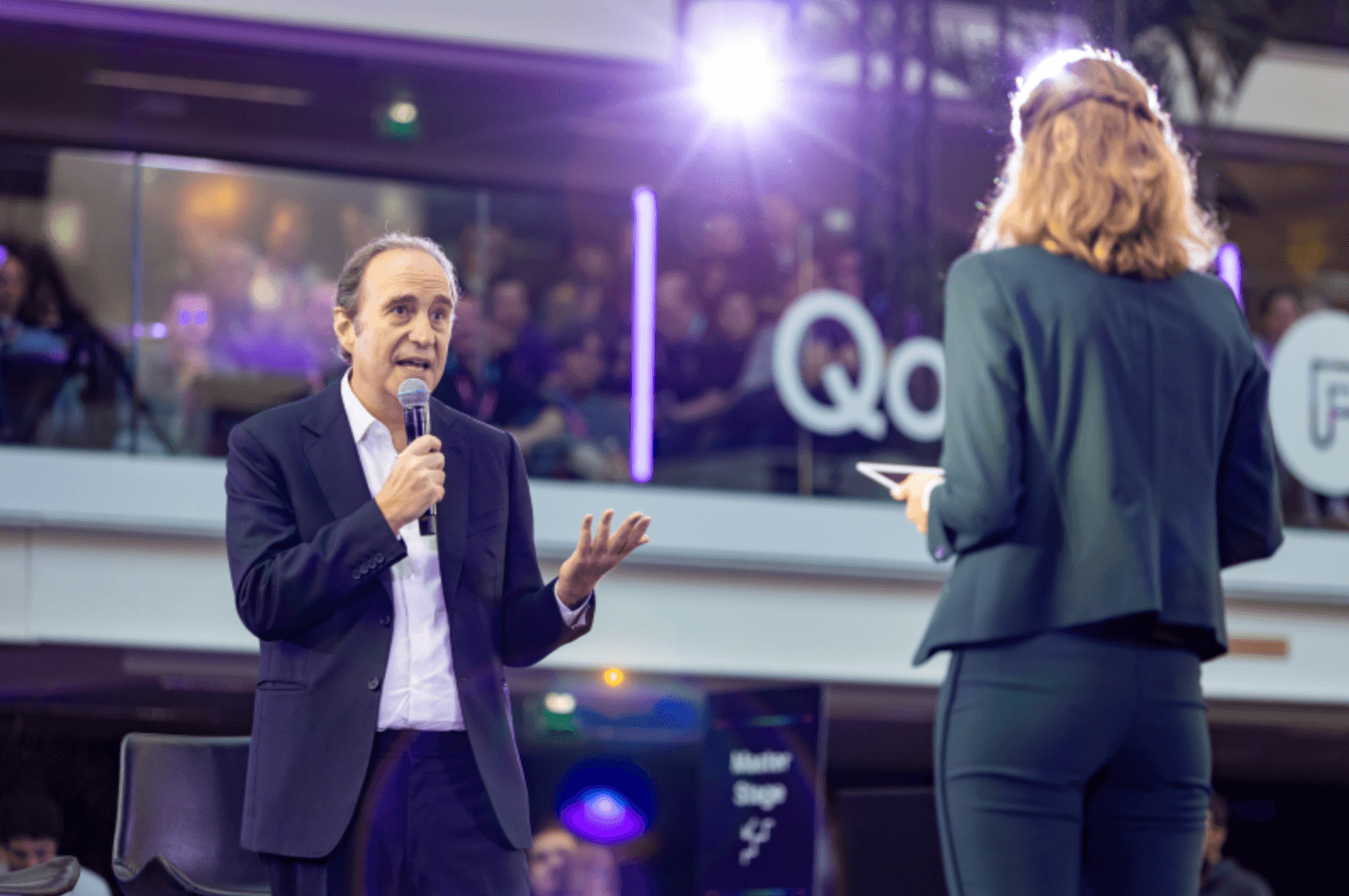

“We’re publishing all this in open science,” said iliad Group Founder Xavier Niel at the press conference launching new AI lab Kyutai. “That’s something GAFAM are less and less tolerant of. And yet scientists need to publish”.

This also implies that anyone can use the results of Kyutai’s work… “even AWS!” said Niel. “It’s about moving the world forward in a positive way. Do we want our children to use things not created in Europe? No. This is how we create things that meet our needs better.”

The one GAFAM exception to this rule is, of course, Meta (the “F” stands for Facebook), whose Llama 2 model, presented at ai-PULSE by Meta Research Scientist Thomas Scialom, is resolutely open source.

“I’m a big fan of open source”, he said. “Without it, how would AI have made the advances it has in recent years? Open source’s ability to pull in the engagement of every startup (and other company types) keeps the ecosystem innovative… and safe, and responsible. It allows 100,000s of researchers to engage with that innovation.”

He recognized the role Hugging Face has played in the ecosystem, and how countless community contributions have made models more innovative, better guardrailed, safer, and more responsible.

Given the considerable resources required by many generative AI models, the companies doing the most with the fewest resources are bound to come out on top. Arthur Mensch of Mistral AI made this point very clearly in his talk:

“With Mistral AI’s ‘Sliding Window Attention’ model, there are four times less tokens in memory, reducing memory pressure and therefore saving money. Currently, too much memory is used by generative AI”.

This is notably why the company’s latest model, Mistral-7B, can run locally on a (recent) smartphone, proving massive resources aren’t always necessary for AI.
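To make the memory claim concrete, here is a minimal sketch of the idea behind a sliding attention window: the cache keeps only the most recent tokens instead of the whole sequence. The `RollingCache` class, the window size, and the sequence length are all illustrative assumptions chosen to reproduce a fourfold reduction; they are not figures or code from the talk, and the cache stores token positions rather than real key/value tensors.

```python
from collections import deque


class RollingCache:
    """Hypothetical helper: keeps only the last `window` token positions."""

    def __init__(self, window: int) -> None:
        self.window = window
        # A deque with maxlen silently evicts the oldest entry when full.
        self.entries = deque(maxlen=window)

    def append(self, position: int) -> None:
        self.entries.append(position)

    def __len__(self) -> int:
        return len(self.entries)


# Illustrative numbers only (assumptions, not quoted from the talk).
SEQUENCE_LENGTH = 16_384
WINDOW = 4_096

full_cache = list(range(SEQUENCE_LENGTH))  # a full cache keeps every token

rolling_cache = RollingCache(WINDOW)
for position in range(SEQUENCE_LENGTH):
    rolling_cache.append(position)

# Ratio of tokens held in memory: full cache vs. sliding window.
reduction = len(full_cache) / len(rolling_cache)
print(f"Tokens in full cache:    {len(full_cache)}")
print(f"Tokens in rolling cache: {len(rolling_cache)}")
print(f"Reduction factor:        {reduction:.1f}x")
```

With these assumed values, the rolling cache holds a quarter of the tokens. The saving grows with sequence length, since the window stays fixed while a full cache keeps growing.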

So it’s a safe bet that smaller, more efficient AI models will win in 2024, particularly in the language model domain. Smartphones and edge devices will need models that can run with limited resources, and such models will in turn open up brand new possibilities for AI tech, in places where we hadn’t imagined it cropping up before.

We also learned at ai-PULSE that, contrary to received wisdom to date, GPUs aren’t inevitable for inference: CPUs can quite often do the trick just as well. Jeff Wittich, CPO of Ampere Computing, said that CPUs - especially those designed for cloud-native applications such as the Ampere Altra family of ARM processors - can be highly effective for AI inferencing, offering a more power-efficient and cost-effective solution compared to other hardware like GPUs.

This trend suggests a future where CPU-based inferencing becomes more prevalent, particularly for applications requiring scalable, economical, and environmentally sustainable compute solutions.

Europe’s AI Act, billed as the first and strictest set of regulations on artificial intelligence, reached provisional political agreement in early December, just a few weeks after ai-PULSE. But at Station F, the debate remained very much open.

Killian Vermersch, CEO of Golem.ai, addressed the challenges of deploying AI in regulated and sensitive environments, emphasizing the need for AI systems to be transparent, explainable, and compliant with legal frameworks. This suggests a future where AI solutions are designed with a heightened focus on ethical considerations and legal compliance, particularly in fields like healthcare and national security.

Even NVIDIA’s Jensen Huang predicted that the “second wave of AI” will come with the recognition that every region and, in fact, “every country will need to build their sovereign AI, that reflects their own language and culture.” Does this imply that such sovereignty would have to be guaranteed by national law?

“We are more in an innovation stage than a regulation stage”, said Niel at the Kyutai press conference. “Regulation limits innovation. It’s too early for it today. The regulation that’s coming in the US comes from the big groups [current AI leaders]; it will stifle innovation. Europe is 12 months behind the US. We need to innovate first, and regulate afterwards, if it’s required. The French government has understood this. And I think Thierry Breton [the Commissioner behind the EU’s AI Act] is on the right track.”

French digital minister Jean-Noël Barrot was indeed totally aligned with Niel, affirming “we’ll defend the idea that over-regulating AI is a bad idea.”

Though AI creators still seem to have totally free rein for now, this may end if and when the EU’s first sanctions fall. Meanwhile, the ideal balance between innovation and regulation remains to be found. “Having testing systems for ML models which are easy to integrate by data scientists are key to making the regulation compliance process as easy as possible,” said ai-PULSE exhibitor and Giskard.AI CEO Alexandre Combessie, “so that regulation is possible without slowing down innovation, but also respecting the rights of citizens.”

To be continued!

