Discover Kubernetes Kapsule to Orchestrate your Containers

Kubernetes Kapsule is a free service: only the resources you allocate to your cluster are billed, with no extra cost.

The following features are used extensively by our advanced users. We thought you might be interested in going further and optimizing your use of Kubernetes Kapsule. All features described here are available via our REST API and via the Scaleway CLI.

Feature Gates allow you to enable some features that are still considered Alpha in Kubernetes. They provide users with the possibility of testing new Kubernetes features without waiting for them to graduate to Beta.

Admission Controllers control every request that reaches the API-Server, and grant or deny access to the cluster workers. They can limit creation, deletion, modification, or connection rights. By setting them up on your cluster, you define the behaviour of every Kubernetes object that will run on it, and the security strategies to apply, regardless of pods or containers.

For instance, you can apply the AlwaysPullImages controller to ensure that a deprecated local version of your container images is never used for your Kubernetes objects, and that images are always pulled from their registry.

There are many admission controllers available, and they all allow you to restrict usage and secure your Kubernetes environment.

You can enable a Feature Gate and an Admission Controller with the following CLI command:

    scw k8s cluster update xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx \
      feature-gates.0=EphemeralContainers admission-plugins.0=AlwaysPullImages

You can see the list of Admission Controllers and Feature Gates available on your current version by calling the version API or with the CLI:

    scw k8s version get <version>

Have a look at the complete Kubernetes documentation about Admission Controllers and at the complete Kubernetes documentation about Feature Gates.

If the Feature Gate or Admission Controller you need is not available, talk to our engineers about enabling it on the #k8s channel in our Slack Community.

OpenID Connect (OIDC) is an identity layer built on top of OAuth 2.0, supported by multiple providers and services, that allows the same accounts and login details to be reused across them. The Kubernetes documentation about authentication and OIDC explains how this authentication method works. Supporting it in a managed Kubernetes engine simply means that a JWT generated by a supported provider (or service) can be used to log in, manage roles, and access your Kubernetes cluster via kubectl.

Google, Azure, Salesforce, and GitHub are examples of services and providers that support this feature (the list is not exhaustive). You can create a group in these services, with certain restricted access, and use the same login credentials to access a Kubernetes cluster.

To configure this feature, you can use the following command:

    scw k8s cluster update xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx \
      open-id-connect-config.client-id=<client-id> \
      open-id-connect-config.issuer-url=<issuer-url>

In order to get a more user-friendly flow, you can check out some Open Source projects like kubelogin or k8s-oidc-helper.
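Once OIDC login works, what a user can actually do is still decided by Kubernetes RBAC. As a minimal sketch, assuming your provider issues a groups claim that the cluster is configured to read, and that a group called developers exists there (both are assumptions for illustration, as is the binding name), a manifest along these lines would give that group read-only access through the built-in view ClusterRole:

```yaml
# Hypothetical example: read-only access for members of an OIDC group.
# "developers" and "oidc-developers-view" are illustrative names only.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: oidc-developers-view
subjects:
  - kind: Group
    name: developers              # value of the groups claim in the JWT
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: view                      # built-in read-only ClusterRole
  apiGroup: rbac.authorization.k8s.io
```

If a groups prefix is configured on the cluster, the subject name would need to carry that prefix as well.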

The Scaleway CSI plugin supports at-rest encryption of volumes with Cryptsetup/LUKS, which makes your data storage more secure.

We have dedicated documentation for the encryption of persistent volumes within a Scaleway Kubernetes Kapsule cluster on Scaleway’s CSI GitHub repository, where you can find out how to use this feature. Please note, however, that encrypted volumes cannot be resized.

Kubernetes Kapsule supports the XFS file system on block storage. You can define a Storage Class to use it, as described below:

    allowVolumeExpansion: true
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: scw-bssd-xfs
    provisioner: csi.scaleway.com
    reclaimPolicy: Delete
    volumeBindingMode: Immediate
    parameters:
      csi.storage.k8s.io/fstype: xfs

Although Kubernetes Kapsule only officially supports the Docker runtime, you can also use containerd and cri-o.

Note that containerd ships with gVisor and Kata Containers for untrusted workloads.

Currently, the default runtime is Docker. However, we plan to switch the default to containerd, both to prepare for the deprecation of dockershim and to benefit from containerd's simplicity and lightweight implementation.

To redefine the runtime at pool level with the Scaleway CLI, you can use this command:

    scw k8s pool create cluster-id=xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx \
      size=1 name=test node-type=DEV1-M container-runtime=containerd

You will also need a runtime class:

    apiVersion: node.k8s.io/v1
    kind: RuntimeClass
    metadata:
      name: kata
    handler: kata

or

    apiVersion: node.k8s.io/v1
    kind: RuntimeClass
    metadata:
      name: gvisor
    handler: runsc

At pod level, you then select the runtime by referencing the runtime class name in the pod spec, for example runtimeClassName: kata.
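To show where that field sits, here is a minimal sketch of a pod that runs under the kata runtime class. The pod name, container name, and image are placeholders chosen for illustration; only runtimeClassName relates to the runtime classes defined in this section.

```yaml
# Hypothetical pod: name and image are placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: untrusted-workload
spec:
  runtimeClassName: kata        # must match metadata.name of the RuntimeClass
  containers:
    - name: app
      image: nginx:1.25         # placeholder image
```

The pod can only start on nodes whose container runtime provides the corresponding handler, so it should land on a pool created with container-runtime=containerd.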

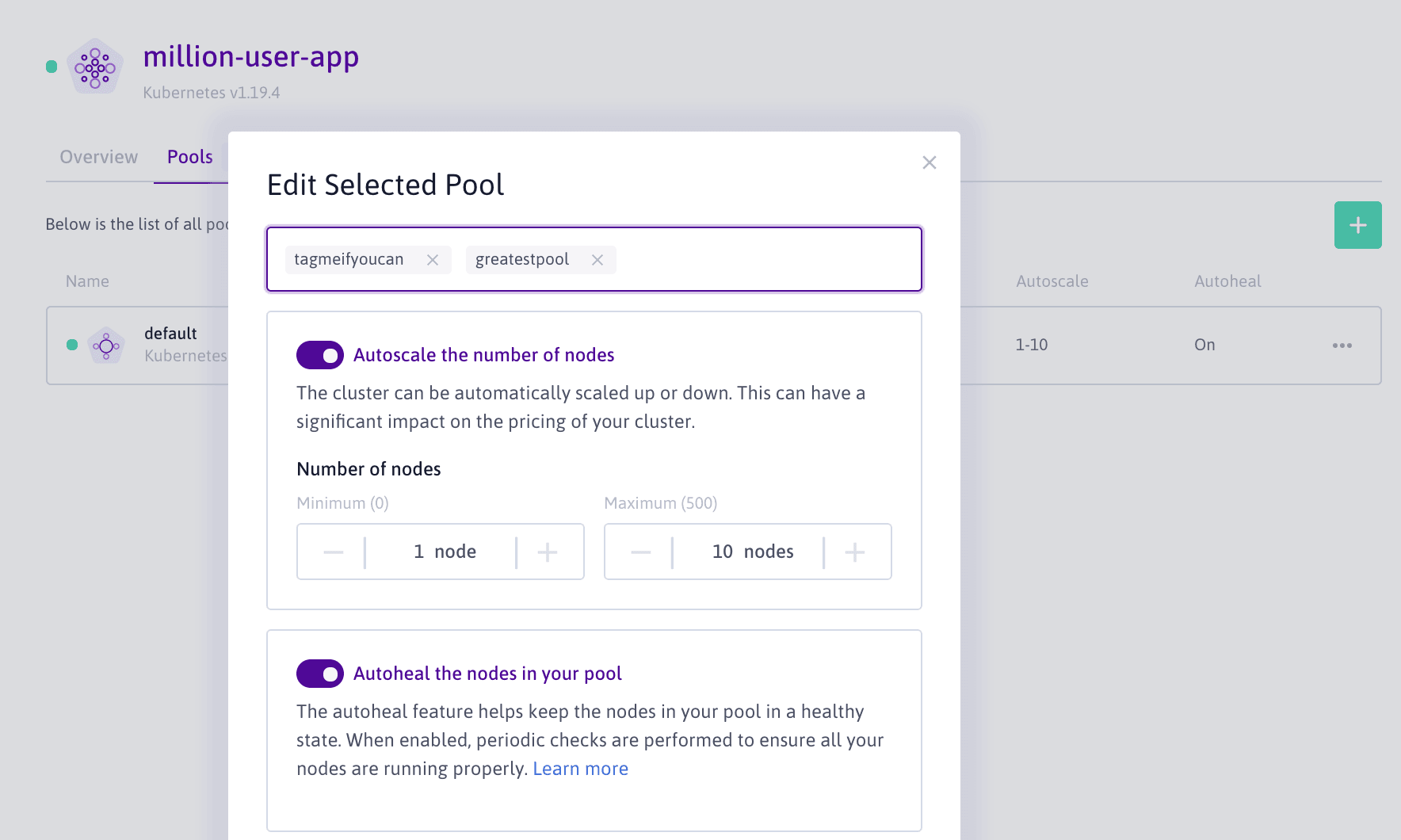

The autoscaling feature provided by Kubernetes Kapsule exposes a wide range of parameters, so you can manage your costs and configure your cluster with all the flexibility you need.

If you need more information about the Autoscaler, please have a look at the FAQ.

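You can:

- disable scale-down (scale_down_disabled)
- set how long to wait after a scale-up before scale-down evaluation resumes (scale_down_delay_after_add)
- choose the resource estimator used when scaling up (estimator)
- choose the node group expander used when scaling up (expander)
- ignore DaemonSet pods when computing resource utilization for scale-down (ignore_daemonsets_utilization)
- balance the number of nodes between similar node groups (balance_similar_node_groups)
- define the priority below which pods are considered expendable (expendable_pods_priority_cutoff)
- set how long a node must be unneeded before it becomes eligible for scale-down (scale_down_unneeded_time)

Here is how you can set up the autoscaler with the Scaleway CLI: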

    scw k8s cluster update xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx \
      autoscaler-config.scale-down-delay-after-add=5m \
      autoscaler-config.scale-down-unneeded-time=5m \
      autoscaler-config.scale-down-utilization-threshold=0.7

Tags set on Kubernetes Kapsule pools will automatically be synchronized with the pool nodes.

For instance, if you add the tag greatestpool on a pool, nodes within this pool will automatically be labeled k8s.scaleway.com/greatestpool with an empty value.

A tag such as greatestpool=ever will label nodes as k8s.scaleway.com/greatestpool: ever.
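These synchronized labels can then be used for scheduling. As a minimal sketch, assuming a pool tagged greatestpool=ever as in the example, a pod could be pinned to that pool with a nodeSelector. The pod name and image are placeholders.

```yaml
# Hypothetical pod pinned to nodes carrying the synchronized pool label.
apiVersion: v1
kind: Pod
metadata:
  name: pinned-to-greatestpool
spec:
  nodeSelector:
    k8s.scaleway.com/greatestpool: ever   # label derived from the pool tag
  containers:
    - name: app
      image: nginx:1.25                   # placeholder image
```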

Also, if you create a special pool tag named taint=foo=bar:Effect, where Effect is one of NoExecute, NoSchedule or PreferNoSchedule, it will create a k8s.scaleway.com/foo: bar taint with the specified effect on nodes composing the pool.

For instance, if you have a GPU pool with a NoSchedule taint on it, pods that do not have a matching toleration will not be scheduled on the nodes of that pool. Only your GPU pods that carry the toleration can be scheduled there.

Read more about taints and tolerations, and find out more about Scaleway tag management.
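As an illustration, assuming the pool carries the tag taint=foo=bar:NoSchedule from the example, which produces the taint k8s.scaleway.com/foo=bar with the NoSchedule effect, a pod would need a toleration shaped like the one below to be accepted on those nodes. The pod name and image are placeholders.

```yaml
# Hypothetical pod tolerating the taint created by the pool tag
# taint=foo=bar:NoSchedule.
apiVersion: v1
kind: Pod
metadata:
  name: tolerates-pool-taint
spec:
  tolerations:
    - key: k8s.scaleway.com/foo
      operator: Equal
      value: bar
      effect: NoSchedule
  containers:
    - name: app
      image: nginx:1.25         # placeholder image
```

A toleration only allows the pod onto the tainted nodes; it does not force it there. To make sure GPU workloads actually run on the GPU pool, the toleration is typically combined with a nodeSelector or node affinity on a pool label.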

You can specify an upgrade_policy.max_unavailable and an upgrade_policy.max_surge on each pool to control how it behaves on cluster upgrades.

- max_unavailable: the maximum number of nodes that can be upgraded at the same time
- max_surge: the maximum number of nodes that can be created while the pool is upgrading

To set your upgrade policy with the Scaleway CLI, you can use the following snippet:

    scw k8s pool update xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx \
      upgrade-policy.max-surge=1 \
      upgrade-policy.max-unavailable=3

If you have not yet watched our expert webinar on Kubernetes, it is worth getting some popcorn and sitting back for it.

To create a cluster and get started in minutes with the Scaleway CLI, simply run:

    scw k8s cluster create name=production \
      version=1.20.2 cni=cilium pools.0.node-type=DEV1-M \
      pools.0.size=3 pools.0.name=default
