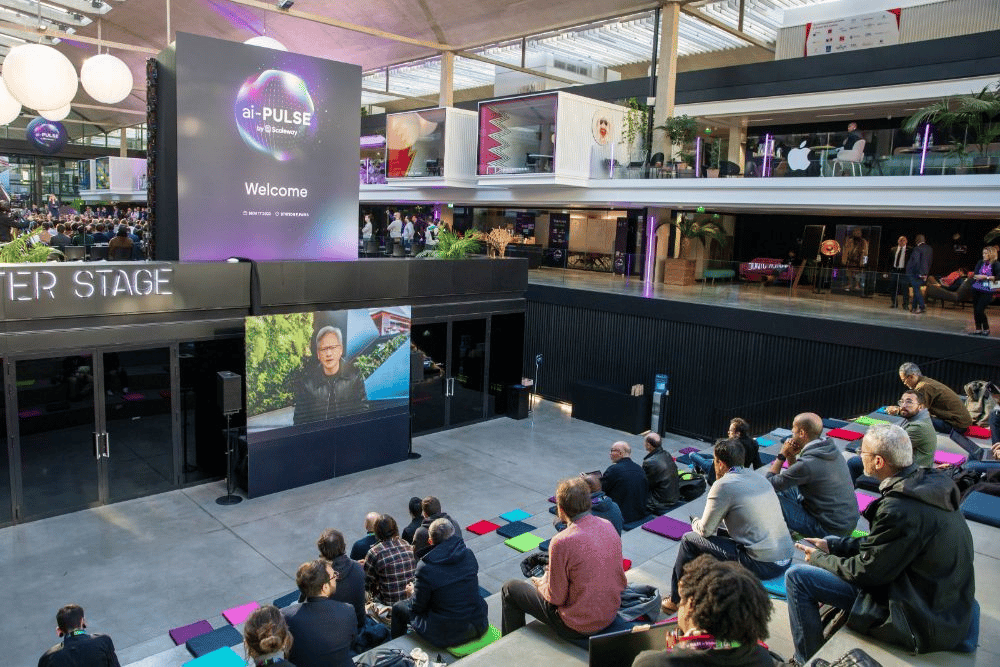

The first edition of the AI conference ai-PULSE, held at Station F on November 17, was one to remember. Artificial intelligence experts joined politicians and investors to shape Europe’s first concerted response to US and Chinese AI dominance. Here’s a first sweep of the most headline-worthy quotes, before we take a deeper dive into these subjects later on. Enjoy!

Sovereignty, the key to tech’s latest global battle

If ai-PULSE’s speakers and visitors agreed on one thing, it was the need for French and European AI. Even NVIDIA CEO Jensen Huang, whose GPUs power the world’s leading AI systems, said: “Every country needs to build their sovereign AI that reflects their own language and culture. Europe has some of the world’s biggest manufacturing companies. The second wave of AI is the expansion of generative AI all around the world.”

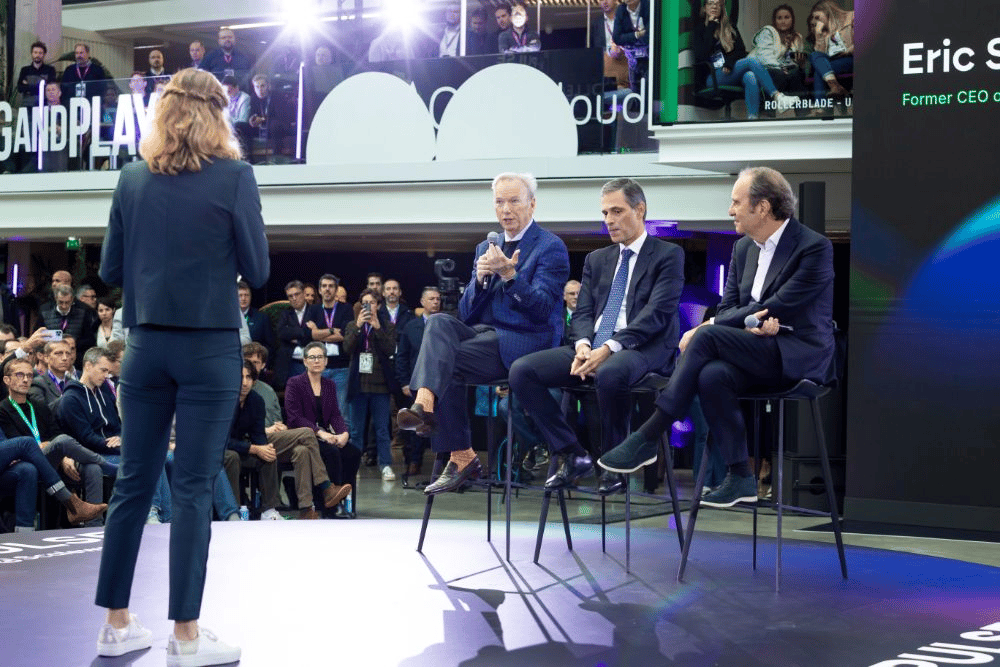

Eric Schmidt, who has an equally global view as former CEO of Google, agreed: “It’s obvious that France should be a leader in this domain. These people have got the tech right. We don’t fully understand how to make profound learning happen. For that you need lots of smart people, and a lot of hardware, which somehow Xavier [Niel, Group iliad Founder] has managed to arrange. So this is where AI is happening today.”

Niel himself went further when unveiling Kyutai, a new France-based AI research lab, a non-profit in which he, Schmidt and CMA CGM CEO Rodolphe Saadé have invested nearly €300 million. “I’d like us to talk about French AI imperialism!” enthused Niel in a mid-day press conference. “We want to create an ecosystem, like we did when we created 42, or Station F. The idea is for the whole world to advance positively. We can change the game; and lead it.”

French digital minister Jean-Noël Barrot was equally bullish about France’s AI prospects. “We may have lost some battles in the digital war, but we haven’t lost this one”, he said. “And we have quite an army, as I can see here today: Mistral, and many more, will help France lead and win this war.”

This enthusiasm was echoed by French President Emmanuel Macron, whose video address to ai-PULSE welcomed the decision to form Kyutai. “The commitment of the private sector alongside the public sector is absolutely key”, he said. “The fact you decided to invest at least €300 million in AI here, in order to educate, to keep, to train talents, to help, to increase our capacities, to increase our infrastructures, to be part of this game and to help France to be one of the key leaders in Europe is a very important moment. This initiative, and your conference, is not just to speak and to exchange views; it’s the start of a conference where people put [invest] money and people start deciding. And what I want us to do in months to come is precisely to follow up, and then to decide.”

Sovereignty has long been a key priority for Scaleway, so offering AI solutions within its own data centers makes perfect sense, said CEO Damien Lucas, who had announced the company’s new range of AI products (above) earlier in the day. “When I joined Scaleway, I heard lots of clients complain about only having access to American solutions”, he said in a press conference with Niel. “Why would people join Thales [S3NS] when it’s essentially rebranded Google? It’s our responsibility to offer services of the same quality. Let’s work towards being a plausible alternative in as many cases as possible.”

Open source - and science - or nothing

What will be the exception française of this new hexagonal revolution? Open source, bien sûr! Whilst AI leaders such as OpenAI, and some GAFAMs, are famously opaque about how their models are created, and refuse to explain their systems’ decisions, the European models in the spotlight at ai-PULSE were by definition open to all; i.e. free, and ready to copy and retrain at will, for the greater good of the sector.

Niel was also emphatic on this point. “These [Kyutai's] models will be available to everyone, even AWS!” said the owner of cloud providers like Scaleway or Free Pro. All of Kyutai’s findings will be published “in open science, which means the models’ source code will be made public. It’s something GAFAM is less and less tolerant of. Whereas we know that scientists need to publish. There is no business objective or roadmap. Do we want our children using things that weren’t created in Europe? No. So how do we obtain things that suit us better? ChatGPT’s initial budget was €100m per year. But we’re going to benefit from open source on top of that.”

Huang was very much aligned. “I’m a big fan of open source,” said NVIDIA’s leader. “Without it, how would AI have made the advances it has in recent years? Open source’s ability to pull in the engagement of all types of companies keeps the ecosystem innovative… and safe, and responsible. It allows hundreds of thousands of researchers to engage with (AI) innovation.”

This is precisely why President Macron wants open source “to be a French force”... and Barrot expressed his support for “models that are open, and so open source, as that stimulates innovation.”

“Open source is the way society moves fast,” affirmed Schmidt. “Most of the platforms we use today are basically open source. My guess is the majority of companies here will build closed systems on top of open ones.”

Resource optimisation: how to win the AI war

Most of the experts in ai-PULSE’s afternoon sessions are indeed working on open source solutions. A key recurring theme was how to optimize AI models’ resource usage, because the winners in this race will be those who can do more with less. In other words, you don’t necessarily need a sports car to win.

“You thought you were using a supercluster in a Ferrari, but in the end, you also divided its capacity to move several subjects forward at Dacia speed. Proving that you learn by doing!” said Guillaume Salou, ML Infra Lead at Hugging Face, one of the world’s most resource-aware AI companies, prior to his session on the importance of benchmarking large AI clusters (above).

Fellow French AI star Arthur Mensch, CEO and Co-Founder of Mistral AI, also stressed the importance of resource optimization. “In the ‘Vanilla Attention’ version of Transformers, you need to keep the tokens in memory”, he explained. “With Mistral AI’s ‘Sliding Window Attention’ model, there are four times fewer tokens in memory, reducing memory pressure and therefore saving money. Currently, too much memory is used by generative AI.” This is notably why the company’s latest model, Mistral-7B, can run locally on a (recent) smartphone, proving massive resources aren’t always necessary for AI.
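Mensch’s point about memory can be illustrated with a short Python sketch. It assumes a simplified model in which every cached token costs the same amount of memory and only the attention key/value cache is counted. `sliding_window_mask`, `RollingKVCache` and `cached_tokens` are hypothetical names, not Mistral AI’s code, and the sequence length and window size below are illustrative values only.

```python
from __future__ import annotations

from collections import deque


def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """Causal sliding-window mask: mask[i][j] is True when token i may attend to token j.

    Each token sees itself and at most `window - 1` earlier tokens,
    instead of every earlier token as in vanilla causal attention.
    """
    return [[j <= i and i - j < window for j in range(seq_len)] for i in range(seq_len)]


class RollingKVCache:
    """Keeps key/value entries for only the `window` most recent tokens.

    deque(maxlen=...) drops the oldest entry once it is full, so memory
    stays bounded however long the generated sequence becomes.
    """

    def __init__(self, window: int) -> None:
        self.entries: deque = deque(maxlen=window)

    def append(self, kv) -> None:
        self.entries.append(kv)

    def __len__(self) -> int:
        return len(self.entries)


def cached_tokens(seq_len: int, window: int | None = None) -> int:
    """Number of tokens whose keys/values must be held in memory."""
    return seq_len if window is None else min(seq_len, window)


if __name__ == "__main__":
    seq_len, window = 16_384, 4_096  # illustrative values, not a benchmark
    full = cached_tokens(seq_len)
    sliding = cached_tokens(seq_len, window)
    print(f"vanilla: {full} tokens, sliding window: {sliding} tokens, "
          f"{full / sliding:.0f}x fewer")
```

With these illustrative numbers the cache shrinks fourfold; in this simplified model the saving is simply the sequence length divided by the window, so it grows for longer sequences and disappears for sequences shorter than the window.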

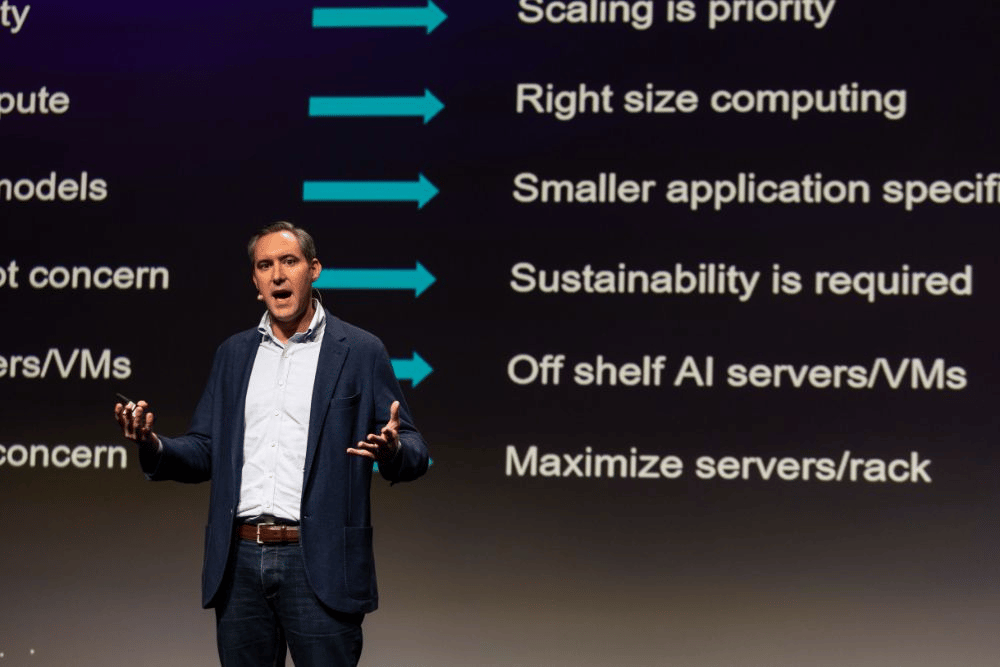

Jeff Wittich, CPO of chipmaker Ampere, also argued that you don’t always need Ferrari-level GPUs for all AI work, especially considering, as he pointed out, that just one NVIDIA DGX cluster uses 1% of France’s total renewable energy…

“Globally, 85% of AI computing is inference, versus 15% for training”, said Wittich. “So you need to right-size the AI compute to maximize cost effectiveness. For smaller models or computer vision, a CPU-only server is often the right choice. We’ve even seen amazing results with models of up to 7 to 10 billion parameters running on CPUs.” Major cloud energy and cost savings ensue, promised Wittich: Ampere client Lampi, for example, achieved 10x faster performance at one tenth of the cost of an x86 processor. More in our interview with Wittich, here.

But what if the entire sector shifted to power-hungry models like GPT-3 or GPT-4? This could lead to AI consuming as much energy as the Netherlands as early as 2027, Scaleway COO Albane Bruyas pointed out on the “Next-Gen AI Hardware” panel (above), citing research by Alex de Vries. “The worst thing you can do is have machines wasting power by being always on,” said James Coomer, Senior VP for Products, DDN. “[NVIDIA’s] Jensen Huang has the right idea. We have to do accelerated computing, which means integrating across the whole stack so that the application is talking to the storage, the storage to the network…”, i.e. the whole system constantly regulates itself to optimize its energy consumption.

Food for thought…

A taste of what’s to come

Which is all well and good, but what is this amazing technology really capable of today, and in the near future? Whilst Kyutai’s Neil Zeghidour said his organization’s objective was to “create the next Transformers” (the AI architecture that is now omnipresent), Poolside gave a particularly enticing glimpse of a future where code could effectively write itself.

According to Eiso Kant, Co-Founder & CTO of this fascinating US company, which recently relocated to Paris, “when you have an LLM (large language model) you’re training, you’re teaching it about code, by showing it lots of code. But when you show it how problems are solved (via our sandbox of 10k codebases) you’re teaching it how to code. In the next 5 years, all AI models will come from synthetic data - i.e. data made by another AI - so you’ll end up with code that’s entirely not made by humans.”

This was precisely one of the predictions of Meta Research Scientist Thomas Scialom: “Soon, you can expect LLMs to make their own tools, because they(‘ll) have some ability to code. That’s a whole new universe for research. If, for example, I want some code to, say, lower-case all my text, the model generates code to do that. But now, it can execute the code, see what an input gives and [compare it with] the output from the real world, grounded in code execution. Then the LLM can reflect [on] its own expectations”... and effectively learn to code.
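Scialom’s example (generate code, run it, compare the result with what was expected) can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not Meta’s method: the two “generated” candidates are hard-coded strings standing in for LLM output, and `run_candidate` and `check_against_examples` are hypothetical helpers. The list of mismatches plays the role of the execution feedback a model could reflect on.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical "model outputs": in a real system these strings would come from an LLM.
GOOD_SOURCE = '''
def to_lower(text):
    return text.lower()
'''

BUGGY_SOURCE = '''
def to_lower(text):
    return text.capitalize()
'''

# Input/expected-output pairs that ground the check in actual execution.
EXAMPLES = [("Hello World", "hello world"), ("ABC", "abc")]


def run_candidate(source: str, func_name: str) -> Callable[[str], str]:
    """Execute generated source and return the named function.

    WARNING: exec() runs arbitrary code; a real system would use an isolated sandbox.
    """
    namespace: dict = {}
    exec(source, namespace)
    return namespace[func_name]


def check_against_examples(func, examples):
    """Return (input, expected, actual) for every example the candidate gets wrong."""
    failures = []
    for given, expected in examples:
        try:
            actual = func(given)
        except Exception as exc:  # a crash counts as a failure rather than stopping the loop
            actual = f"error: {exc!r}"
        if actual != expected:
            failures.append((given, expected, actual))
    return failures


if __name__ == "__main__":
    for label, source in [("good", GOOD_SOURCE), ("buggy", BUGGY_SOURCE)]:
        failures = check_against_examples(run_candidate(source, "to_lower"), EXAMPLES)
        # These mismatches are the feedback a model could use before trying again.
        print(label, "passes" if not failures else f"fails on {failures}")
```

Running the script reports that the `.lower()` candidate passes while the `.capitalize()` candidate fails on both examples. Using `exec` keeps the sketch short, but it is unsafe for untrusted code, which is why code-execution setups typically run generated programs in a sandbox.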

So further off in the future, will these models be “just stochastic parrots generating text, or are they truly understanding what’s beneath the data?” asked Scialom. It’s hard to say, he concluded, but one thing’s for sure: “we can put more compute in the smaller models, in the bigger models, and we will have better models with the same recipe in five years’ time.”

To find out more about Scaleway’s AI solutions, click here; or to talk to an expert, click here.

Watch all of the day's sessions on our YouTube channel

& stay tuned for more ai-PULSE content soon!