Infrastructure as Code

Infrastructure as Code (also known as IaC) is an approach that automates the management and provisioning of infrastructure through code rather than through manual processes.

Over the last 48 hours, several Scaleway teams were on the front line to launch, in less than a day, a complete videoconferencing solution: Ensemble.

Free, open-source and sovereign, Jitsi VideoConferencing powered by Scaleway will be available for the duration of the Covid-19 crisis!

You will be able to use this solution to keep in touch with your family and friends, maintain your business, interact with your customers, meet your patients or prepare your exams with other students.

On Monday at 8 a.m., we decided to join the collective effort against #COVID19. By deploying Jitsi Meet, an open-source videoconferencing solution that provides secure virtual rooms with high video and audio quality, on more than one hundred Scaleway Instances, we aimed to facilitate remote communication for all amid the COVID-19 pandemic.

In a nutshell, ensemble.scaleway.com provides Jitsi servers. With so many people currently in need of a scalable videoconferencing solution, we felt it was our responsibility to offer an alternative able to handle a significant load of video bridge requests.

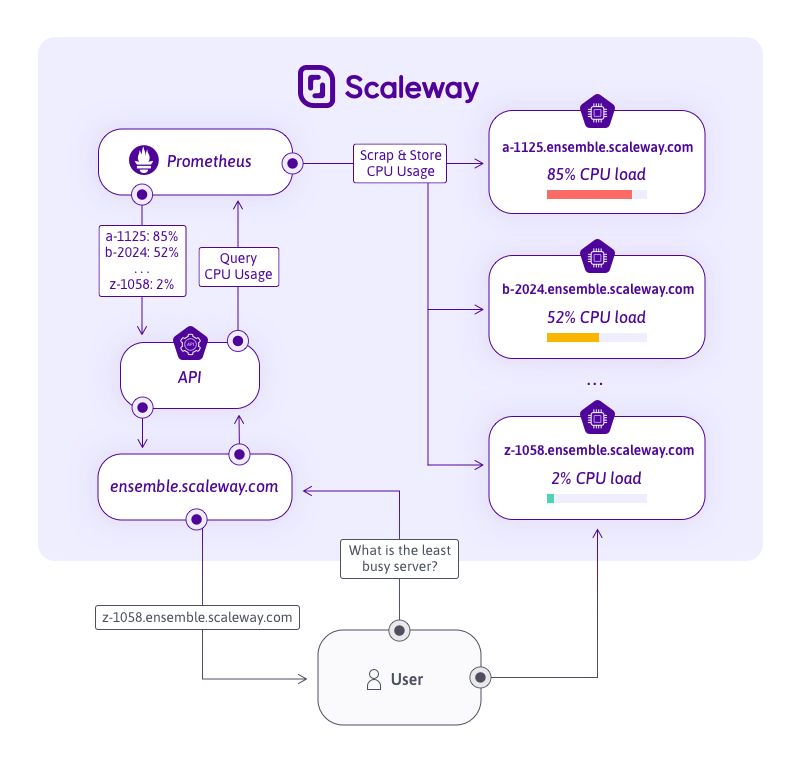

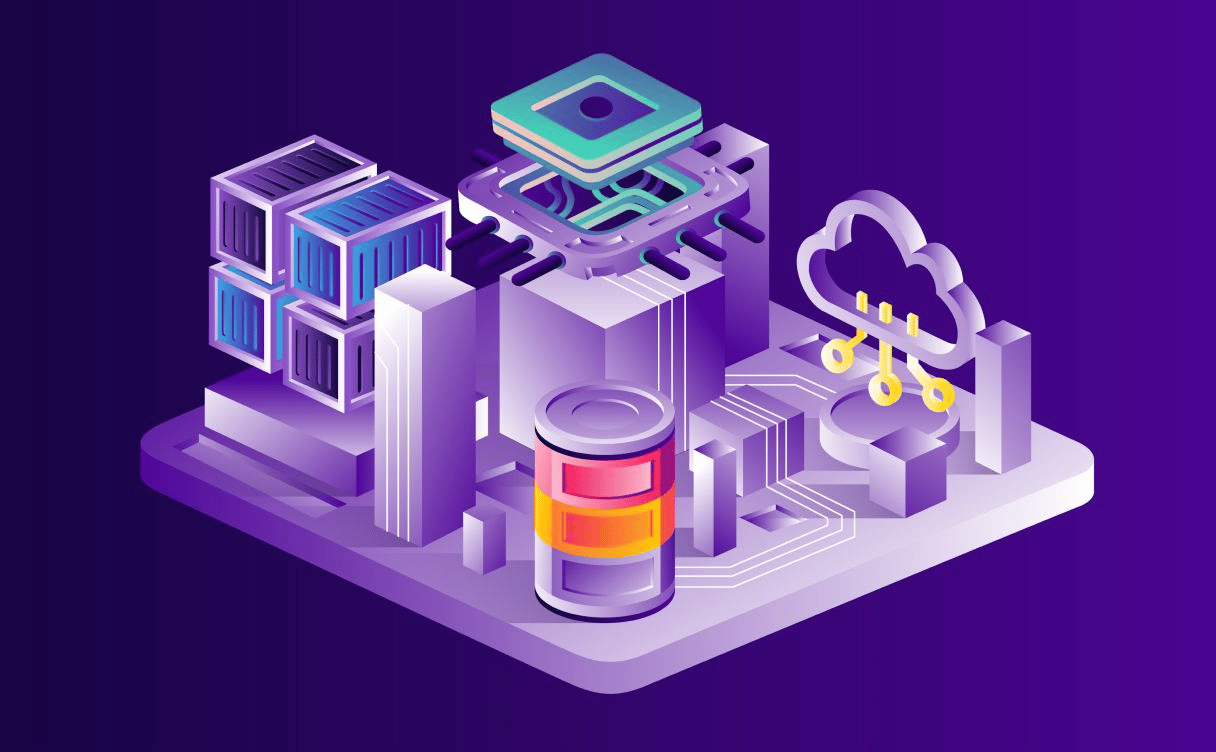

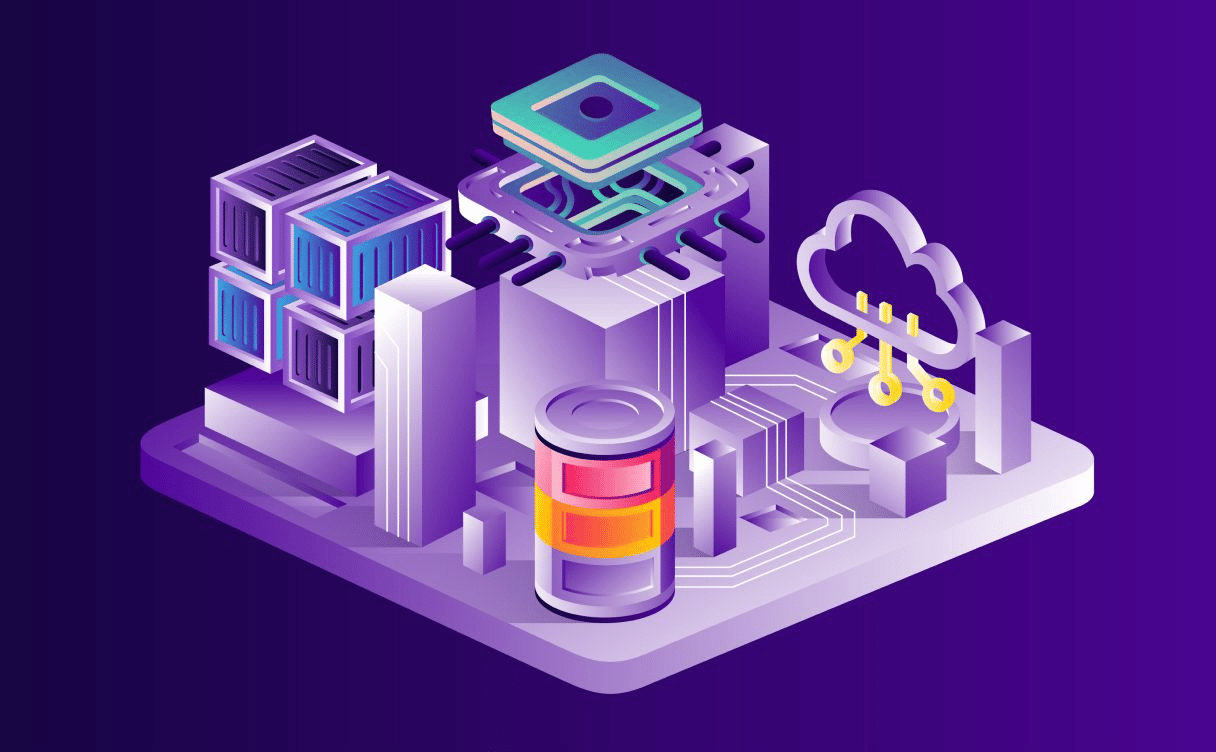

As shown in the architecture diagram, all Jitsi instances are constantly monitored to keep track of their capacity. This allows us to ensure that each user is given the least-used instance to create a virtual room and start a call.

The stateless web application is composed of a React front end and an API that queries Prometheus every 30 seconds to get the list of all available Jitsi servers and their current CPU usage.
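As a rough illustration of that polling step, the sketch below parses a response in the shape returned by the Prometheus HTTP API for an instant query and turns it into a map of server to CPU usage. The host names, the values and the `parse_cpu_usage` helper are assumptions made for this example; the actual query and code used by the API are not shown in this article.

```python
import json

# Sample payload in the shape Prometheus returns from /api/v1/query for an
# instant vector. Host names and values are invented for illustration.
SAMPLE_RESPONSE = json.dumps({
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"instance": "h-0001.example.test:9100"},
             "value": [1584950400, "12.5"]},
            {"metric": {"instance": "h-0002.example.test:9100"},
             "value": [1584950400, "71.0"]},
        ],
    },
})


def parse_cpu_usage(body: str) -> dict[str, float]:
    """Map each `instance` label to its CPU usage (in percent)."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise ValueError("Prometheus query failed")
    usage = {}
    for sample in payload["data"]["result"]:
        # Each sample value is a [timestamp, "value-as-string"] pair.
        _timestamp, value = sample["value"]
        usage[sample["metric"]["instance"]] = float(value)
    return usage


cpu_by_server = parse_cpu_usage(SAMPLE_RESPONSE)
print(cpu_by_server)
```

In a real service, the body would come from an HTTP call to Prometheus repeated every 30 seconds, with the result cached between polls.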

The web application then selects the Jitsi server that has the most CPU available and returns its URL to the user. With that URL, a user can easily connect to the Jitsi server and start enjoying the call with optimal sound and video quality.

All Jitsi servers are deployed on Scaleway Instances, which can hold a large number of concurrent video bridges.

Now that we have explained the general architecture and the typical user workflow of this application, let's see how it is deployed using infrastructure as code technologies.
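The selection rule described above can be sketched in a few lines. This is a minimal sketch, assuming CPU usage is expressed in percent per server; the host names, the room name and the `pick_least_loaded` and `room_url` helpers are hypothetical and only serve the example.

```python
def pick_least_loaded(cpu_by_server: dict[str, float]) -> str:
    """Return the server with the lowest CPU usage, i.e. the most CPU available."""
    if not cpu_by_server:
        raise LookupError("no Jitsi server available")
    return min(cpu_by_server, key=cpu_by_server.get)


def room_url(server: str, room: str) -> str:
    """Build the URL handed back to the user for a given room."""
    return f"https://{server}/{room}"


# Invented CPU usage figures (percent) for three hypothetical servers.
usage = {
    "h-0001.example.test": 64.0,
    "h-0002.example.test": 18.5,
    "h-0003.example.test": 40.2,
}

best = pick_least_loaded(usage)
print(room_url(best, "family-call"))
```

Picking on CPU alone keeps the API stateless: every decision is derived from the latest monitoring snapshot rather than from per-room bookkeeping.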

Terraform is an infrastructure tool that manages cloud resources in a declarative paradigm. We decided to use the Scaleway Terraform Provider to manage all our infrastructure from a single versioned place. All changes applied to our infrastructure are tracked in a git repository.

To ensure consistency across concurrent Terraform executions, the Terraform state is persisted in a managed Scaleway Database for PostgreSQL instance. For that, we used the pg backend in Terraform.

We created all the required instances to make this application run:

DEV1-L type). These instances run the Jitsi videoconference solution.

These constitute the infrastructure of ensemble.scaleway.com. Now we are going to complete this Terraform module by enabling those instances to serve our application.

When creating an instance, you have to select or create an image. In each cloud deployment, instances are booted with a specific cloud image that is designed to meet the specific requirements of the instance.

First, we created a base image called base, which was the starting point for all the others. On this base image, we installed the requirements to run containers with Docker, docker-compose and a node_exporter that is used by our Prometheus monitoring system to know, among other information, the CPU usage of the machine.

From the base image, we then created a Jitsi image using the official Docker Compose distribution: docker-jitsi-meet. We also added an Nginx Prometheus exporter to the docker-jitsi-meet docker-compose file for monitoring purposes.

When a Jitsi instance boots with this image, docker-compose starts and the Jitsi server, which runs as a container, starts automatically as well.

Note that the base and Jitsi images are created with Ansible playbooks. This makes it easy to recreate the images when needed.

Finally, we created a front container image which gathers the web application code (React) and the API code (Node.js). This image will run inside containers that docker-compose will pull from a private Scaleway registry.

The base image provides the system-wide requirements, such as the operating system and other basic components like Docker, docker-compose, node_exporter... However, we needed to be able to deploy new versions of our applications without rebooting an instance with a new image. As a result, we decoupled the base image from the application, which is containerized.

The API and the React website, which are bundled in the same container image, are hosted on a private Scaleway registry. Once stored on the registry, images can be pulled onto the instance by the Docker daemon, controlled by docker-compose, to run the application. That comes in very handy when we need to deploy a new version of our API after a bug fix or a feature enhancement, as we only need to push the new container image to the registry and tell docker-compose to use the new version.

Now that our applications are deployed, let's see how we can make our API server reliable using a Load Balancer.

Load Balancers are highly available and fully managed instances that distribute the workload among your various services. They ensure the scaling of all your applications while securing their continuous availability, even in the event of heavy traffic. Load Balancers are built to use an Internet-facing front-end server to shuttle information to and from backend servers. They provide a dedicated public IP address and forward requests automatically to one of the backend servers based on resource availability.

In the context of Jitsi, we used our Load Balancer to automatically forward requests to our API servers based on resource availability. Our API servers are the ones providing information about the current load of each Jitsi server to ensure that the user is given the most available instance.

Load Balancers also allow us to add more API instances if the existing ones are too busy to handle the load. In addition, they can evict a faulty API server if it can no longer answer requests for whatever reason. We even added an extra reliability guarantee on our API instances by using Scaleway Placement Groups.
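To make the eviction behaviour more concrete, here is a simplified sketch of a backend pool that stops routing to a server after repeated failed health checks. It is not how the Scaleway Load Balancer is implemented: the `BackendPool` class, the failure threshold and the backend names are assumptions, and plain round-robin stands in for the resource-based balancing described above.

```python
from itertools import count


class BackendPool:
    """Round-robin over backends, skipping those that keep failing health checks."""

    def __init__(self, backends, max_failures=3):
        # Consecutive failed health checks per backend.
        self.failures = {backend: 0 for backend in backends}
        self.max_failures = max_failures
        self._counter = count()

    def report(self, backend, healthy):
        """Record a health-check result; a success resets the failure count."""
        self.failures[backend] = 0 if healthy else self.failures[backend] + 1

    def healthy_backends(self):
        return [b for b, n in self.failures.items() if n < self.max_failures]

    def next_backend(self):
        healthy = self.healthy_backends()
        if not healthy:
            raise RuntimeError("no healthy backend available")
        return healthy[next(self._counter) % len(healthy)]


pool = BackendPool(["api-1", "api-2"], max_failures=2)
pool.report("api-1", healthy=False)
pool.report("api-1", healthy=False)   # second failure: api-1 is evicted
print(pool.healthy_backends())
```

A backend that starts answering health checks again is put back in rotation as soon as `report(..., healthy=True)` resets its counter.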

Placement Groups allow you to organize instances into groups, distributing the load, and ensuring maximum availability. Placement groups have two operating modes. The first one is called max_availability. It ensures that all the compute instances that belong to the same group will not run on the same underlying hardware. The second one is called low_latency and does the exact opposite: it brings compute instances closer together to achieve higher network throughput. In the context of our application, we want to ensure that the API servers and Jitsi servers are as available as possible.

We enabled the max_availability mode across our two API servers so that they do not run on the same underlying hypervisor. Now that we have a reliable deployment of our API server, let's see how to secure the connection to the different ports available on those machines using Scaleway Security Groups.

Security groups enable you to create rules that either drop or allow traffic to or from certain ports of your server. Typically, a security group establishes a barrier between a trusted internal network and an untrusted external network, such as the Internet. The security group configuration is based on a set of inbound and outbound rules. We applied security groups to all the components of our architecture.

On the API instances, we only allowed HTTPS/HTTP connections and SSH remote access.

On the Jitsi instances, only SSH and ports that are required for Jitsi to work are allowed. All the others are blocked.
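The allow-list logic behind these rules can be sketched as follows. The exact Jitsi ports are not listed in this article: the values below (web traffic on 80/443 TCP and media on 10000 UDP) are an assumption based on common Jitsi Meet defaults, and the `Rule` type and `is_allowed` helper are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    protocol: str  # "tcp" or "udp"
    port: int


# Inbound allow-lists; anything not listed is dropped (default-drop policy).
API_INBOUND = {Rule("tcp", 22), Rule("tcp", 80), Rule("tcp", 443)}

# Assumed Jitsi ports, not taken from the article.
JITSI_INBOUND = {Rule("tcp", 22), Rule("tcp", 80), Rule("tcp", 443), Rule("udp", 10000)}


def is_allowed(rules, protocol, port):
    """Return True only if an explicit rule accepts this protocol/port pair."""
    return Rule(protocol, port) in rules


print(is_allowed(API_INBOUND, "tcp", 443))
print(is_allowed(API_INBOUND, "udp", 10000))
```

Starting from a default-drop policy and listing only what is needed keeps the exposed surface of each instance type small and easy to review.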

Let's complete this deployment by adding human-readable domain names for each component of this application.

Finally, we wanted to manage the DNS records for each of the instances (API, Jitsi servers and Prometheus). DNS records make a domain name such as h-5660.ensemble.scaleway.com resolve to the correct Jitsi instance for all users.

We use Scaleway Domains to manage the DNS records for the whole ensemble.scaleway.com solution. When provisioning a new Jitsi server in Terraform, we automatically generate a DNS record for this Jitsi instance.
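In our setup this is done by Terraform, but the idea of deriving one record per server can be sketched in Python. The `h-<number>` naming is inferred from the h-5660 example above; the `ARecord` type, the TTL and the IP address (taken from a documentation-only range) are assumptions.

```python
import ipaddress
from dataclasses import dataclass

ZONE = "ensemble.scaleway.com"


@dataclass(frozen=True)
class ARecord:
    name: str        # record name relative to the zone
    ip: str          # IPv4 address of the instance
    ttl: int = 300   # assumed TTL, in seconds


def jitsi_record(server_id: int, ip: str) -> ARecord:
    """Build the A record for one Jitsi server, rejecting invalid IPv4 addresses."""
    ipaddress.IPv4Address(ip)  # raises ValueError if `ip` is not valid IPv4
    return ARecord(name=f"h-{server_id}", ip=ip)


def fqdn(record: ARecord) -> str:
    return f"{record.name}.{ZONE}"


record = jitsi_record(5660, "203.0.113.10")
print(fqdn(record), record.ip)
```

Generating the record from the same data that creates the instance means a server never exists without a name, and a name never outlives its server.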

We generated a wildcard certificate for all subdomains of ensemble.scaleway.com. Each Jitsi server receives this certificate, which its Nginx server uses to handle HTTPS connections.

While currently in early access, you can already register for Scaleway Domains.
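A wildcard certificate covers exactly one label to the left of the domain, which is why a single certificate works for every h-xxxx host. The check below is a simplified sketch of that matching rule, not a replacement for a TLS library's hostname verification; the `matches_wildcard` helper is hypothetical.

```python
def matches_wildcard(hostname: str, pattern: str) -> bool:
    """Check a hostname against a certificate name such as '*.example.com'.

    The wildcard stands for exactly one non-empty left-most label.
    """
    host_labels = hostname.lower().split(".")
    pattern_labels = pattern.lower().split(".")
    if pattern_labels[0] != "*":
        return host_labels == pattern_labels
    return (
        len(host_labels) == len(pattern_labels)
        and host_labels[0] != ""
        and host_labels[1:] == pattern_labels[1:]
    )


print(matches_wildcard("h-5660.ensemble.scaleway.com", "*.ensemble.scaleway.com"))
print(matches_wildcard("ensemble.scaleway.com", "*.ensemble.scaleway.com"))
```

Note that the bare domain and deeper subdomains are not covered by the wildcard, so they would need their own names on the certificate.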

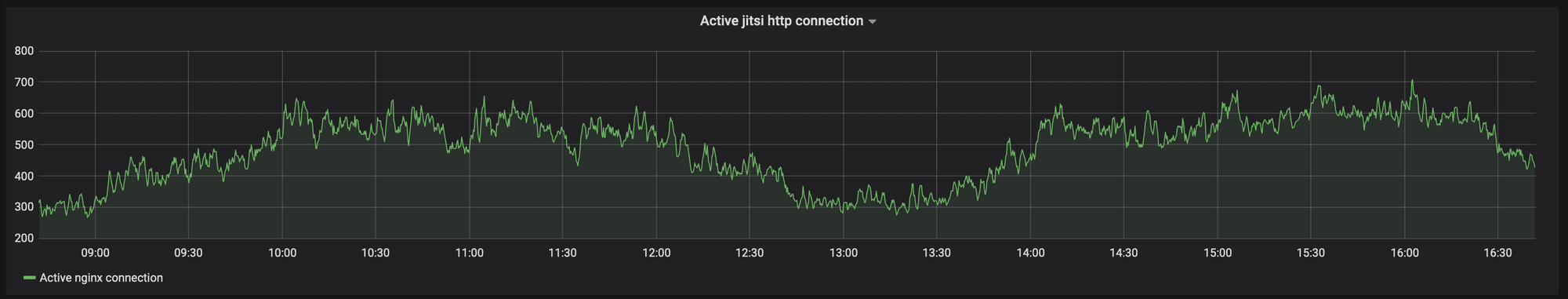

The ensemble.scaleway.com solution was built and deployed in a flash thanks to joint forces, and the first reactions are already very positive. The solution is widely used and the number of rooms created keeps increasing. In the last 8 hours, we monitored more than 800 active Jitsi connections, 1700 Jitsi rooms created and more than 6000 page views of ensemble.scaleway.com from all over the world.

We are still working actively on this project to provide support to as many people as possible in this challenging period. In particular, we will work to make this project and the code used to create this infrastructure available to all as soon as possible.

In the meantime, if you would like to set up your own Jitsi server, feel free to check our tutorials on how to install Jitsi on your server, whether you are using Debian 10 or Ubuntu 18.04.
