Serverless: 1 year in, and we’re just getting started

Scaleway’s Serverless ecosystem is one year old today—the perfect occasion to tell you all about how Serverless was built, and the new features planned for the year ahead.

This year, we will expand our Compute range to deliver you the best price-performance ratio on the market, enabling you to tackle even the most complex workloads easily. From powerful GPU Instances to new ARM-based processors, we're excited to share with you what we have in store.

We are bringing back ARM Instances this year with AMP2! AMP2 Instances are virtual machines based on the Altra Max, Ampere's first ARM processor built for cloud-native workloads. This new 64-bit ARM processor is robust and powerful, with predictable performance and one of the best energy efficiencies on the market.

AMP2 is being released by Scaleway Labs, meaning we’re involving customers earlier in the development process than we usually do. For those willing to brave the unknown, early tester feedback will be critical in helping to shape our ARM strategy in the coming years.

AMP2 is not meant for production, won’t benefit from our usual level of support, and may not be suited for mission-critical workloads. However, if you’re as excited as we are to bring ARM back to the Scaleway ecosystem, we’d love to have you as an early tester.

👉 Sign up for the AMP2 waiting list

The NVIDIA® H100 GPU is purpose-built for AI training and inference, scientific computation, and data analytics applications. These Instances are ideal for training, iterating, and going to production faster, unlocking new possibilities for your AI workloads. This product is a true game-changer in its own right, and for the Scaleway ecosystem it will unlock use cases with much larger datasets than our GPU products could previously handle.

The H100 GPU Instances are built to handle the most demanding workloads, enabling customers to train, iterate, and go to production faster. The NVIDIA® H100 GPU is one of the most powerful GPUs available on the market today, and it is optimized for deep learning and AI workloads. With up to 80 GB of GPU memory, the H100 GPU Instances can efficiently process large datasets and run complex algorithms.
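To put the 80 GB figure in perspective, here is a rough back-of-the-envelope sketch that estimates whether a model's weights alone would fit in that much GPU memory. The function names are hypothetical, the 80 GB is assumed to mean decimal gigabytes, and the estimate deliberately ignores activations, gradients, and optimizer state, which add substantially to memory use during training.

```python
# Bytes per parameter for common numeric formats (standard sizes).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}

# 80 GB from the text; assumed here to mean decimal gigabytes.
GPU_MEMORY_BYTES = 80 * 10**9


def weights_bytes(num_params: int, dtype: str) -> int:
    """Memory needed to hold the model weights only (hypothetical helper)."""
    return num_params * BYTES_PER_PARAM[dtype]


def fits_on_one_gpu(num_params: int, dtype: str) -> bool:
    """True if the weights alone fit in the assumed 80 GB of GPU memory."""
    return weights_bytes(num_params, dtype) <= GPU_MEMORY_BYTES


if __name__ == "__main__":
    for params, dtype in [(7_000_000_000, "fp16"), (30_000_000_000, "fp32")]:
        size_gb = weights_bytes(params, dtype) / 10**9
        print(f"{params:,} params in {dtype}: {size_gb:.0f} GB, "
              f"fits: {fits_on_one_gpu(params, dtype)}")
```

Treat the result as a lower bound: a model whose weights barely fit will usually not leave enough room to train or serve it comfortably.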

100+ AI Startups have already started building on top of Scaleway’s ecosystem, and we think the H100 GPU Instances will be a great reason for hundreds more to come discover Scaleway. As AI is becoming a central part of our society's discussion and evolution, we are already looking forward to seeing what you will build on H100 GPU Instances.

👉 Sign up for the H100 GPU Instance waiting list

Our Workload-Optimized computing ranges are designed to streamline your workflows and optimize your performance. These more specialized alternatives to our Production-Optimized range come equipped with dedicated vCPUs and adjusted vCPU:RAM ratios, optimized for intensive workloads.

👉 Sign up for the Workload-Optimized range waiting list

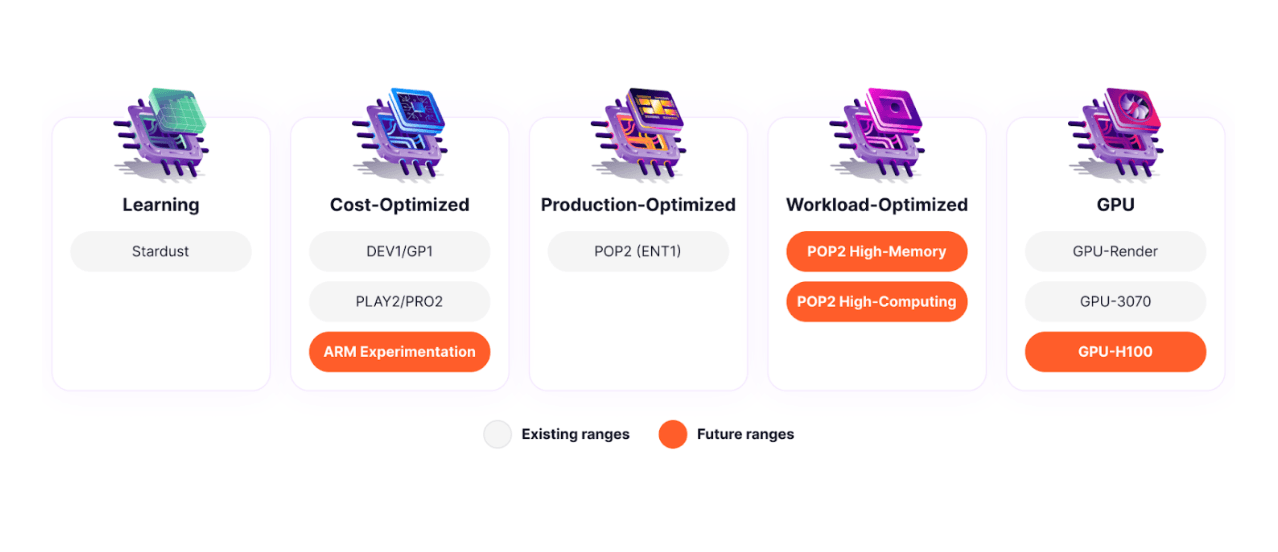

With the addition of these new incoming products, Scaleway supports several types of instances, each with its own set of resources, unique value propositions, and technical specifications:

Learning

The Instances from the Learning range are perfect for small workloads and simple applications. They are built to host small internal applications, staging environments, or low-traffic web servers.

Cost-Optimized

The Cost-Optimized range balances compute, memory, and networking resources. These Instances suit a wide range of workloads, from scaling development and testing environments to Content Management Systems (CMS) and microservices. They're also a good default choice if you're unsure which Instance type is best for your application.

Production-Optimized

The Production-Optimized range, which includes Enterprise Instances, delivers the highest consistent performance per core to support real-time applications. In addition, their computing power makes them generally more robust for compute-intensive workloads.

Workload-Optimized

Expanding the Production-Optimized range, the Workload-Optimized range will launch in the near future and will provide the same highest consistent performance as the Production-Optimized Instances. It will also come with the added flexibility of additional vCPU:RAM ratios, so you can fit your application’s requirements precisely without wasting any vCPU or GB of RAM.
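As a small illustration of why extra vCPU:RAM ratios reduce waste, the sketch below picks, from a set of made-up Instance shapes, the one that covers a workload with the least unused RAM. The shape names, sizes, and ratios are assumptions for the example only and do not describe actual Scaleway offers.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Shape:
    """A hypothetical Instance shape; not a real Scaleway offer."""
    name: str
    vcpus: int
    ram_gb: int


# Illustrative shapes with 1:2, 1:4 and 1:8 vCPU:RAM ratios (assumed values).
SHAPES = [
    Shape("ratio-1-2", 8, 16),
    Shape("ratio-1-4", 8, 32),
    Shape("ratio-1-8", 8, 64),
]


def best_fit(need_vcpus: int, need_ram_gb: int) -> Optional[Shape]:
    """Return the shape covering the need with the least unused RAM, then vCPUs."""
    fitting = [
        s for s in SHAPES
        if s.vcpus >= need_vcpus and s.ram_gb >= need_ram_gb
    ]
    if not fitting:
        return None  # no shape is large enough
    return min(
        fitting,
        key=lambda s: (s.ram_gb - need_ram_gb, s.vcpus - need_vcpus),
    )


if __name__ == "__main__":
    # A workload needing 8 vCPUs and 24 GB of RAM.
    print(best_fit(need_vcpus=8, need_ram_gb=24))
```

With only a 1:8 ratio on offer, the same workload would leave 40 GB of RAM idle; having a 1:4 option cuts that to 8 GB.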

As a hardware company, we’re excited to provide developers with a range of new options for running their workloads in the cloud. We’re committed to building the ideal backbone for European startups to scale sustainably.

But we’re first and foremost a software company.

We know that the tools and platforms you use to build your applications are as important as the underlying hardware. That’s why we’re also working to consistently improve our Serverless offering (you can find our public roadmap here) and our managed Kubernetes products, Kapsule and Kosmos, to provide you with everything you need to build, deploy, and scale your applications.

We are building our products to cater to the infrastructure of the most ambitious startups: come tell us what you are building on top of them!

