Advanced Kubernetes Kapsule features you might have missed!

Some features may be hard to find and used only by the most advanced users. We thought you might be interested in going further and optimizing your use of Kubernetes Kapsule.

In today’s economic context, agility and continuous improvement are essential drivers of success. Every organization, regardless of its mission, relies on a strong technological foundation, increasingly bolstered by artificial intelligence (AI) solutions.

As a key player in the French market, Golem.ai offers a range of products, spearheaded by Inboxcare, designed to optimize customer relations for businesses by integrating AI models to ensure fast and efficient processing of incoming messages.

As a Scaleway Cloud customer, Golem.ai runs its infrastructure on managed services, including Kubernetes and managed databases. As highlighted in recent reports from the Data on Kubernetes (DoK) community, Kubernetes has established itself as a key tool for integrating AI solutions, thanks to its advanced workload orchestration capabilities and its support for CI/CD pipeline automation. By managing the lifecycle of containers, which are central to this ecosystem, Kubernetes enables efficient, high-performing resource management.

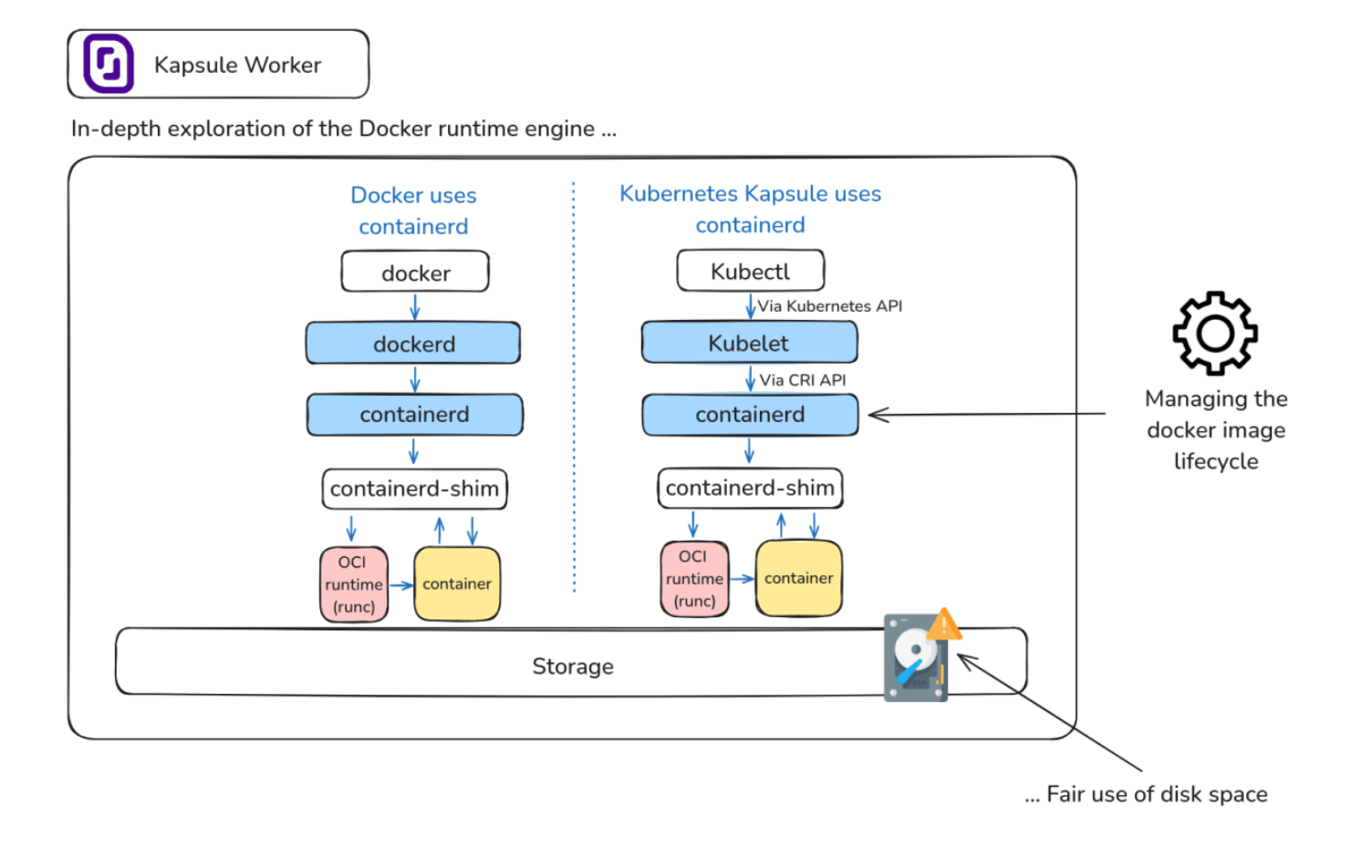

In this article, we explore a method implemented by Golem.ai to optimize disk space on Kapsule, Scaleway's managed Kubernetes cluster solution. The practice relies on careful management of the container images stored on the nodes, which run the Containerd runtime, and is a useful recommendation for improving resource management in containerized environments.

As a reminder, Kapsule users have direct access to the nodes' file system, but manipulating it, for example by connecting to a node via SSH, is not recommended. Keep in mind as well that, to improve performance and speed up application startup (especially with Horizontal Pod Autoscaling driven by KEDA), the container runtime prioritizes images already present in its cache over downloading them from a container registry. This caching also keeps images available in the event of a container registry incident.

But what should be done with images that remain stored on a node and, over time, take up a significant share of its disk space?

One option would be to increase the size of the nodes' block storage to hold more images. However, this would add costs and operational risks to Golem.ai's production environment, all to host outdated and unnecessary images.

At Golem.ai, the lifecycle management of images on nodes is automated through a process that lists and removes unused images after a certain period. As illustrated in this article, this process interacts directly with the container runtime, Containerd, from a pod. Its goal is to keep pace with a fast deployment schedule of multiple production releases per day, which together consume several gigabytes of storage across our various environments.

This lifecycle management approach frees up disk space, prevents node saturation (a common challenge in cluster management), optimizes resource usage, and reduces cloud costs, both financial and environmental, through a smaller carbon footprint.

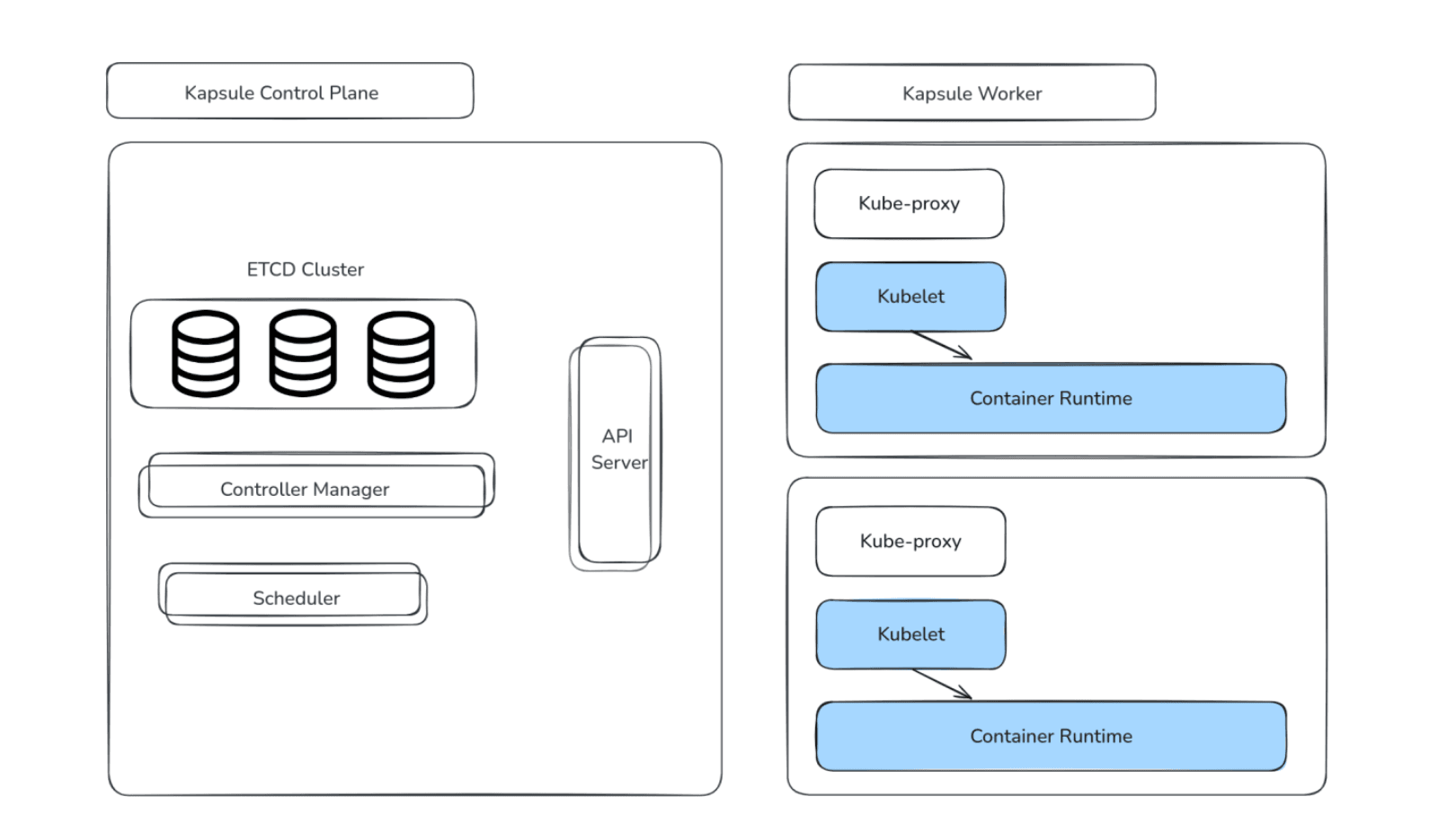

Here is an overview of a Kapsule cluster, consisting of the Control Plane (Master) and the nodes (Workers) on which the workloads are executed.

On the Kapsule worker nodes, Golem.ai's cleanup process scans the images held by the container runtime (Containerd) and removes those that do not comply with the lifecycle policy.

During the scan performed every day at midnight, Golem.ai's cleanup process removes all unused images that were created more than 30 days ago[1].

This operation applies only to images from Golem.ai's GitLab Registry or from the backup registry hosted on Scaleway Registry, which serves as a fallback in case of a GitLab Registry failure.

To do this, the pod running the scan mounts the host's Containerd runtime socket as a volume, and two environment variables allow the crictl CLI to communicate with this mounted socket.

```yaml
# Pod spec: expose the host's Containerd socket to the pod
volumes:
  - name: containerd
    hostPath:
      path: /var/run/containerd/containerd.sock
      type: Socket
```

```yaml
# Container spec: point crictl at the mounted socket
env:
  - name: CONTAINER_RUNTIME_ENDPOINT
    value: unix:///var/run/containerd/containerd.sock
  - name: IMAGE_SERVICE_ENDPOINT
    value: unix:///var/run/containerd/containerd.sock
volumeMounts:
  - name: containerd
    mountPath: /var/run/containerd/containerd.sock
```

The recurring task runs every day at midnight on all nodes of the cluster's various pools, using a DaemonSet whose cron schedule is defined in a ConfigMap.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: imgcleanupcjcm
  namespace: ops-tools
  labels:
    component: imgcleanup
data:
  # Remove unused images from registry.gitlab.com/golem-ai or rg.fr-par.scw.cloud that are more than 30 days old.
  cronjobs: "0 0 * * * cd /tmp/ && bash imgcleanupscript.sh"
```

The entire setup is available on Golem.ai's public GitHub repository:

https://github.com/golem-ai/clean-docker-image-kapsule-scaleway
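The repository above holds the actual manifests. As a rough orientation only, here is a minimal DaemonSet sketch showing one way the socket mount, the crictl environment variables, and the `imgcleanupcjcm` ConfigMap could fit together. The DaemonSet name, the container image, the catch-all toleration, and the way the ConfigMap is exposed to a cron daemon (as a crontab file at the hypothetical path `/etc/crontabs/root`) are assumptions for illustration, not Golem.ai's implementation.

```yaml
# Hypothetical sketch: the name, image, toleration and crontab path are assumptions.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: imgcleanup
  namespace: ops-tools
  labels:
    component: imgcleanup
spec:
  selector:
    matchLabels:
      component: imgcleanup
  template:
    metadata:
      labels:
        component: imgcleanup
    spec:
      tolerations:
        - operator: Exists            # tolerate every taint so each pool gets a pod (assumption)
      containers:
        - name: imgcleanup
          # Hypothetical image assumed to ship a cron daemon, bash, crictl
          # and imgcleanupscript.sh in /tmp.
          image: example.invalid/imgcleanup:latest
          env:
            - name: CONTAINER_RUNTIME_ENDPOINT
              value: unix:///var/run/containerd/containerd.sock
            - name: IMAGE_SERVICE_ENDPOINT
              value: unix:///var/run/containerd/containerd.sock
          volumeMounts:
            - name: containerd
              mountPath: /var/run/containerd/containerd.sock
            - name: crontab
              mountPath: /etc/crontabs  # hypothetical; depends on the cron implementation
      volumes:
        - name: containerd
          hostPath:
            path: /var/run/containerd/containerd.sock
            type: Socket
        - name: crontab
          configMap:
            name: imgcleanupcjcm
            items:
              - key: cronjobs         # the schedule line from the ConfigMap
                path: root            # exposed as /etc/crontabs/root
```

A DaemonSet, rather than a Kubernetes CronJob, guarantees one cleanup pod per node, which matters here because each node keeps its own local image cache.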

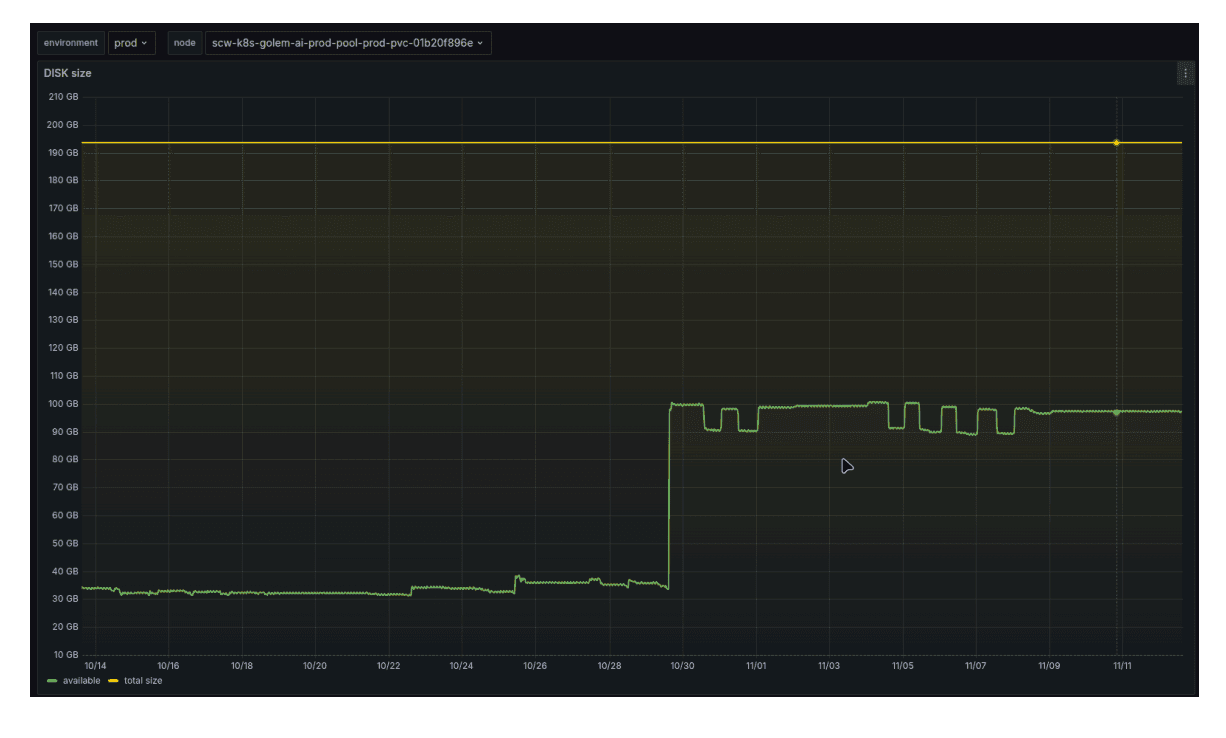

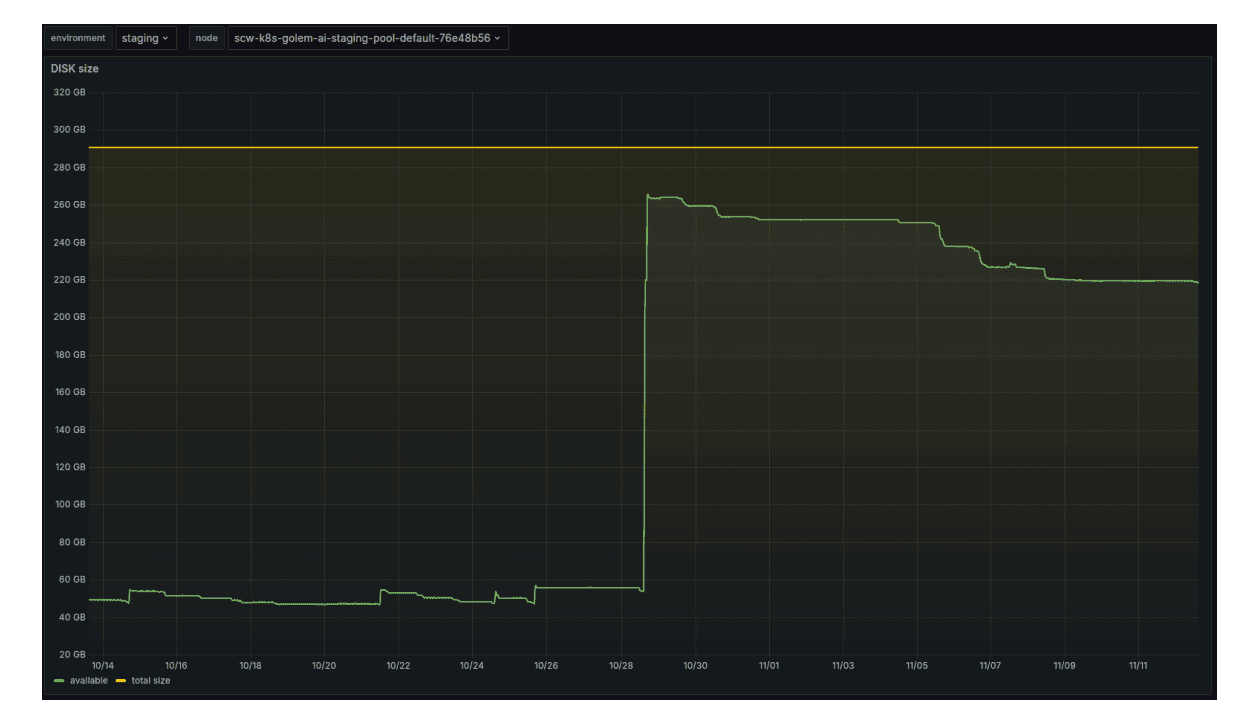

The result is a threefold increase in available storage on the Production and PreProduction machines, with no impact on client workflow processing. As shown by the green line in the image above, the disk space gained is approximately 250 GB across all Production machines and 350 GB across PreProduction machines.

In the Staging environment, the result is even more noticeable: freed storage space increased fourfold (see the image below), releasing approximately 1 TB of disk space across all Staging machines.

Golem.ai benefits from an automated O&M (Operations and Maintenance) system designed to serve its clients and development teams efficiently. This system saves significant time and money across the entire value chain while ensuring optimal processing of its clients' valuable workflows.

The approach described in this article is based on rigorously developed practices aimed at maximizing performance and efficiency, both key for AI solutions. It meets the needs of businesses seeking high-performing infrastructure while also aligning with a broader commitment to sustainability and responsibility.

For further reading:

Forsgren, Nicole, Jez Humble, and Gene Kim. Accelerate: The Science of Lean Software and DevOps: Building and Scaling High Performing Technology Organizations. IT Revolution Press, 2018.

Data on Kubernetes 2024: Beyond Databases: Kubernetes as an AI Foundation. Research report, November 2024.

Campos Zabala, Francisco Javier. Grow Your Business with AI: A First Principles Approach for Scaling Artificial Intelligence in the Enterprise. Apress, 2023.

[1] The method described in this article complements the default kubelet cleanup process and is not part of it. As of this article's publication date, no stable version of the kubelet can be configured to clean up container images based on their creation date or their origin registry. Source: https://kubernetes.io/docs/concepts/architecture/garbage-collection/#containers-images
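For comparison with footnote [1], the kubelet's built-in image garbage collection is tuned through `KubeletConfiguration` fields like the ones in this excerpt (example values). It is triggered by disk-usage thresholds and by how long an image has gone unused, not by creation date or source registry. Whether these fields can be changed on Kapsule node pools is an open assumption here and should be checked against Scaleway's documentation.

```yaml
# Illustrative KubeletConfiguration excerpt; values are examples only.
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
# When image filesystem usage exceeds this percentage, the kubelet starts
# deleting unused images, least recently used first...
imageGCHighThresholdPercent: 85
# ...until usage falls back below this percentage.
imageGCLowThresholdPercent: 80
# Never delete an image that has been unused for less than this duration.
imageMinimumGCAge: 2m
# Delete images unused for longer than this, regardless of disk usage.
# Added in Kubernetes 1.29 behind the ImageMaximumGCAge feature gate; 0s disables it.
# Note: this counts time since last use, not time since image creation.
imageMaximumGCAge: 720h
```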
