A simple definition of Kubernetes

Kubernetes (K8s) is an open-source platform for managing containerized workloads and services. Google, one of the historic public cloud providers, initially developed the project and made it publicly available in 2014. Building on Google's experience running its massive internal infrastructure, Kubernetes has since been adopted and further developed by many public cloud providers and independent developers, expanding its capabilities and features.

Since then, Kubernetes has developed a vast and rapidly growing ecosystem. The project's source code is available in its GitHub repository, encouraging collaboration and contributions from developers worldwide.

The name Kubernetes derives from the ancient Greek word meaning helmsman or pilot. This article explains the concept and the different components of Kubernetes.

Practical benefits and use cases

What is Kubernetes best used for?

Kubernetes is best used to automate the deployment, scaling, and operation of application containers. It’s ideal for microservices-based applications, CI/CD pipelines, and environments where resilience and scalability are critical. Kubernetes offers self-healing capabilities, automatically restarting failed containers and rescheduling them on healthy nodes.

Why should I use Kubernetes in my infrastructure?

Kubernetes provides a consistent, predictable environment for running applications at scale, with built-in support for load-balancing, automatic scaling, and self-healing. It abstracts the complexities of managing containers, enabling high availability and automated rollbacks. Kubernetes also helps manage sensitive information like OAuth tokens and SSH keys, ensuring secure, reliable operations across complex systems.

What types of applications can Kubernetes manage?

Kubernetes can manage both stateless and stateful applications, batch jobs, and machine-learning workflows. It’s particularly well-suited for distributed systems because it can automatically manage scaling, service discovery, and networking.

Examples of Scaleway Kubernetes use cases

High-availability web applications: Kubernetes' built-in load balancing and autoscaling let services scale while maintaining service continuity. Built-in DNS name resolution provides seamless service discovery.

Data processing and machine learning: Kubernetes is ideal for scheduling long-running batch jobs and machine-learning models, distributing workload efficiently across nodes.

Multi-cloud deployment: Kubernetes' flexibility allows workloads to run across different public cloud providers or on-premises environments. Scaleway’s Kubernetes Kosmos provides a managed multi-cloud solution for easily deploying advanced configurations.

Is Kubernetes too complex for small teams?

Kubernetes can be complex, but managed services like Scaleway Kubernetes Kapsule simplify setup. With a managed service, Scaleway operates the control plane, including the Kubernetes API, so small teams can focus on their applications without worrying about the underlying infrastructure.

How does Kubernetes work vs Docker?

Kubernetes and Docker work hand-in-hand, but they serve different purposes. Docker is a container runtime that is responsible for creating and running containers. Kubernetes, on the other hand, is an orchestrator that manages these containers across a cluster of machines. While Docker manages individual containers, Kubernetes is responsible for deploying, scaling, and managing containerized applications across multiple hosts, providing self-healing and ensuring consistent performance.

You can find more info on the below topics in this article… or you can just carry on here!

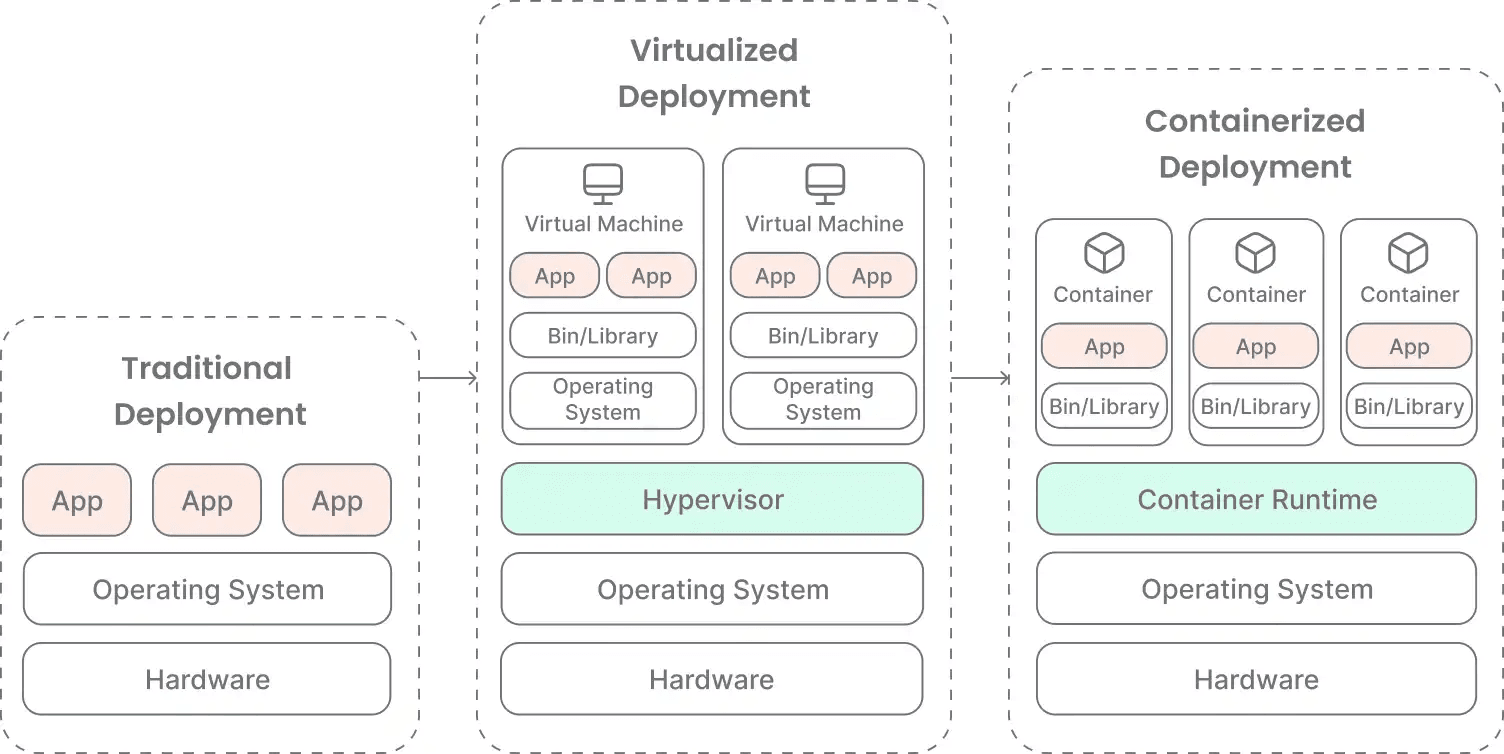

From traditional deployment to containerized deployment

To understand why Kubernetes and containerized deployment are so useful for today's workloads, let us go back in time and look at how deployment has evolved:

During the traditional deployment era, organizations ran applications directly on physical servers. There was no way to control the resources an application may consume, causing resource allocation issues. If an application consumed most of the server's resources, this high load might have caused performance issues on other applications running on the same physical server.

A solution would be to run each application on a dedicated server, which would cause resources to be under-used and maintenance costs to increase.

Virtual Machines (VMs) offered a first solution during the virtualized deployment era. Virtualization allowed applications to be isolated between different VMs running on the same physical server, providing a security layer and better resource allocation.

Although this solution reduces hardware costs, each VM still requires the same administration and maintenance tasks as a physical machine.

The containerized deployment era brought us the concept of containers. A container includes its running environment and all the required libraries for an application to run. Different containers with different needs can now run on the same VM or physical machine, sharing resources. Once configured, they are portable and can be easily run across different clouds and OS distributions, making software less and less dependent on hardware and reducing maintenance costs.

How Kubernetes can help you to manage containerized deployments

In a production environment, you may need to deal with huge numbers of containers, and you need to manage the containers running the applications to ensure there is no downtime.

Managing thousands of simultaneously running containers on a cluster of machines by hand sounds like an unpleasant task.

Kubernetes simplifies managing thousands of containers across a cluster of machines. With automatic scaling, load-balancing, and self-healing, Kubernetes ensures your applications run smoothly without manual intervention. It manages the lifecycle of containerized applications and services. It defines how applications should run and how they interact with other applications and with the outside world, while providing predictability, scalability, and high availability.

Kubernetes architecture

Kubernetes is able to manage a cluster of virtual or physical machines using a shared network to communicate between them. All Kubernetes components and workloads are configured on this cluster.

Each machine in a Kubernetes cluster has a given role within the Kubernetes ecosystem. The control plane (the "brain" of a Kubernetes cluster) exposes the Kubernetes API, handles self-healing, and ensures that the actual state matches the desired state. The control plane performs health checks, schedules workloads, and adjusts network rules.

Each machine that runs containers is a node and requires a container runtime such as containerd or CRI-O. Each node runs an agent, the kubelet, which communicates with the control plane and manages the pods on that node. Users, by contrast, interact with the control plane through kubectl, the Kubernetes command-line interface.

The different underlying components running in the cluster ensure that an application's desired state matches the actual state of the cluster. To ensure this, the control plane responds to any changes by performing necessary actions. These actions include creating or destroying containers on the nodes and adjusting network rules to route and forward traffic as directed by the control plane.
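The reconciliation described above can be pictured as a function that compares two states and returns corrective actions. The sketch below is a deliberately simplified illustration in Python, not the way Kubernetes is implemented: the function name `reconcile` and the dictionaries mapping an application name to a number of containers are assumptions made for this example.

```python
def reconcile(desired, actual):
    """Return the actions needed to move `actual` towards `desired`.

    Both arguments map a (hypothetical) application name to a number
    of running containers.
    """
    actions = []
    for app in sorted(set(desired) | set(actual)):
        diff = desired.get(app, 0) - actual.get(app, 0)
        if diff > 0:
            actions.append(("create", app, diff))
        elif diff < 0:
            actions.append(("destroy", app, -diff))
    return actions


desired_state = {"web": 3, "worker": 2}
actual_state = {"web": 1, "worker": 2, "legacy": 1}

# Two "web" containers are missing and "legacy" is no longer wanted.
print(reconcile(desired_state, actual_state))
```

In a real cluster this comparison runs continuously, so a container that crashes later is detected and replaced in the same way.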

A user interacts with the control plane either directly with the API or with additional clients by submitting a declarative plan in JSON or YAML. The plan, containing instructions about what to create and how to manage it, is interpreted by the control plane, which decides how to deploy the application.
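To make the idea of a declarative plan concrete, here is a minimal sketch that builds a Deployment manifest as a Python dictionary and serializes it to JSON. The field layout follows the `apps/v1` Deployment API; the name `hello-web`, the `app` label, and the `nginx:1.27` image are placeholders chosen for this example.

```python
import json

# Declarative description: "run three replicas of this container".
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "hello-web"},
    "spec": {
        "replicas": 3,
        # The selector must match the labels of the pod template below.
        "selector": {"matchLabels": {"app": "hello-web"}},
        "template": {
            "metadata": {"labels": {"app": "hello-web"}},
            "spec": {
                "containers": [
                    {
                        "name": "web",
                        "image": "nginx:1.27",
                        "ports": [{"containerPort": 80}],
                    }
                ]
            },
        },
    },
}

manifest = json.dumps(deployment, indent=2)
print(manifest)
```

Saved to a file such as `deployment.json`, a manifest of this shape can be submitted with `kubectl apply -f deployment.json`. Note that it states what should exist, not the steps to get there.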

Kubernetes components

Control plane components

These main components form the cluster’s control plane. They make global decisions about the cluster and detect and respond to cluster events.

Multiple applications and processes are needed for a Kubernetes cluster to run. They can be components guaranteeing the cluster’s health and status or processes allowing communication and control over the cluster.

kube-apiserver

The kube-apiserver is a component on the control plane that exposes the Kubernetes API. It is the front end of the Kubernetes control plane and the primary means for a user to interact with a cluster. The API server is the only component that communicates directly with etcd.

kube-scheduler

The kube-scheduler is a control plane component that watches for newly created pods with no node assigned yet and selects a node for them to run on.

It assigns the node based on individual and collective resource requirements, hardware/software/policy constraints, etc.
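The scheduling decision can be approximated as two steps: filter out the nodes that cannot host the pod, then rank the remaining ones. The following toy model is only a sketch under strong assumptions: it considers CPU (in millicores) and memory (in MiB) alone, and its ranking rule (prefer the node with the most free CPU) is an arbitrary choice for illustration, not the scoring the real scheduler applies. The function `pick_node` and the dictionary keys are hypothetical.

```python
def pick_node(pod, nodes):
    """Return the name of a node that can host the pod, or None."""
    # Step 1: keep only the nodes with enough free resources.
    feasible = [
        n for n in nodes
        if n["free_cpu_m"] >= pod["cpu_m"] and n["free_mem_mib"] >= pod["mem_mib"]
    ]
    if not feasible:
        return None  # the pod would stay pending
    # Step 2: rank the feasible nodes (here: most free CPU wins).
    return max(feasible, key=lambda n: n["free_cpu_m"])["name"]


nodes = [
    {"name": "node-a", "free_cpu_m": 500, "free_mem_mib": 2048},
    {"name": "node-b", "free_cpu_m": 2000, "free_mem_mib": 4096},
    {"name": "node-c", "free_cpu_m": 4000, "free_mem_mib": 512},
]
pod = {"cpu_m": 1000, "mem_mib": 1024}

# node-a lacks CPU and node-c lacks memory, so node-b is selected.
print(pick_node(pod, nodes))
```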

etcd

etcd is a consistent and highly-available key-value store that is used by Kubernetes to store its configuration data, its state, and its metadata.

kube-controller-manager

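The kube-controller-manager is a control plane component that runs controllers, the control loops that watch the state of the cluster and work to move it towards the desired state.

Logically, each controller is a separate process, but to reduce complexity, all controllers are compiled into a single binary and run in a single process.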

cloud-controller-manager

The cloud-controller-manager is a control plane component that maps generic representations of resources to actual resources, provided by non-homogeneous cloud providers. It manages cloud-provider-specific features, abstracting public and private cloud providers.

Node components

Servers that perform workloads in Kubernetes (running containers) are called nodes. Nodes may be VMs or physical machines.

Node components maintain pods and provide the Kubernetes runtime environment. They run on every node in the cluster.

kubelet

The kubelet is an agent running on each node. It ensures that the containers described in PodSpecs are running in a pod and are healthy. The agent does not manage containers that were not created by Kubernetes.

kube-proxy

The kube-proxy is a network proxy running on each node in the cluster. It maintains the network rules on nodes to allow communication to the pods inside the cluster from internal or external connections.

Kube-proxy uses the operating system's packet filtering layer if it exists or forwards the traffic itself if it does not.

Container runtime

Kubernetes can manage containers but is not capable of running them. Therefore, a container runtime is required that is responsible for running containers. Kubernetes supports several container runtimes, such as containerd or CRI-O, as well as any other implementation of the Kubernetes CRI (Container Runtime Interface). Since Kubernetes 1.24, Docker Engine is no longer supported directly and requires an additional CRI adapter.

Kubernetes objects

Kubernetes uses containers to deploy applications, but it also uses additional layers of abstraction to provide scaling, resiliency, and life cycle management features. These abstractions are represented by objects in the Kubernetes API.

Pods in Kubernetes

A pod is the smallest and simplest unit in the Kubernetes object model. Containers are not directly assigned to hosts in Kubernetes. Instead, one or multiple containers that are working closely together are bundled in a pod, sharing a unique network address, storage resources and information on how to govern the containers.

Services

A service is an abstraction that defines a logical group of pods that perform the same function and a policy on how to access them. The service provides a stable endpoint (IP address) and acts like a Load Balancer by redirecting requests to the different pods in the service. The service abstraction allows scaling out or replacing dead pods without changing an application's configuration.

By default, services are only available using internally routable IP addresses. Kubernetes also gives each service a DNS name, so applications can reach the pods behind it without knowing their individual addresses.

Services can be exposed publicly either by using the NodePort configuration, which works by opening a static port on each node’s external networking interface, or by using the LoadBalancer service, which creates an external Load Balancer at a cloud provider using Kubernetes load-balancer integration.
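The way a service groups pods and spreads requests over them can be sketched in a few lines of Python. This is a simplified model: the pod records, IP addresses, and the helper `select_pods` are invented for the example, and the strict round-robin order is an assumption, since the actual distribution depends on how kube-proxy is configured.

```python
from itertools import cycle


def select_pods(pods, selector):
    """Return the pods whose labels contain every key/value of the selector."""
    return [
        p for p in pods
        if all(p["labels"].get(k) == v for k, v in selector.items())
    ]


pods = [
    {"name": "web-1", "ip": "10.0.0.11", "labels": {"app": "web"}},
    {"name": "web-2", "ip": "10.0.0.12", "labels": {"app": "web"}},
    {"name": "db-1", "ip": "10.0.0.21", "labels": {"app": "db"}},
]

# The service targets every pod labeled app=web, whatever its name or IP.
backends = cycle(p["ip"] for p in select_pods(pods, {"app": "web"}))

# Four consecutive requests alternate between the two web pods.
routed = [next(backends) for _ in range(4)]
print(routed)
```

Because clients only address the service, a replaced pod with a new IP simply appears in the next selection without any change on the client side.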

ReplicaSet

A ReplicaSet contains a selector that identifies which pods it can acquire, the number of replicas it should maintain, and a pod template specifying the data of new pods created to meet the replica count. The task of a ReplicaSet is to create and delete pods as needed to reach the desired number.

Each pod belonging to a ReplicaSet is linked to it via the pod's metadata.ownerReferences field, allowing the ReplicaSet to know the state of each of its pods and to plan accordingly.
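The bookkeeping a ReplicaSet performs can be illustrated with a small sketch. It is a simplification: the `owners` list stands in for the pod's owner references, and the function `plan_replicaset` and the sample pod names are hypothetical. The rule it models is that a ReplicaSet keeps the pods it already owns, acquires pods that match its selector and have no owner, and then creates or deletes pods to reach the replica count.

```python
def plan_replicaset(rs_name, selector, replicas, pods):
    """Return which pods to adopt and how many to create or delete."""

    def matches(pod):
        return all(pod["labels"].get(k) == v for k, v in selector.items())

    owned = [p["name"] for p in pods if rs_name in p["owners"]]
    # Pods owned by another controller are left alone, even if labels match.
    adoptable = [p["name"] for p in pods if not p["owners"] and matches(p)]
    managed = len(owned) + len(adoptable)
    return {
        "adopt": adoptable,
        "create": max(replicas - managed, 0),
        "delete": max(managed - replicas, 0),
    }


pods = [
    {"name": "web-a", "labels": {"app": "web"}, "owners": ["web-rs"]},
    {"name": "web-b", "labels": {"app": "web"}, "owners": []},
    {"name": "web-c", "labels": {"app": "web"}, "owners": ["other-rs"]},
    {"name": "db-a", "labels": {"app": "db"}, "owners": []},
]

plan = plan_replicaset("web-rs", {"app": "web"}, 3, pods)
print(plan)
```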

However, Deployments are a higher-level concept managing ReplicaSets and providing declarative updates to pods with several useful features. It is therefore recommended to use Deployments unless you require some specific customized orchestration.

If your application requires only a single instance running at any time, Deployments can be configured to maintain exactly one replica, so that the pod is replaced automatically if it fails.

Deployments

A Deployment represents a set of identical pods with no individual identities, managed by a deployment controller. The deployment controller runs multiple replicas of an application as specified in a ReplicaSet. If any pods fail or become unresponsive, the deployment controller replaces them until the actual state equals the desired state.

Ingress Controllers

Ingress Controllers are essential components in Kubernetes for managing external access to services within a cluster. While Services can expose pods internally or externally, an Ingress resource defines advanced routing rules for HTTP and HTTPS traffic, and an Ingress Controller applies them. Together, they enable functionalities like SSL termination, name-based virtual hosting, and load balancing, allowing you to consolidate your routing rules into a single resource.
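Name-based virtual hosting and path routing can be pictured as a lookup from a host and a path to a backend service. The sketch below is only an illustration: the host names, service names, and the `route` function are invented, and the plain string-prefix comparison is a simplification of the path matching that real Ingress Controllers perform.

```python
# (host, path prefix, backend service): all values are hypothetical.
RULES = [
    ("shop.example.com", "/api", "shop-api"),
    ("shop.example.com", "/", "shop-frontend"),
    ("blog.example.com", "/", "blog"),
]


def route(host, path):
    """Return the service with the longest matching prefix for this host."""
    candidates = [
        (prefix, service)
        for rule_host, prefix, service in RULES
        if rule_host == host and path.startswith(prefix)
    ]
    if not candidates:
        return None  # no rule matches this request
    return max(candidates, key=lambda c: len(c[0]))[1]


print(route("shop.example.com", "/api/orders"))
print(route("shop.example.com", "/cart"))
print(route("blog.example.com", "/posts/1"))
```

A single entry point can therefore serve several applications, with the routing table kept in one place.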

StatefulSets

A StatefulSet manages pods like the deployment controller but maintains a sticky identity for each pod. Pods are created from the same specification but are not interchangeable.

The operating pattern of a StatefulSet is the same as for any other controller. The StatefulSet controller maintains the desired state, defined in a StatefulSet object, by making the necessary updates to go from the actual state of a cluster to the desired state.

The unique, number-based name of each pod in the StatefulSet persists, even if the pod is rescheduled to another node.
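Pods in a StatefulSet are named after the StatefulSet followed by an ordinal index, starting at 0 by default. The short sketch below shows that naming scheme and the fact that the name is independent of the node; the StatefulSet name `db`, the node names, and the helper function are assumptions for the example.

```python
def statefulset_pod_names(name, replicas):
    """Build the stable pod names <name>-0 ... <name>-(replicas - 1)."""
    return [f"{name}-{ordinal}" for ordinal in range(replicas)]


names = statefulset_pod_names("db", 3)
print(names)

# Hypothetical placement of each pod on a node.
placement = {"db-0": "node-a", "db-1": "node-b", "db-2": "node-c"}

# node-b fails: db-1 is recreated elsewhere but keeps its name.
placement["db-1"] = "node-c"
print(placement)
```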

DaemonSets

Another type of pod controller is called DaemonSet. It ensures that all (or some) nodes run a copy of a pod. For most use cases, it does not matter where pods are running, but in some cases, a copy of a pod must run on every node. This is useful for aggregating log files, collecting metrics, or running a network storage cluster.
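The "one copy per node" rule can be sketched as a comparison between the eligible nodes and the nodes that already run the daemon pod. This is a simplified model: the function `missing_daemon_pods`, the node labels, and the optional `node_selector` argument are hypothetical names used to illustrate the "all (or some) nodes" behavior.

```python
def missing_daemon_pods(nodes, running_on, node_selector=None):
    """Return the eligible nodes that do not yet run a copy of the pod."""
    node_selector = node_selector or {}
    eligible = [
        n["name"] for n in nodes
        if all(n["labels"].get(k) == v for k, v in node_selector.items())
    ]
    return [name for name in eligible if name not in running_on]


nodes = [
    {"name": "node-a", "labels": {"disk": "ssd"}},
    {"name": "node-b", "labels": {"disk": "hdd"}},
    {"name": "node-c", "labels": {"disk": "ssd"}},
]
running_on = {"node-a"}

# Without a selector every node needs a copy; with one, only SSD nodes do.
print(missing_daemon_pods(nodes, running_on))
print(missing_daemon_pods(nodes, running_on, {"disk": "ssd"}))
```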

Jobs and CronJobs

Jobs manage a task until it runs to completion. They can run multiple pods in parallel and are useful for batch-oriented tasks. CronJobs in Kubernetes work like traditional cron jobs on Linux. They run Jobs at a specific time or interval and are useful for recurring tasks such as backups or cleanup.
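As an illustration, the following sketch builds a CronJob manifest as a Python dictionary. The structure follows the `batch/v1` CronJob API, where the schedule uses the standard five-field cron syntax; the name `nightly-backup`, the `busybox:1.36` image, and the placeholder command are assumptions for this example.

```python
import json

cron_job = {
    "apiVersion": "batch/v1",
    "kind": "CronJob",
    "metadata": {"name": "nightly-backup"},
    "spec": {
        # minute hour day-of-month month day-of-week: every day at 03:00
        "schedule": "0 3 * * *",
        # Each run creates a Job from this template.
        "jobTemplate": {
            "spec": {
                "template": {
                    "spec": {
                        "restartPolicy": "OnFailure",
                        "containers": [
                            {
                                "name": "backup",
                                "image": "busybox:1.36",
                                "command": ["sh", "-c", "echo running backup"],
                            }
                        ],
                    }
                }
            }
        },
    },
}

print(json.dumps(cron_job, indent=2))
```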

Volumes

A volume is a directory that is accessible to containers in a pod. Kubernetes uses its own volumes’ abstraction, allowing data to be shared by all containers and remain available until the pod is terminated.

A Kubernetes volume has an explicit lifetime - the same as the pod that encloses it. This means data in such a volume is destroyed when the pod ceases to exist, so volumes of this kind are not a good solution for storing persistent data.
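A common way to share data between the containers of a pod is an `emptyDir` volume, which is created with the pod and removed with it. The sketch below builds such a pod manifest as a Python dictionary; the pod name, container names, mount paths, and shell commands are placeholders chosen for illustration.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "shared-logs"},
    "spec": {
        # The volume lives exactly as long as the pod.
        "volumes": [{"name": "logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "writer",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "while true; do date >> /data/out.log; sleep 5; done"],
                "volumeMounts": [{"name": "logs", "mountPath": "/data"}],
            },
            {
                "name": "reader",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "touch /logs/out.log; tail -f /logs/out.log"],
                # Same volume, mounted at a different path.
                "volumeMounts": [{"name": "logs", "mountPath": "/logs"}],
            },
        ],
    },
}

print(json.dumps(pod, indent=2))
```

Both containers see the same files, but everything written to the volume is lost once the pod is deleted.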

Persistent volumes

Persistent volumes allow configuring storage systems for a cluster independent of the life cycle of a pod, avoiding the constraints of the volume life cycle being tied to the pod life cycle. Data in a persistent volume survives the termination of the pods that use it. Once the volume is no longer claimed, its reclaim policy determines whether it is kept until it is deleted manually or deleted automatically.
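The effect of the reclaim policy can be sketched as a small decision function. This is an illustrative model only: `persistentVolumeReclaimPolicy` is the field name used in the PersistentVolume spec, and `Retain` and `Delete` are two of its values, while the function `on_release`, the volume names, and the returned descriptions are assumptions made for this example.

```python
def on_release(volume):
    """Describe what happens to a volume once it is no longer claimed."""
    policy = volume["persistentVolumeReclaimPolicy"]
    if policy == "Retain":
        return "kept until it is deleted manually"
    if policy == "Delete":
        return "deleted automatically"
    raise ValueError(f"policy not covered by this sketch: {policy}")


archive = {"name": "pv-archive", "persistentVolumeReclaimPolicy": "Retain"}
scratch = {"name": "pv-scratch", "persistentVolumeReclaimPolicy": "Delete"}

print(archive["name"], "is", on_release(archive))
print(scratch["name"], "is", on_release(scratch))
```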

In Conclusion

In this blog post, you’ve gained an introductory understanding of Kubernetes, its core components, and how they work together.

While Kubernetes offers powerful capabilities, it can be complex to manage. Managed services like Scaleway’s Kubernetes Kapsule allow smaller teams to focus on developing and deploying applications, while Scaleway handles the underlying infrastructure.

With its extensive feature set, Kubernetes is a solution of choice for a broad range of projects.