Graduating from Docker to Kubernetes

Find out how to switch from Docker to Kubernetes for an easier way to create and manage your containers. One of Scaleway’s Solution Architects will walk you through the process.

You’ve heard of Kubernetes and Docker, two platforms that have changed the game when it comes to software deployment. But beyond the buzz, do you understand the difference between them, and how they can complement each other to provide an optimal platform for cloud-based applications? Read on to find out more.

First, let’s address the fundamental question: what is a container? A container is a portable, lightweight package of software that bundles its own environment and dependencies in one handy unit. Because the application code is packaged together with its runtime, configuration, and libraries, a container can run independently on any host system that has a compatible container runtime. The pain of installing multiple dependencies before you can even run the application magically disappears.

Docker is an open-source platform for packaging applications into containers. It provides the “magic” to turn an application and its dependencies into a single container, or more precisely into the image from which a container can be launched.

Not only does Docker let you create container images, but it also provides extra functionality for managing the container lifecycle. There’s Docker Hub, a registry for storing and sharing images, Docker Compose for managing multi-container applications, plus other features for the security, storage, and networking of containers.

Docker isn’t the only containerization platform: alternatives include Podman and LXC. But Docker is the market leader, and it’s the platform we’ll be focusing on in this article.

If Docker is the go-to platform for creating and managing containers, then Kubernetes is the go-to platform for orchestrating them. Like Docker, Kubernetes is open source. Initially developed by Google, it is now managed and maintained by the Cloud Native Computing Foundation (CNCF).

But what do we mean by orchestrating a container, and why do we need a platform to do that? Keep reading to find out.

Running just one container on one machine is no big deal. But in a production environment, things often don’t look like that. We’re dealing with multiple containers needing to work together to perform different functions within an application. These containers aren’t running on a single machine, but across multiple virtual or physical machines, and we want to scale these resources as needed to deal with fluctuating demand. On top of this, we need to monitor the containers’ health and security to ensure the application is always running.

This is where container orchestration comes in. Container orchestration is the automated management of containers at scale, dealing with the deployment, scaling, and operation of containerized applications across clusters of machines.

If you’ve been following so far, you might have started to understand that Kubernetes and Docker serve two different purposes. Essentially, Docker lets you create and run containers, and Kubernetes orchestrates them at scale. The two platforms complement each other and are very frequently used together.

Let’s look in more detail at how Kubernetes and Docker are used and the purposes they serve. Since we’re talking about orchestration here, we can use that as a metaphor to better understand what’s going on.

Building a containerized image of your application with Docker is like writing the sheet music for a musical performance. This sheet music is the specification that defines what the piece should sound like and how it should be played - every time. Similarly, when you build a container image with Docker, you are creating a blueprint for the container that will be launched with this image.

As a developer, you create a container image by first developing your application, then using Docker to containerize it. Docker Engine provides the tooling for just this: the Docker daemon (dockerd), which builds and manages images and runs containers, plus the Docker API and the docker CLI, which simplify interactions with the daemon.

You start by writing a Dockerfile containing the instructions for building the image. The Dockerfile points to the application itself, along with specifications for everything the application needs to run in a container. Then you use the docker build command to build the image from the Dockerfile, and voilà, it’s created, just like sheet music! We’re ready to play some tunes.
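
As a minimal sketch of those two steps, the Python script below writes a build context containing a simple Dockerfile, then assembles the matching `docker build` command. It assumes a hypothetical Python service in `app.py` with dependencies listed in `requirements.txt`; the base image `python:3.12-slim` and the tag `shop/catalog:1.0` are illustrative choices, not anything Docker prescribes. By default it only prints the command, so it runs without a Docker daemon.

```python
import subprocess
import tempfile
from pathlib import Path

# A minimal Dockerfile for a hypothetical Python service.
# Each instruction is one step of the image build.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY app.py .
CMD ["python", "app.py"]
"""


def write_build_context(directory: Path) -> Path:
    """Write the Dockerfile and placeholder application files into `directory`."""
    directory.mkdir(parents=True, exist_ok=True)
    (directory / "Dockerfile").write_text(DOCKERFILE)
    # Hypothetical application files standing in for your real code.
    (directory / "requirements.txt").write_text("# one dependency per line\n")
    (directory / "app.py").write_text('print("catalog service running")\n')
    return directory


def build_image(tag: str, context: Path, dry_run: bool = True) -> list[str]:
    """Assemble `docker build -t TAG CONTEXT`; execute it only when dry_run is False."""
    cmd = ["docker", "build", "-t", tag, str(context)]
    if not dry_run:
        # Requires a running Docker daemon.
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    context = write_build_context(Path(tempfile.mkdtemp()) / "catalog")
    print(" ".join(build_image("shop/catalog:1.0", context)))
```

Passing `dry_run=False` hands the same argument list to the real CLI, which keeps the printed command and the executed one identical.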

Let’s imagine we just want to play our sheet music ourselves, alone in our room, as a solo. No problem: we can play a solo without any additional help. We don’t need a conductor, so let’s just get on with it.

Similarly, if we want to run our container image on our local machine as an isolated instance of our containerized application, we can do that with Docker alone; we don’t need Kubernetes to orchestrate anything. Commands like docker run, docker stop, docker start, docker exec, and docker logs let us launch a container, control its lifecycle, interact with it, and view its logs. So if we just want to play a solo, or run a simple instance of our containerized application, Docker is all we need.
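
To see how these lifecycle commands fit together, here is a thin Python wrapper around the Docker CLI. It is a minimal sketch assuming a hypothetical image tagged `shop/catalog:1.0` and a container named `catalog`; it also covers `docker logs` for viewing output. With the default `dry_run=True` it only records the commands, and setting it to `False` executes them, which requires a running Docker daemon.

```python
import subprocess


class DockerContainer:
    """Assemble (and optionally execute) Docker CLI lifecycle commands for one container."""

    def __init__(self, name: str, image: str, dry_run: bool = True):
        self.name = name
        self.image = image
        self.dry_run = dry_run
        self.history: list[list[str]] = []  # every command issued, in order

    def _docker(self, *args: str) -> list[str]:
        cmd = ["docker", *args]
        self.history.append(cmd)
        if not self.dry_run:
            # Requires a running Docker daemon.
            subprocess.run(cmd, check=True)
        return cmd

    def run(self, host_port: int, container_port: int) -> list[str]:
        # Launch detached (-d) and publish the container port on the host (-p).
        return self._docker("run", "-d", "--name", self.name,
                            "-p", f"{host_port}:{container_port}", self.image)

    def stop(self) -> list[str]:
        return self._docker("stop", self.name)

    def start(self) -> list[str]:
        # Start a stopped container again, keeping its name and configuration.
        return self._docker("start", self.name)

    def exec_command(self, *command: str) -> list[str]:
        # Run an extra command inside the running container.
        return self._docker("exec", self.name, *command)

    def logs(self) -> list[str]:
        return self._docker("logs", self.name)


if __name__ == "__main__":
    catalog = DockerContainer("catalog", "shop/catalog:1.0")
    catalog.run(8080, 8080)
    catalog.exec_command("ls", "/app")
    catalog.logs()
    catalog.stop()
    catalog.start()
    for cmd in catalog.history:
        print(" ".join(cmd))
```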

What if we want to move beyond playing solos? What if now, we want to play with an orchestra? That’s where Kubernetes comes in.

If a large group of musicians tries to play together without a conductor, they’ll run into problems. Keeping time, changing tempo, and coordinating dynamics - all must be managed by a leader or conductor who coordinates all musicians to work together to create harmony. And in the case of multiple containers trying to work together to run a large-scale application, their conductor is Kubernetes.

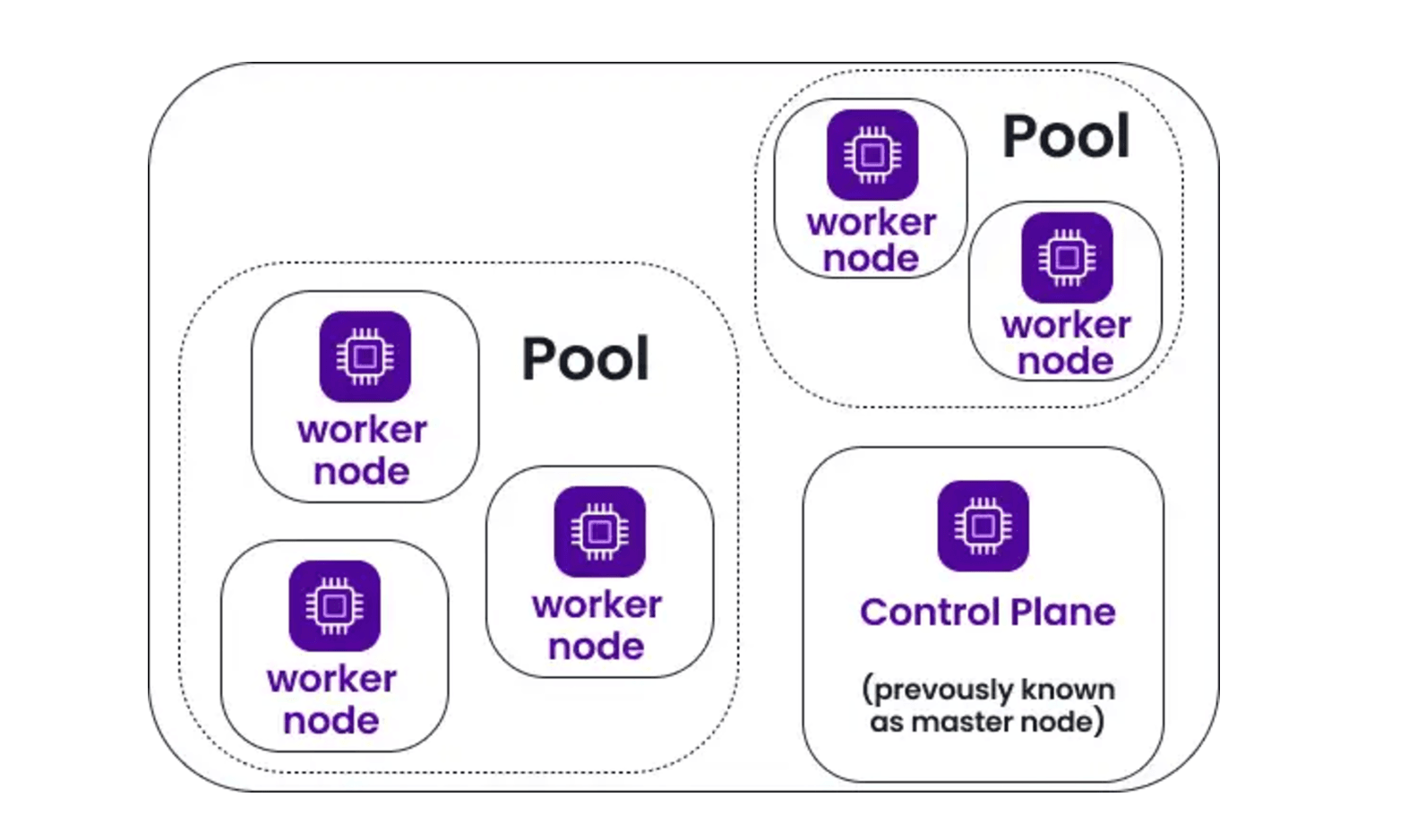

When we run Kubernetes, we are in fact running a Kubernetes cluster: a set of machines, called nodes, that run containerized applications. A cluster has several worker nodes, each running pods. A pod is the smallest deployable unit in Kubernetes: a group of one or more containers that work closely together and share network and storage resources. Worker nodes are grouped into pools, where all nodes in the pool share the same configuration. Finally, the control plane (the brain of the cluster) manages all the worker nodes and their pods.
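
To make the hierarchy concrete, here is a toy Python model of it: a cluster holds pools, each pool holds nodes that share one configuration, and each node runs pods made of one or more containers. The class names, the `node_type` field standing in for a pool's shared configuration, and all example names (including the `log-agent` helper container) are hypothetical; this is a sketch of the structure, not of any Kubernetes API.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    image: str


@dataclass
class Pod:
    # One or more tightly coupled containers, scheduled together on one node.
    name: str
    containers: list[Container]


@dataclass
class Node:
    # A worker machine that runs pods.
    name: str
    pods: list[Pod] = field(default_factory=list)


@dataclass
class Pool:
    # Every node in a pool shares the same configuration (here, one node type).
    name: str
    node_type: str
    nodes: list[Node] = field(default_factory=list)


@dataclass
class Cluster:
    name: str
    pools: list[Pool] = field(default_factory=list)

    def all_pods(self) -> list[Pod]:
        return [pod for pool in self.pools for node in pool.nodes for pod in node.pods]

    def container_count(self) -> int:
        return sum(len(pod.containers) for pod in self.all_pods())


if __name__ == "__main__":
    catalog_pod = Pod("catalog-1", [Container("catalog", "shop/catalog:1.0"),
                                    Container("log-agent", "example/log-agent:1.0")])
    payment_pod = Pod("payment-1", [Container("payment", "shop/payment:1.0")])
    pool = Pool("default", "standard-4cpu",
                [Node("node-1", [catalog_pod]), Node("node-2", [payment_pod])])
    cluster = Cluster("shop", [pool])
    print(len(cluster.all_pods()), "pods,", cluster.container_count(), "containers")
```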

The control plane orchestrates all the containers and resources to ensure the cluster is always running and healthy.

Kubernetes manages the start-up, shut-down, and restarts of containers, the same way a conductor controls when musicians start playing, stop playing, or repeat a section.

Kubernetes scales different parts of the application by adjusting the number of pod replicas and their associated resources based on demand, just as a conductor adjusts the dynamics of different instruments to be louder or quieter as needed.

Kubernetes monitors containers’ health, and if one fails, it automatically replaces it with a new one. Similarly, if a conductor sees that a musician is having trouble playing, they can signal for someone else to cover.
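
All three behaviors rest on one idea: Kubernetes controllers repeatedly compare the desired state you declare (for example, "three replicas of this service") with what is actually running, and act to close the gap. The toy Python loop below sketches that reconciliation idea under simplifying assumptions: a boolean `healthy` flag stands in for real health checks, and the pod names are hypothetical. Real controllers are considerably more involved.

```python
import itertools
from dataclasses import dataclass


@dataclass
class PodState:
    name: str
    healthy: bool = True


class ReplicaController:
    """Toy controller that keeps `desired_replicas` healthy pods of one app running."""

    def __init__(self, app: str, desired_replicas: int):
        self.app = app
        self.desired_replicas = desired_replicas
        self.pods: list[PodState] = []
        self._ids = itertools.count(1)  # hypothetical naming: app-1, app-2, ...

    def reconcile(self) -> list[str]:
        """Compare observed pods with the desired count and return the actions taken."""
        actions = []
        # Self-healing: discard failed pods so they get replaced below.
        for pod in self.pods:
            if not pod.healthy:
                actions.append(f"remove failed {pod.name}")
        self.pods = [pod for pod in self.pods if pod.healthy]
        # Scale down: stop surplus pods, newest first.
        while len(self.pods) > self.desired_replicas:
            actions.append(f"stop {self.pods.pop().name}")
        # Scale up (or replace failures): start pods until the count matches.
        while len(self.pods) < self.desired_replicas:
            pod = PodState(f"{self.app}-{next(self._ids)}")
            self.pods.append(pod)
            actions.append(f"start {pod.name}")
        return actions


if __name__ == "__main__":
    controller = ReplicaController("catalog", desired_replicas=3)
    print(controller.reconcile())  # initial start-up
    controller.pods[1].healthy = False
    print(controller.reconcile())  # a pod failed: replace it
    controller.desired_replicas = 2
    print(controller.reconcile())  # demand dropped: scale down
```

Because the controller only ever compares "desired" with "observed", the same loop handles first start-up, failure recovery, and scaling; no separate code path is needed for each.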

Docker is ideal for packaging applications into containers and, beyond that, for running isolated container instances in simple test environments. Docker Compose lets you manage multiple containers on a single host, and Docker Swarm provides some limited functionality for basic multi-host orchestration, but Docker on its own is generally not well suited to large-scale production environments.

This is where you need to bring in Kubernetes. Use Kubernetes to manage the deployment, scaling, fault tolerance, and failure recovery of containerized applications across clusters of multiple machines. While Docker offers some basic features for networking, scaling, storage, and deployment, Kubernetes goes far beyond these capabilities and adds rich features for load balancing, self-healing, security, and monitoring.
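
As one example of what Kubernetes scaling looks like in practice, the Horizontal Pod Autoscaler derives a target replica count from an observed metric, roughly `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, and skips scaling when that ratio is already close to 1. The function below is a simplified Python sketch of that rule. It assumes a single metric (such as average CPU utilization), a 0.1 tolerance (the documented default), and illustrative min/max bounds, and it ignores details such as stabilization windows and pods that are not yet ready.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified Horizontal Pod Autoscaler rule for a single metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current count to avoid flapping.
        proposed = current_replicas
    else:
        proposed = math.ceil(current_replicas * ratio)
    # Never go below or above the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

For instance, four replicas running at 90% CPU against a 60% target give a ratio of 1.5, so the sketch proposes six replicas.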

So should you use Docker, Kubernetes, or both? That depends on where you’re at in the development cycle, and on the scale of what you’re trying to achieve.

Kubernetes and Docker lend themselves perfectly to microservices architectures, where applications are structured as multiple independent services loosely coupled to work together. Docker lets you package each microservice as a separate container, and Kubernetes streamlines the deployment of these multiple containers at scale.

Imagine an e-commerce platform with separate microservices for the product catalog, the client database, and the payment system. These microservices communicate with each other asynchronously to exchange information as needed. Deploying this infrastructure in the cloud, where resources can be provisioned and scaled at will depending on load and demand, is a natural fit.
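
Asynchronous communication like this usually goes through a message queue that sits between services. As an in-process stand-in, the sketch below uses Python's `asyncio.Queue`: a hypothetical catalog service publishes purchase requests without waiting on payment, and a hypothetical payment service consumes them at its own pace. In a real deployment each service would run in its own container and the queue would be an external broker; the message format and the `None` end-of-stream sentinel are assumptions of this example.

```python
import asyncio


async def catalog_service(queue: asyncio.Queue, orders: list[dict]) -> None:
    # Publish each purchase request and move on without waiting for payment.
    for order in orders:
        await queue.put(order)
    await queue.put(None)  # sentinel: no more orders


async def payment_service(queue: asyncio.Queue, processed: list[str]) -> None:
    # Consume requests whenever they arrive, until the sentinel shows up.
    while (order := await queue.get()) is not None:
        processed.append(f"charged {order['client']} {order['amount']:.2f} EUR")


async def main(orders: list[dict]) -> list[str]:
    queue: asyncio.Queue = asyncio.Queue(maxsize=10)  # bounded, like a real broker's backpressure
    processed: list[str] = []
    await asyncio.gather(catalog_service(queue, orders), payment_service(queue, processed))
    return processed


if __name__ == "__main__":
    results = asyncio.run(main([{"client": "alice", "amount": 19.9},
                                {"client": "bob", "amount": 5}]))
    for line in results:
        print(line)
```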

Scaleway’s Kubernetes Kapsule lets you deploy a containerized infrastructure with ease. With a managed Kubernetes cluster offering customizable resources and scaling capacities, we take the pain out of Kubernetes setup. Indeed, the time and complexity involved in getting everything working with Kubernetes is one of the technology’s main downsides, but with Scaleway’s managed Kubernetes that complexity is vastly reduced. What’s more, our Kubernetes Kosmos lets you run your cluster across resources from multiple cloud providers, should you wish.

Once you’ve created your cluster, you can use the kubectl command line tool to interact with it and get your application up and running.
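
As an illustration of that step, the Python sketch below builds a minimal Kubernetes Deployment manifest (`apps/v1`) for a hypothetical `catalog` image, writes it as JSON (kubectl accepts JSON as well as YAML manifests), and prints the kubectl commands that would submit it and list the resulting pods. The registry URL, labels, port, and replica count are placeholders for this example; in practice the image would point at your own registry.

```python
import json
import tempfile
from pathlib import Path


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Return a minimal apps/v1 Deployment as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels of the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


def write_manifest(manifest: dict, directory: Path) -> Path:
    """Serialize the manifest to <name>-deployment.json inside `directory`."""
    path = directory / f"{manifest['metadata']['name']}-deployment.json"
    path.write_text(json.dumps(manifest, indent=2))
    return path


if __name__ == "__main__":
    manifest = deployment_manifest(
        "catalog", "registry.example.com/shop/catalog:1.0", replicas=3, port=8080
    )
    path = write_manifest(manifest, Path(tempfile.mkdtemp()))
    # Submit the manifest, then list the pods the Deployment creates.
    print(f"kubectl apply -f {path}")
    print("kubectl get pods -l app=catalog")
```

Declaring `replicas: 3` rather than starting three containers by hand is what lets the control plane keep that count true over time.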

Other Scaleway products can also support your container management needs, such as Container Registry for storing and managing container images, Queues for asynchronous communication between microservices, and Load Balancer and Block Storage, which can be provisioned from within your Kubernetes cluster to provide network access and data storage.

So now we know: Docker and Kubernetes are at their best together, used at different stages of the containerization lifecycle. Create and test your container images with Docker, and then orchestrate them at scale with Kubernetes, and you’ll unlock the full power of stable, reliable, scalable, self-healing cloud-based applications.
