ReRAM Revival

ReRAM, or Resistive Random-Access Memory (also written RRAM), is a new kind of computer memory that promises non-volatile RAM with a capacity on the order of that of Solid State Drives (SSDs), while being faster and more energy efficient. According to a report from the EU Horizon 2020 REMINDER project, such memory can run 1000x faster while using 1000x less energy per byte read than current drive technologies such as NAND Flash.

Today we will have a look at ReRAM history, how it works, why it almost came into existence a decade ago and why it’s coming back thanks to the AI boom.

Memory hierarchy

So far, most computers have relied on the Von Neumann architecture, where there is a split between RAM and storage. RAM is faster, smaller, closer to the CPU and volatile; storage, on the other hand, is orders of magnitude slower but much bigger, can be accessed externally and is non-volatile. For more details about storage vs. RAM see this nice post by Backblaze.

| Type | Size | Access time (CPU cycles) |
|---|---|---|
| CPU register | 4 - 64 B | 0 |
| L1 cache | 16 - 64 KB | 1 - 4 |
| L2 cache | 128 KB - 8 MB | 5 - 20 |
| L3 cache | 1 - 32 MB | 40 - 100 |
| DRAM | Tens of GBs | 100 - 400 |
| SSD | Hundreds of GBs | 10,000 - 1,000,000 |
| HDD | Terabytes | 10,000,000+ |

Typical size and access time for the different types of memory. (source: https://homepages.cwi.nl/~boncz/msc/2018-BoudewijnBraams.pdf)

Those starkly different characteristics mean that using each kind of memory requires a totally different strategy. Accordingly, operating systems and software have been built to work around this gap.

This is a fundamental tenet of how computers work and this hasn't been challenged in decades.

Let's consider two cases:

- Say you need to search for a bit of data in a large file that is too big to fit in RAM. Then the operating system has to fetch blocks of the file from storage and put them in memory so that the software can look through them. The OS sequentially fetches data and discards it from memory once it's no longer needed.

- On the other hand, if the file is small enough to fit inside the memory, the software can look through it at once. But the back and forth between 1. reading the storage, 2. putting the content in RAM, and 3. discarding the no longer required data drastically reduces the speed of this simple operation.
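The two cases above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `find_in_chunks` and `find_in_memory` and the `chunk_size` parameter are hypothetical, and a real operating system or database engine does far more (caching, read-ahead, memory mapping).

```python
def find_in_chunks(path, needle, chunk_size=4096):
    """Return the byte offset of the first occurrence of `needle`, or -1.

    Case 1: the file is read block by block, so only about `chunk_size`
    bytes (plus a small overlap) are held in memory at any time.
    """
    if not needle:
        return 0
    overlap = len(needle) - 1  # bytes kept so a match can straddle two blocks
    tail = b""
    offset = 0  # file offset of the first byte of `tail + chunk`
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_size)  # fetch the next block from storage
            if not chunk:
                return -1
            window = tail + chunk
            pos = window.find(needle)
            if pos != -1:
                return offset + pos
            # Discard what is no longer needed, keep only the overlap.
            keep = window[-overlap:] if overlap else b""
            offset += len(window) - len(keep)
            tail = keep


def find_in_memory(path, needle):
    """Case 2: the file fits in RAM, so it is loaded once and searched at once."""
    with open(path, "rb") as f:
        return f.read().find(needle)
```

Both functions return the same offset; the difference is how much of the file sits in RAM at any given time and how many separate reads from storage are needed.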

To reduce the impact on performance, operating systems and software like database servers have been optimized to work around this. For example, Oracle DB offered the possibility to use a raw block device to bypass the file system and do its own optimization for precise data layout on the disk.

Memristors

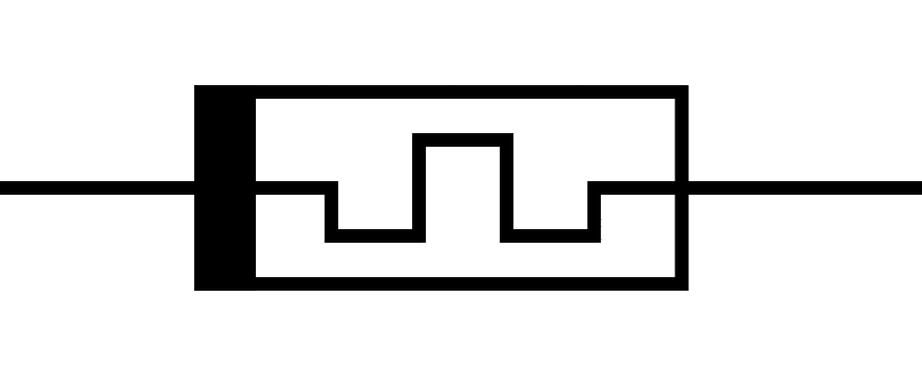

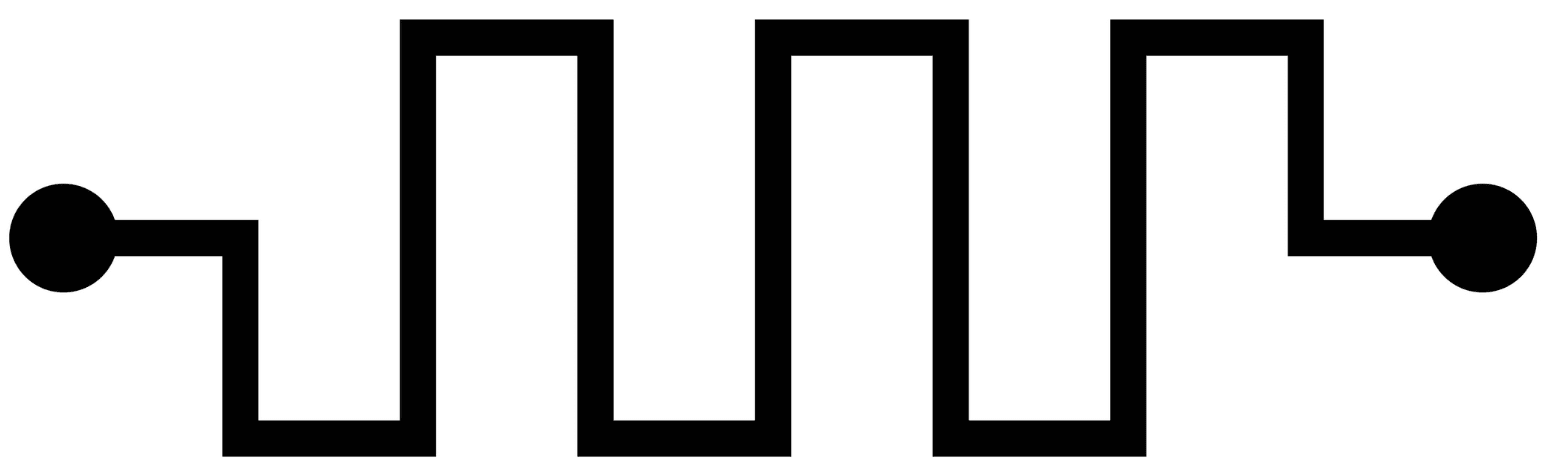

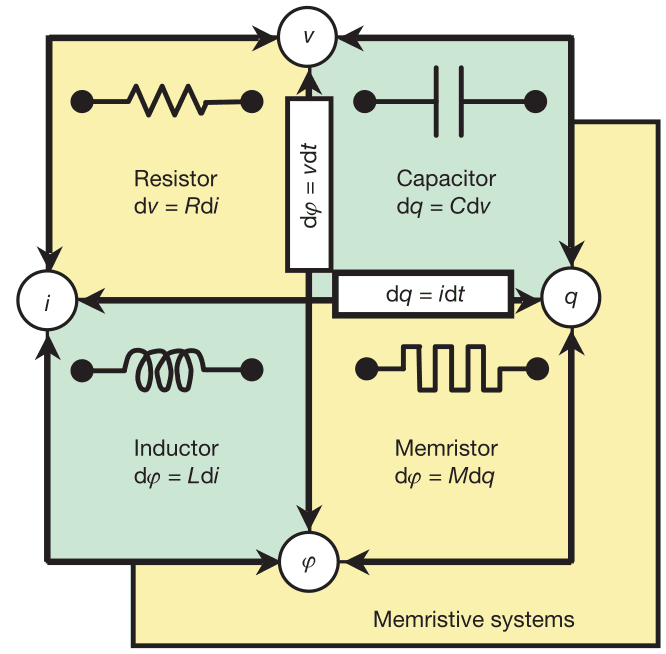

Memristors were considered to be the missing fundamental two-terminal passive electrical component. While the three well-known fundamental components (resistor, inductor and capacitor) have constant relationships between physical quantities (current, voltage, magnetic flux…), the so-called memristor displays a memory effect: its resistance depends on the amount of charge that has already passed through it.

Memristor symbols: with polarity and non-polarized.

This property of having the resistance change according to the current that has passed through makes the memristor an ideal building block for non-volatile random access memory (NVRAM). Typically for this use case, two resistance states are used: the Low Resistance State (LRS) and the High Resistance State (HRS), one encoding logic 0 and the other logic 1.
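As a rough illustration of how two resistance states can be read back as a bit, here is a minimal sketch. All numeric values (`R_LRS`, `R_HRS`, `V_READ`) are hypothetical, and the mapping of LRS to 0 and HRS to 1 is an arbitrary convention chosen for this example, not something a given ReRAM product is known to use.

```python
R_LRS = 1e3    # hypothetical Low Resistance State, in ohms
R_HRS = 1e5    # hypothetical High Resistance State, in ohms
V_READ = 0.1   # hypothetical read voltage, in volts (small, to avoid disturbing the cell)

# Decision threshold: the current that would flow through a resistance
# halfway (geometrically) between the two states.
I_THRESHOLD = V_READ / (R_LRS * R_HRS) ** 0.5


def read_bit(resistance):
    """Apply the read voltage and classify the resulting current.

    Assumed convention for this sketch: LRS (high current) -> 0,
    HRS (low current) -> 1.
    """
    current = V_READ / resistance  # Ohm's law
    return 0 if current > I_THRESHOLD else 1
```

Because the bit is stored in the resistance of the cell itself, nothing in this read-out depends on the cell having been powered since it was written.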

Usual RAM technologies require power to retain information. Power must be supplied either permanently for Static RAM (SRAM), or periodically to refresh the content of Dynamic RAM (DRAM). Removing this power requirement for data retention makes the memory content persistent (non-volatile) and also reduces energy consumption. In addition, the simpler design of the memory cells makes the required circuitry smaller, leading to 10x higher density compared to current technologies.

The Memristor - the 4th electronic component

In electronics, there are three fundamental linear passive two-terminal components:

- Resistors relate voltage and current. The voltage across the resistor is proportional to the current passing through it, with a constant of proportionality R called the resistance (voltage = resistance x current). A resistor dissipates energy in the form of heat.

- Capacitors relate charge and voltage. The voltage across the terminals is proportional to the charge stored in the capacitor, through the inverse of the capacitance C (charge = capacitance x voltage). Also, in a capacitor the instantaneous current is equal to the rate of change of the charge (current = variation of charge / variation of time). An ideal capacitor is a simple lossless charge accumulator, and thus dissipates no power.

- Inductors relate magnetic flux to current. The current through the inductor generates a magnetic field whose flux is proportional to the current and the inductance L (flux = current x inductance). An ideal inductor is lossless and dissipates no energy.

The theoretical component that could relate charge to magnetic flux was called a “memristor”, named for the first time in September 1971 by Leon O. Chua in his paper Memristor - The Missing Circuit Element. The name is a mix of the words memory and resistor because a memristor would have the special property of having its resistance change depending on the current (i.e. variation of charge) that previously passed through.

It is worth noting that, in contrast to the resistor, capacitor or inductor, there is no single fixed relationship for a memristor. More precisely, the resistance (R), capacitance (C) and inductance (L) are constants, but the memristance (M) is variable and depends on the charge. If M is constant, it can be shown that the memristor reduces to a resistor.
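To make the dependence on charge concrete, the sketch below simulates a charge-controlled memristor with voltage = M(charge) x current. The function `memristance` is a hypothetical toy model (a linear interpolation between two arbitrary resistances), not the model from Chua's paper or from HP's device; only the general idea that M depends on the accumulated charge comes from the text.

```python
def memristance(q, r_on=100.0, r_off=16000.0, q_max=1e-4):
    """Toy memristance M(q) in ohms (hypothetical model and values).

    The resistance slides linearly from r_off to r_on as the charge q
    that has passed through the device goes from 0 to q_max.
    """
    x = min(max(q / q_max, 0.0), 1.0)  # clamp the state between 0 and 1
    return r_off - (r_off - r_on) * x


def simulate(current, dt, m=memristance, q0=0.0):
    """Return the voltage samples v = M(q) * i for a list of current samples.

    The charge q is the running integral of the current (q += i * dt),
    which is where the memory effect comes from.
    """
    q = q0
    voltages = []
    for i in current:
        voltages.append(m(q) * i)
        q += i * dt
    return voltages
```

For instance, `simulate([1e-3] * 3, dt=1e-2)` yields a decreasing voltage for the same current, because charge accumulates and lowers M. Passing a constant function such as `m=lambda q: 50.0` gives back plain Ohm's law, which is the resistor case mentioned above.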

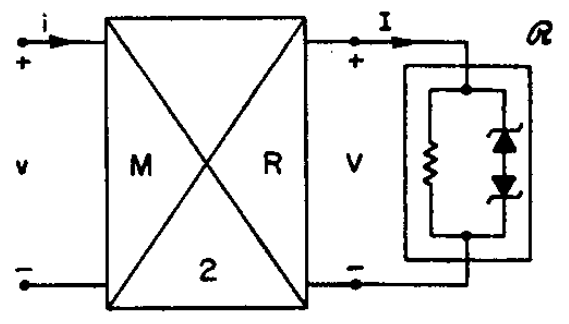

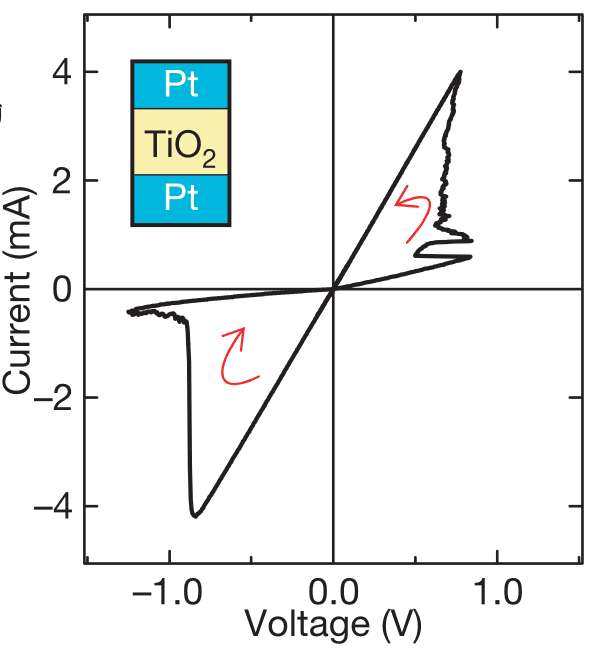

When it was theorized, there was no practical implementation of such a passive component. In his paper, Chua proposed active implementations (electronic circuits that require external power), but the first passive implementation of a memristor was only proposed in 2008 by a research team at HP Laboratories. More precisely, they showed how results from past experiments exhibiting hysteresis (and thus memory) could be interpreted as memristive behavior.

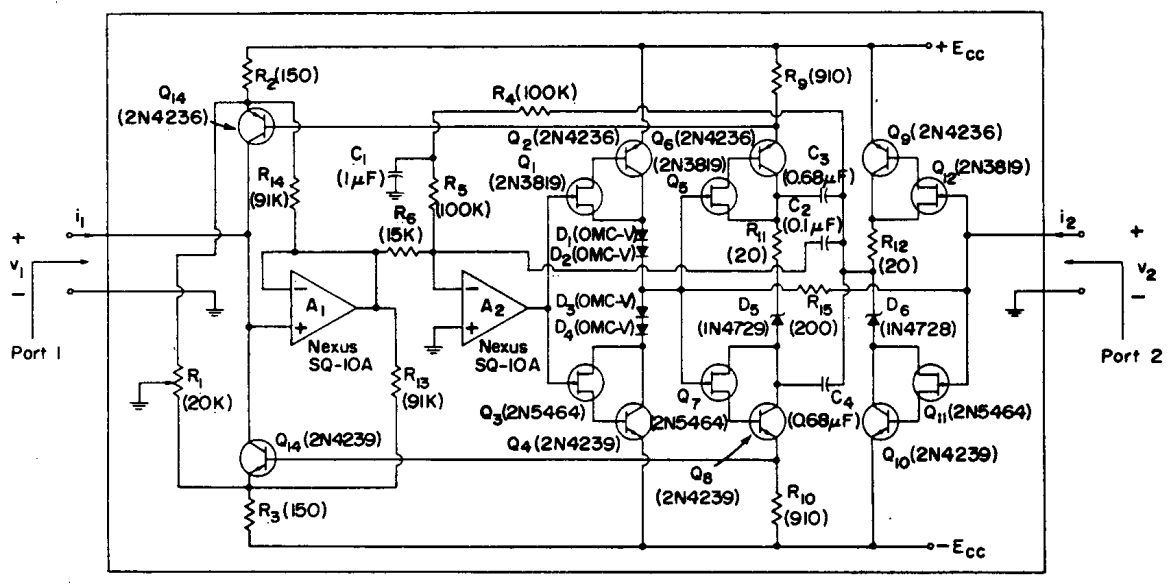

An implementation of the “M-R” box, as proposed in the initial paper.

Hewlett-Packard's 'The Machine'

In 2014, six years after developing the first working memristor, HP Labs, HP’s research group, announced the launch of a project codenamed "The Machine": a big undertaking to create a computer that upends the RAM/storage paradigm by using a new type of memory, based on memristors, that combines the advantages of RAM and disks by being fast, big and persistent. Having RAM the size of current local storage, and storage with the latency and bandwidth of RAM, meant that a unified memory architecture could be developed.

In such a paradigm, a database that previously required many reads from disk, with the latency penalty they incur, could now directly access its whole content from memory, making it better suited to processing huge amounts of data.

Unfortunately, “The Machine” didn't take off. A combination of HP's restructuring, with the company being split into two separate companies, and the difficulty of achieving a good yield for the ReRAM chips led to the abandonment of the project.

AI applications

Although memristor-based computers have not happened so far, a new opportunity for ReRAM has appeared: Machine Learning. The size of machine learning models is steadily growing and having a lot of data closer to the CPU could make inference faster and more energy efficient.

The current Von Neumann architecture is not always well suited to AI computing: the split between RAM and storage introduces a performance bottleneck. Having to move data from storage to RAM for every operation is costly in terms of time and energy, and even the most powerful GPU will spend a lot of time waiting for data.

ReRAM offers two answers for that issue:

- As described above, ReRAM could replace or complement existing RAM in AI clusters to provide more data closer to where the AI computation occurs.

- On top of that, a new avenue of optimization is possible with ReRAM: in-memory computing.

AI model inference (when the model is used to generate an output in response to a user prompt) involves a lot of matrix-matrix or matrix-vector multiply-accumulate operations. From the multilayer perceptron, to the attention heads found in transformers (which are at the heart of most Large Language Models, like GPT or Llama), or even the State Space Models (SSM) found in recent Mamba architectures, all neural networks implement linear relationships between values (though non-linearities like activation layers are added in between linear layers). Matrix-matrix multiplication is the mathematical transcription of these linear relationships.

Because the resistance of a memristor changes depending on another variable (past current), a set of memristors tied together in a crossbar could encode the weights of a neural network by itself, whilst also performing the required computation. In that case, data wouldn’t have to leave the memory to be processed, leading to superior inference speed.

Matrix-matrix multiplications using memristors - the magic behind

Matrix-vector multiplication can be implemented using crossbar arrays of memristors as shown in the figure below.

Recall from linear algebra that:

- The matrix-vector multiplication consists of computing Y = A × X, where A is a matrix, X is a vector and thus Y is a vector. Each coordinate of Y is a sum of the coordinates of X weighted by the coefficients of A.

- For example, if X is a two-dimensional vector and A is a 2x2 matrix, then the first coordinate of Y, denoted y₁, is y₁ = a₁₁ × x₁ + a₁₂ × x₂, where x₁ and x₂ are the coordinates of X, and a₁₁ and a₁₂ are the coefficients of the first row of the matrix A.

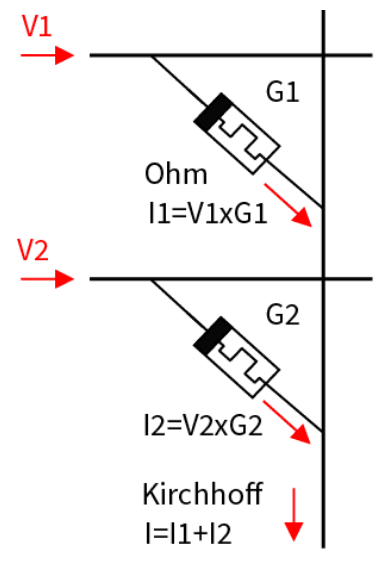

Also recall from electronics fundamentals that:

- The current through a memristor is equal to the voltage across its terminals times its conductance (called memductance for memristors), which is the inverse of the memristance (current = memductance x voltage ⇔ voltage = memristance x current).

- The total current in a wire is the sum of all currents entering that wire (think of it as multiple streams converging into a big river).

So, a practical analog implementation can be performed as follows:

Since,

- (a) the current through the memristor is equal to the voltage across its terminal times its conductance (Iₖ = Gₖ × Vₖ);

- (b) the total current is the sum of all currents (I = I₁ + I₂).

If,

- (c) the weights of the neural networks are encoded in the memristor’s conductance (G₁, G₂);

- (d) the tensor values are coded by the voltage applied to each memristor (V₁, V₂).

Then,

- the total current I is one coordinate of the desired matrix-vector product, i.e. a sum of the inputs weighted by the conductances.

- I = I₁ + I₂ = V₁ × G₁ + V₂ × G₂.
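The reasoning above can be mimicked numerically. The following is a minimal, idealized sketch: the function names `crossbar_currents` and `matvec_on_crossbar` are hypothetical, and it assumes perfect devices (no wire resistance, no noise, no conversion between digital and analog values). It also assumes non-negative weights, since a conductance cannot be negative; how a real design handles signed weights is not covered here.

```python
def crossbar_currents(conductances, voltages):
    """Output currents of an idealized memristor crossbar.

    conductances[k][j] is the conductance G (in siemens) of the memristor
    linking input row k to output column j; voltages[k] is the voltage
    applied to row k. Each column collects I_j = sum over k of G[k][j] * V[k].
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for g_row, v in zip(conductances, voltages):
        for j, g in enumerate(g_row):
            # (a) current through one memristor: I = G * V
            # (b) currents entering the same column wire add up
            currents[j] += g * v
    return currents


def matvec_on_crossbar(a, x):
    """Compute Y = A x X by (c) storing A as conductances and (d) X as voltages.

    Output column j must hold row j of A, so the conductance array is the
    transpose of A: G[k][j] = a[j][k].
    """
    g = [list(col) for col in zip(*a)]
    return crossbar_currents(g, x)
```

With two memristors on a single column, `crossbar_currents([[2.0], [3.0]], [1.0, 2.0])` reproduces I = V₁ × G₁ + V₂ × G₂. With a full 2x2 array, `matvec_on_crossbar` produces every coordinate of Y in the same step, which is what makes the crossbar attractive for in-memory computing.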

Also, memristors can be used directly to build neuromorphic circuits, thus allowing for analog neural networks.

Because of those advantages, ReRAM research is currently experiencing a revival. Hardware is on its way both for AI clusters, where the data density allows for large models, and for smaller “edge” and “on-device” AI accelerators, where the energy savings of in-memory computing are advantageous. High Performance Computing (HPC) may also benefit from this emerging technology since, like AI, it relies heavily on linear systems.

This shows in the current panel of companies working on ReRAM, which ranges from established players like HP, Samsung, Micron, SK Hynix and Rambus to newer companies like Crossbar, 4DS Memory and Weebit Nano. They offer very different product ranges, from ultra-low-power embedded systems with radiation hardening to high-density enterprise storage solutions and AI inference acceleration.