Terraform: how to init your infrastructure

If you want to quickly and easily set up a cloud infrastructure, one of the best ways to do it is to create a Terraform repository. Learn the basics to start your infrastructure on Terraform.

As your business grows, the network throughput of your infrastructure increases, and your servers’ response and processing time increases too, so providing optimal services for your clients/users becomes more and more challenging. This means the time has come for you to consider using a load balancer. This is a key product for any company wishing to scale and create the best user experience possible. In this article, you will discover how a load balancer actually works, how you can use it, and how your cloud infrastructure could benefit from it.

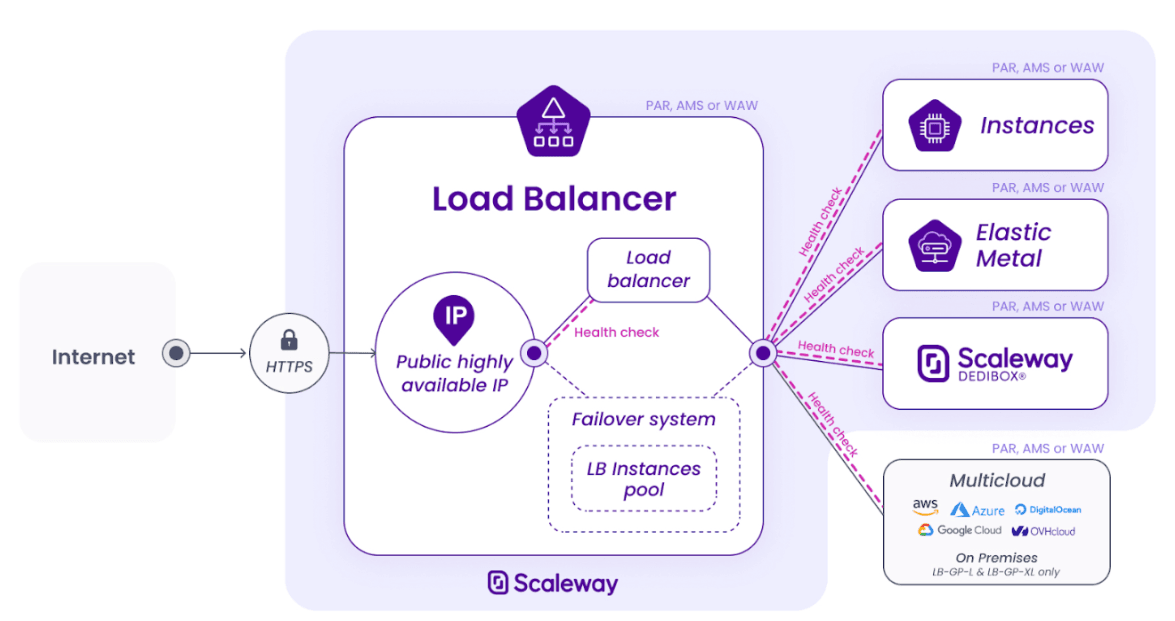

A load balancer is a network solution that distributes the network load across one or more servers, meaning it takes over a server’s network requests and directs the network packets towards a server according to one or more preset rules.

Several servers can therefore be hosted under the same IP address, which makes it possible to pool resources. A load balancer can be hosted either in the cloud or on-premises in a private data center. It directs traffic towards IP-carrying components, which can be Instances, Bare Metal servers or dedicated servers.

A load balancer acts as the entry point to your infrastructure: users request the URL of an application or website, which resolves to the load balancer’s IP address, and the request then reaches the destination server transparently.

Following certain preset rules, the load balancer then determines how to process the request. There are several possible rules:
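Two rules commonly found in load balancers are round-robin (each server in turn) and least-connections (the server with the fewest open connections). The sketch below is a minimal illustration of both, not any provider's implementation; the function names and IP addresses are hypothetical.

```python
from itertools import cycle
from typing import Dict, Iterator, List


def round_robin(backends: List[str]) -> Iterator[str]:
    """Yield backends one after the other, looping forever."""
    return cycle(backends)


def least_connections(active: Dict[str, int]) -> str:
    """Return the backend with the fewest open connections.

    On a tie, the backend inserted first in the dict wins.
    """
    return min(active, key=active.get)


# Round-robin: the fourth request wraps around to the first server.
rr = round_robin(["10.0.0.1", "10.0.0.2", "10.0.0.3"])
first_four = [next(rr) for _ in range(4)]

# Least-connections: the server with 3 open connections is chosen.
chosen = least_connections({"10.0.0.1": 12, "10.0.0.2": 3, "10.0.0.3": 7})
```

Round-robin suits backends of equal capacity serving similar requests, while least-connections tends to cope better when some requests stay open much longer than others.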

The main use for a load balancer is of course implementing rules to assign workloads, but this service offers many other features, which we will take a look at below.

A load balancer is a valuable tool, and has many other features to help improve security, resilience, or network performance.

You can check whether backends are operational and providing the requested service by using a load balancer. To do so, the load balancer sends a request to the backend server, which should respond. If it doesn’t, the load balancer labels the server as failed. The developer can then decide what action to take.
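As a rough sketch of the mechanism, the code below probes each backend once and labels it as passed or failed. It assumes a plain TCP connection check; real load balancers usually offer other check types and retry policies as well. The names `tcp_probe`, `run_health_checks` and `fake_probe` are hypothetical.

```python
import socket
from typing import Callable, Dict


def tcp_probe(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False


def run_health_checks(
    backends: Dict[str, int],
    probe: Callable[[str, int], bool] = tcp_probe,
) -> Dict[str, str]:
    """Probe every backend once and label it 'passed' or 'failed'."""
    return {
        host: "passed" if probe(host, port) else "failed"
        for host, port in backends.items()
    }


# Stand-in probe so the example runs without a network:
# it pretends that 10.0.0.2 does not respond.
def fake_probe(host: str, port: int) -> bool:
    return host != "10.0.0.2"


statuses = run_health_checks({"10.0.0.1": 80, "10.0.0.2": 80}, probe=fake_probe)
```

Passing the probe as a parameter keeps the labelling logic separate from the network call, which makes the check easy to swap or test.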

Example of API request:

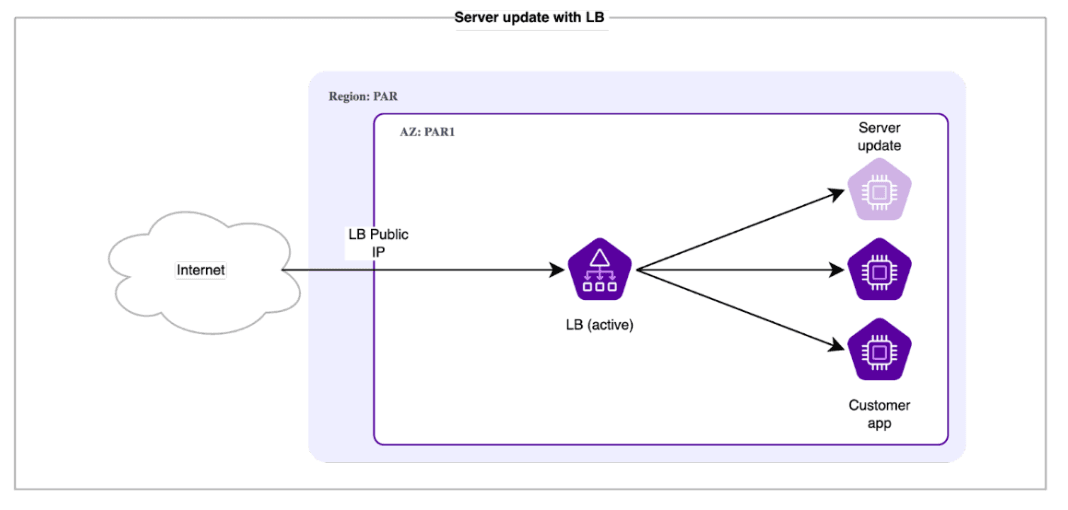

    GET /lb/v1/regions/{region}/lbs/{lb_id}/backend-stats

And its typical response:

    {
      "backend_servers_stats": [
        {
          "instance_id": "string",
          "backend_id": "string",
          "ip": "string",
          "server_state": "stopped",
          "server_state_changed_at": "string",
          "last_health_check_status": "unknown"
        }
      ],
      "total_count": 42
    }

Here, `server_state` is one of `stopped`, `starting`, `running` or `stopping`, and `last_health_check_status` is one of `unknown`, `neutral`, `failed`, `passed` or `condpass`.

As we mentioned earlier, a load balancer allows you to distribute your traffic across several servers offering the same service. When a server in the backend of a load balancer doesn’t respond, the load balancer designates another to take over. Such resilience makes it possible to purposefully unplug a server without interrupting the service for users. It means you can perform software or security updates, upsize or downsize an Instance, or update part of the infrastructure without impacting the overall uptime of the service. With a load balancer, you can decide when to perform your updates.
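To make this concrete, the sketch below reads a payload shaped like the backend stats response and checks whether a server can be unplugged without leaving the service with no healthy backend. The sample values are made up, the helper names are hypothetical, and treating only `running` servers with a `passed` check as healthy is an assumption.

```python
import json
from typing import List

# Made-up payload that follows the shape of the backend stats response.
sample = json.loads("""
{
  "backend_servers_stats": [
    {"ip": "10.0.0.1", "server_state": "running", "last_health_check_status": "passed"},
    {"ip": "10.0.0.2", "server_state": "stopped", "last_health_check_status": "failed"},
    {"ip": "10.0.0.3", "server_state": "running", "last_health_check_status": "passed"}
  ]
}
""")


def healthy_ips(stats: dict) -> List[str]:
    """Return the IPs of servers that are running and passed their last check."""
    return [
        server["ip"]
        for server in stats["backend_servers_stats"]
        if server["server_state"] == "running"
        and server["last_health_check_status"] == "passed"
    ]


def safe_to_unplug(stats: dict, ip: str) -> bool:
    """True if at least one other healthy server remains once `ip` is removed."""
    return any(other != ip for other in healthy_ips(stats))
```

With the sample above, `safe_to_unplug(sample, "10.0.0.1")` is true because 10.0.0.3 can take over.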

Your application or website is receiving many visitors. Now that you’ve reached the production phase, and your business is going well, you are receiving a lot of connection requests. Some of them are coming from your clients and are legitimate, but others you’d gladly do without, such as scraping bots, foreign competitors, or even intruders.

To avoid this, you can set up ACL (Access Control List) rules to ban certain IPs or only authorize certain connections, in order to improve your security.
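As an illustration of how a deny rule based on IP subnets could be evaluated, here is a minimal sketch using Python's standard `ipaddress` module. The function name `is_denied` and the addresses are hypothetical, and the way a real load balancer orders and combines its rules is not modelled.

```python
import ipaddress
from typing import List


def is_denied(client_ip: str, denied_subnets: List[str]) -> bool:
    """Return True if client_ip falls inside any of the denied subnets.

    A bare address such as "192.168.1.1" is treated as a single-host subnet.
    """
    addr = ipaddress.ip_address(client_ip)
    return any(
        addr in ipaddress.ip_network(subnet, strict=False)
        for subnet in denied_subnets
    )


# One single address and one /24 range (documentation-only addresses).
deny_list = ["192.168.1.1", "203.0.113.0/24"]
```

Here `is_denied("203.0.113.45", deny_list)` is true because the address belongs to the denied /24 range, while an address outside both entries is let through.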

Example of an ACL rule:

    {
      "name": "string",
      "action": {
        "type": "deny"
      },
      "match": {
        "ip_subnet": ["192.168.1.1"],
        "http_filter": "acl_http_filter_none",
        "http_filter_value": ["string"],
        "http_filter_option": "string",
        "invert": "boolean"
      },
      "index": 42
    }

As your infrastructure’s main entry point, a load balancer can carry the TLS/SSL encryption to secure the connection between the user’s browser, the website, and the backend server.

This protects the privacy and security of the requests between the user and the load balancer.

There are several ways to manage a TLS/SSL certificate with a load balancer, such as:

With SSL passthrough, the load balancer doesn’t decrypt the connection, but transfers it directly to the server, which handles the certificate itself. Encryption adds a slight overhead to network traffic, but this is the simplest way to manage HTTPS. It’s worth noting that SSL passthrough doesn’t allow Layer 7-type actions, such as carrying cookies or activating a sticky session.

SSL offloading means that the load balancer terminates the TLS connection: it validates the certificate on the inbound request, decrypts it, and transfers it to the server without re-encrypting it. Because the backend servers no longer have to handle encryption, the advantages are improved network performance and faster request processing.

With SSL bridging, the load balancer validates a certificate and decrypts the request upon receiving it, then re-encrypts the traffic with a second certificate for the connection between the load balancer and the backend. This technique maximizes request and network security between the different components.
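The three approaches differ in where traffic is decrypted and which hops stay encrypted. The small model below summarizes this, assuming the usual definitions of passthrough, offloading and bridging; the class and field names are hypothetical and do not correspond to any provider's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TlsMode:
    name: str
    lb_decrypts: bool        # does the load balancer see the plaintext request?
    backend_encrypted: bool  # is the load balancer -> backend hop encrypted?

    @property
    def layer7_features(self) -> bool:
        """Cookie-based features need the load balancer to read the HTTP request."""
        return self.lb_decrypts


PASSTHROUGH = TlsMode("passthrough", lb_decrypts=False, backend_encrypted=True)
OFFLOADING = TlsMode("offloading", lb_decrypts=True, backend_encrypted=False)
BRIDGING = TlsMode("bridging", lb_decrypts=True, backend_encrypted=True)

# Modes in which cookies and cookie-based sticky sessions are possible.
layer7_capable = [m.name for m in (PASSTHROUGH, OFFLOADING, BRIDGING) if m.layer7_features]
```

Read this way, the choice is a trade-off: passthrough keeps the load balancer simple, offloading relieves the backends, and bridging keeps every hop encrypted at the cost of encrypting twice.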

Because the load balancer is the only access point to the other Instances, it becomes the single point of failure of the architecture: should the load balancer fail, the infrastructure becomes unavailable. For this reason, it is important for a load balancer to have a redundant, high-availability architecture.

Some providers offer a load balancer paired with a second Instance, which can take over whenever needed. Others offer regionalized load balancers, meaning that they are supported by one of the three Availability Zones (AZs) in a provider’s region.

To ensure high availability, you can also guard against the total failure of all redundancy measures, for example by redirecting the traffic to a static website hosted on our Object Storage solution, so that your users don’t end up with a 404 error.
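The fallback logic itself is simple, as the sketch below suggests: route to a healthy backend when one exists, and to the static site otherwise. The URL and the function name are hypothetical placeholders.

```python
from typing import List

# Hypothetical static page, for example one served from an object storage bucket.
FALLBACK_URL = "https://example.com/maintenance.html"


def pick_target(healthy_backends: List[str], fallback: str = FALLBACK_URL) -> str:
    """Return the first healthy backend, or the static fallback if none is left."""
    return healthy_backends[0] if healthy_backends else fallback
```

Calling `pick_target([])` returns the fallback URL, so users see a static page rather than an error when every backend is down.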

All of these features allow you to benefit from the main advantages of using a load balancer: increased resilience and redundancy with backends and Health Checks, improved network performance with certificate management, and stronger security through access rules.

Any company wishing to build a future-proof and scalable business in the cloud needs to start using a load balancer in order to protect their infrastructure as it grows and to make the most of what the cloud has to offer.

As a multi-cloud service provider, Scaleway offers a Load Balancer as part of its portfolio. It is hosted on a high-availability infrastructure and offers up to 4 Gbps of bandwidth as well as unlimited backends to help you scale your infrastructure seamlessly.

Scaleway allows you to open connections with backends from external infrastructure in order to help startups and businesses build a multi-cloud architecture.

Log in directly to the Scaleway console to start using Load Balancer today.

