Cloud Solutions for AI

Discover European sovereign cloud services to scale your artificial intelligence projects from A to Z.

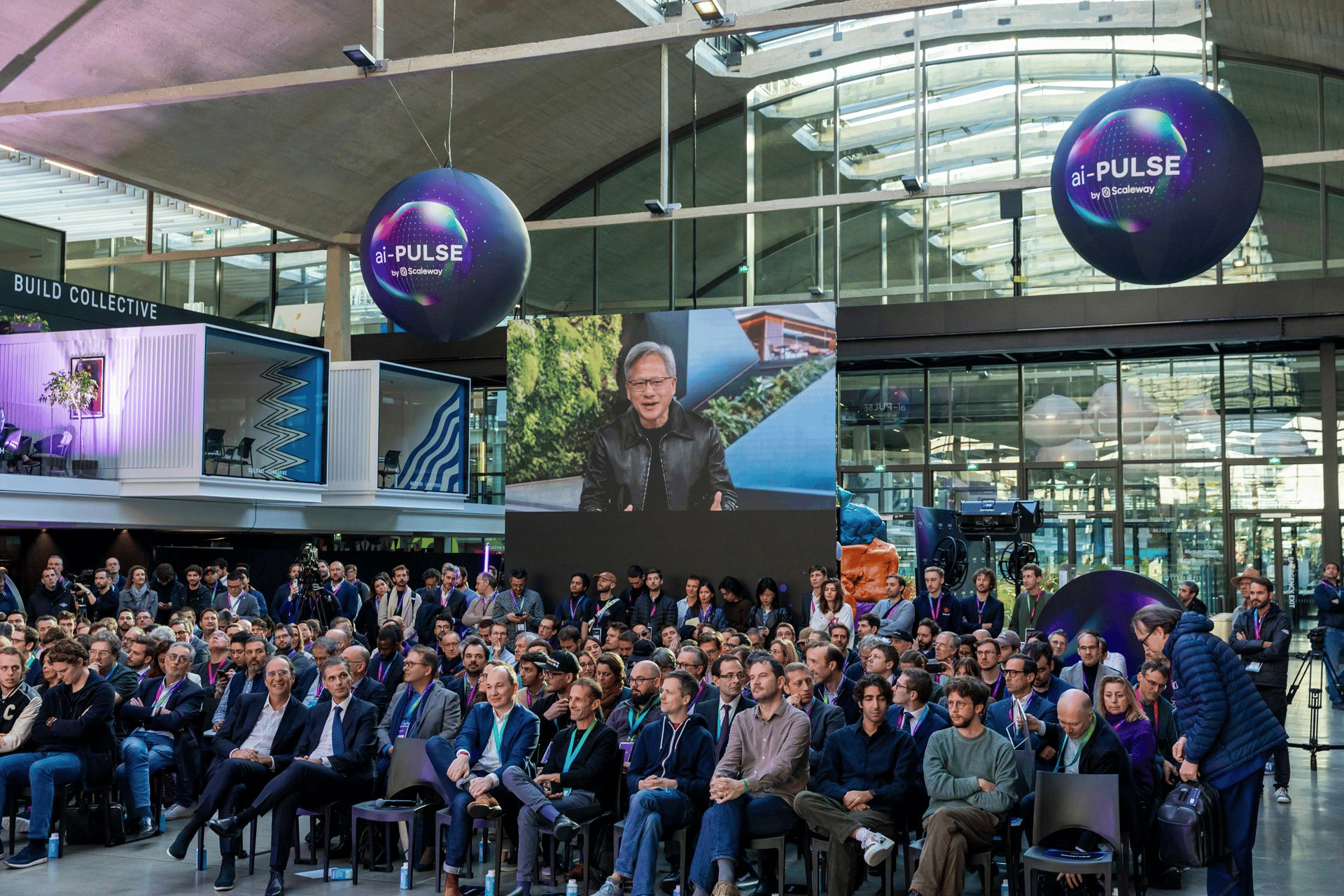

ai-PULSE 2024: The 2nd annual event for AI innovators and tech leaders

Pre-register now!

Exploring AI solutions that go beyond the hype? ai-PULSE 2024 is the event that will reshape how we think about AI, focusing on real-world impact and innovation. Here’s what you’ll dive into:

- Big Data & Big Models

- Light Models & Sustainable AI

- Open Source & AI Sovereignty

Scaleway isn’t just keeping up with AI trends—we’re defining them. Pre-register now and be part of the change!

Build high-performing, AI-enabled solutions for your business

Unlocking the potential of artificial intelligence and securing your place as a pioneer in your industry means building an application that integrates AI and is tailored to the specific challenges of your business.

This requires data engineers, data scientists, Machine Learning engineers, data governance managers and business stakeholders to work hand in hand to complete the Machine Learning lifecycle and ensure the right data lifecycle.

Prepare your data environment

Transfer your data with Block Storage

A flexible and reliable storage solution for demanding workloads.

Gather your data in Object Storage

Store large amounts of unstructured data and distribute it instantly.

Build managed Databases

Managed PostgreSQL and MySQL databases can scale up to 10 TB in seconds!

Create, train & fine-tune your model

Launch a dedicated AI Supercomputer

Powered by NVIDIA DGX H100 systems, the AI supercomputers deliver unmatched speed for AI training, equipped with cutting-edge technology, including NVIDIA H100 Tensor Core GPUs, NVLink, and Quantum-2 InfiniBand network.

- Nabu 2023, perfect for training LLMs and building the next generative AI models, with its 1,016 NVIDIA H100 Tensor Core GPUs

- Jero 2023, designed to train less complex models, with its 16 NVIDIA H100 Tensor Core GPUs
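The GPU counts above can be cross-checked against node counts. The following is a minimal sketch, assuming each DGX H100 system holds 8 H100 GPUs (the standard configuration) and using the 127 DGX nodes mentioned for Nabu 2023 elsewhere on this page. The 2-node figure for Jero 2023 is derived from that assumption, not stated by the source.

```python
# Assumption: each NVIDIA DGX H100 system holds 8 H100 GPUs (standard configuration).
GPUS_PER_DGX_H100 = 8


def total_gpus(dgx_nodes: int) -> int:
    """Return the GPU count of a cluster made of identical DGX H100 nodes."""
    return dgx_nodes * GPUS_PER_DGX_H100


def nodes_for(gpus: int) -> int:
    """Return how many full DGX H100 nodes a given GPU count corresponds to."""
    if gpus % GPUS_PER_DGX_H100 != 0:
        raise ValueError("GPU count is not a whole number of DGX nodes")
    return gpus // GPUS_PER_DGX_H100


print(total_gpus(127))  # Nabu 2023: 127 nodes
print(nodes_for(16))    # Jero 2023: 16 GPUs
```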

Leverage the right-sized GPU instance

To answer your needs and respect your budget at the same time, we offer a complete and diversified GPU portfolio:

- H100 PCIe GPU Instance for up to 1,513 TFLOPS (FP16)

- L40S GPU Instance for up to 362 TFLOPS (FP16)

- L4 GPU Instances for up to 242 TFLOPS (FP16)

- Render GPU Instance for up to 19 TFLOPS (FP16)

Scale your infrastructure with Containers

Manage your infrastructure according to your needs, with Kubernetes and Containers solutions, fully integrated into our ecosystem.

Deploy and manage containers quicker thanks to transferable data and backups stored in persistent Block Storage volumes.

Deploy AI-enabled solutions into production

Managed Inference

Public Beta in July 2024

Benefit from a secure European cloud ecosystem for serving curated LLMs on dedicated GPU infrastructure, paid by the hour.

Generative APIs

Beta in 2024

Get an alternative to OpenAI with pre-configured serverless endpoints for the most popular AI models, hosted in European data centers and priced per 1M tokens used.
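The two billing models (by the hour for Managed Inference, per 1M tokens for Generative APIs) can be compared with a simple break-even calculation. This is a rough sketch only: the function names are illustrative, and every price and the 730-hour month below are hypothetical placeholders, not Scaleway's actual rates.

```python
# All prices used here are hypothetical placeholders, not actual Scaleway rates.
HOURS_PER_MONTH = 730  # assumption: average number of hours in a month


def per_token_cost(tokens: int, price_per_million: float) -> float:
    """Cost of a serverless endpoint billed per 1M tokens."""
    return tokens / 1_000_000 * price_per_million


def hourly_cost(hours: float, price_per_hour: float) -> float:
    """Cost of a dedicated deployment billed by the hour."""
    return hours * price_per_hour


def break_even_tokens(hours: float, price_per_hour: float,
                      price_per_million: float) -> float:
    """Token volume at which both billing models cost the same."""
    return hourly_cost(hours, price_per_hour) / price_per_million * 1_000_000


# Hypothetical example: 3.00 per hour vs 0.50 per 1M tokens over one month.
threshold = break_even_tokens(HOURS_PER_MONTH, 3.0, 0.5)
print(f"Break-even volume: {threshold:,.0f} tokens per month")
```

Below the break-even volume, per-token billing is the cheaper option in this model; above it, a dedicated deployment costs less, provided it can actually serve that volume.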

Leaders of the AI industry are using Scaleway

Mistral AI

"We're currently working on Scaleway SuperPod, which is performing exceptionally well," said Arthur Mensch on the Master Stage at ai-PULSE 2023, where he talked about how Mistral 7B is available on large hyperscalers like Scaleway and how businesses are using it to replace their current APIs.

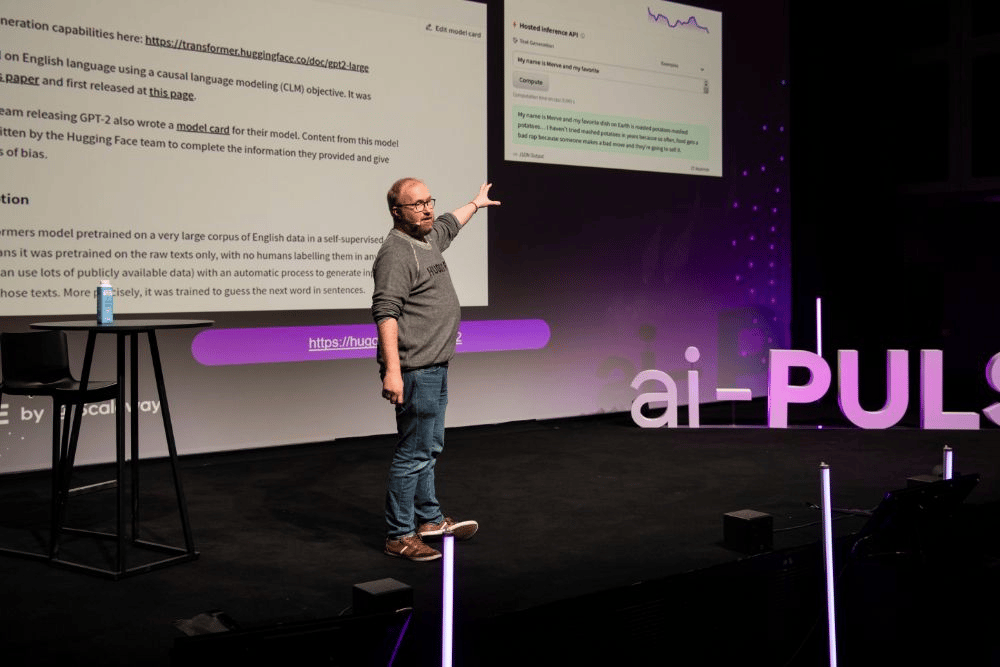

Hugging Face

“We've benchmarked a bit of Nabu 2023, Scaleway AI Supercomputer and achieved very appropriate results compared to other CSPs. Results that could be greatly improved with a bit more tuning! We can't wait to benchmark the full 127 DGX nodes and see what performance we achieve.” Guillaume Salou, ML Infra Lead at Hugging Face

Why Scaleway?

Minimize your carbon footprint

Scaleway’s GPU products are initially available in DC5 (par2). With a PUE (Power Usage Effectiveness) of 1.15 (the average is usually 1.6), this data center saves between 30% and 50% electricity compared to a conventional data center.
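PUE is the ratio of a facility's total energy use to the energy used by its IT equipment, so for the same IT load the relative saving between two sites depends only on their PUE values. The sketch below is a simplified model with illustrative function names, not Scaleway's own methodology. In this model, a 1.15 site saves about 28% against a 1.6 baseline, and the 30% to 50% range corresponds to baseline PUEs of roughly 1.64 to 2.3.

```python
def facility_energy(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy for a given IT load, from the PUE definition."""
    return it_energy_kwh * pue


def savings_vs_baseline(pue: float, baseline_pue: float) -> float:
    """Fraction of total energy saved relative to a baseline site, same IT load."""
    return 1 - pue / baseline_pue


def baseline_for_savings(pue: float, savings: float) -> float:
    """Baseline PUE that a given savings fraction implies."""
    return pue / (1 - savings)


DC5_PUE = 1.15      # figure quoted in the text
AVERAGE_PUE = 1.6   # "usual average" quoted in the text

print(f"Saving vs average site: {savings_vs_baseline(DC5_PUE, AVERAGE_PUE):.0%}")
print(f"Baseline PUE for 30% saving: {baseline_for_savings(DC5_PUE, 0.30):.2f}")
print(f"Baseline PUE for 50% saving: {baseline_for_savings(DC5_PUE, 0.50):.2f}")
```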

Keep sensitive data in Europe

As a leading European cloud service provider, Scaleway stores all its data in Europe, which means it is not subject to any extraterritorial legislation and is fully compliant with the principles of the GDPR.

Data sovereignty, transparency and traceability are guaranteed when using our products.

Benefit from a complete Cloud Ecosystem

Scaleway offers a complete cloud ecosystem, spanning from robust IaaS products to adaptable storage solutions and comprehensive managed services. This versatility enables you to build a resilient and dynamic infrastructure that not only supports your entire Machine Learning lifecycle but also caters to a diverse range of developer needs.