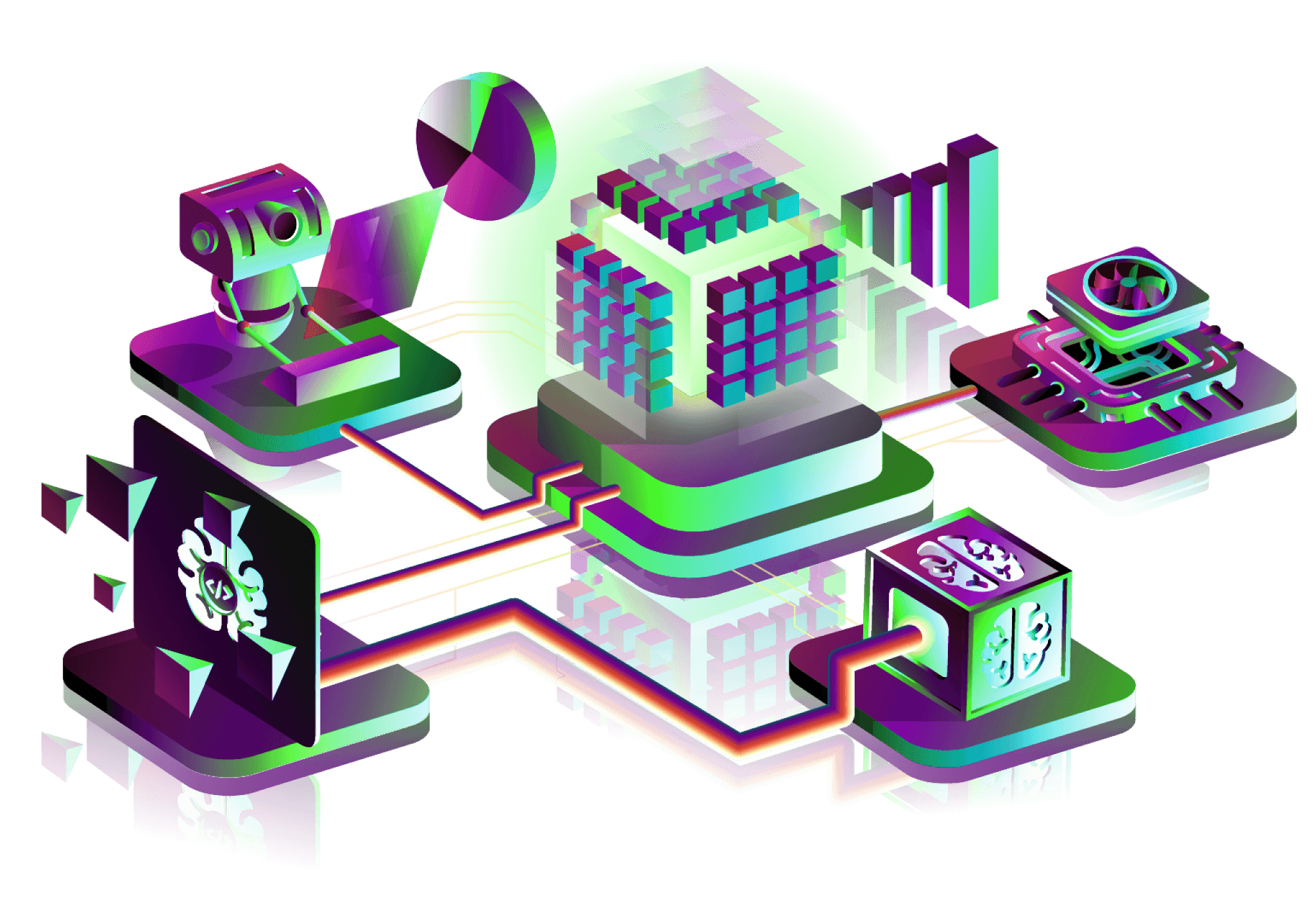

Current voice dialogue systems rely on chains of independent components (voice activity detection, speech recognition, text processing, and voice synthesis). This results in several seconds of latency and the loss of non-linguistic information, such as emotions or non-verbal sounds. Additionally, these systems segment dialogues into turn-based interactions, overlooking interruptions or overlapping speech.

Kyutai's approach with Moshi aims to solve these issues by generating speech (both audio and text) directly from the user's voice, rather than first converting that voice into an intermediate text transcript.

The user's and the AI's voices are modeled separately, allowing for more natural and dynamic dialogues that can include interruptions and overlapping speech. The model predicts text first, before generating the corresponding sounds, which improves linguistic quality while enabling real-time speech recognition and synthesis. With a theoretical latency of 160 ms, Moshi is the first real-time, full-duplex voice language model.
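
As a rough illustration of where a figure like 160 ms can come from, the sketch below adds the duration of one audio frame to a one-frame delay between the text prediction and the audio. The 12.5 Hz frame rate and the one-frame delay are assumptions taken from Kyutai's technical report on Moshi, not from the text above, and the function names are hypothetical.

```python
# Minimal sketch of the latency arithmetic, under stated assumptions.
# ASSUMPTION: the audio codec emits 12.5 frames per second (80 ms per frame).
# ASSUMPTION: audio tokens are delayed by one frame relative to the text token.
FRAME_RATE_HZ = 12.5
ACOUSTIC_DELAY_FRAMES = 1


def frame_duration_ms(frame_rate_hz: float) -> float:
    """Duration of one audio frame in milliseconds."""
    return 1000.0 / frame_rate_hz


def theoretical_latency_ms(frame_rate_hz: float, delay_frames: int) -> float:
    """One frame to receive the input, plus the delay before audio is emitted."""
    return frame_duration_ms(frame_rate_hz) * (1 + delay_frames)


if __name__ == "__main__":
    print(frame_duration_ms(FRAME_RATE_HZ))  # 80.0
    print(theoretical_latency_ms(FRAME_RATE_HZ, ACOUSTIC_DELAY_FRAMES))  # 160.0
```

This covers only the theoretical lower bound; actual latency also depends on hardware and network conditions.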