Setting up SSL bridging, offloading or passthrough

This document explains the different ways your Load Balancer can be configured to handle encrypted traffic. The three main configurations are:

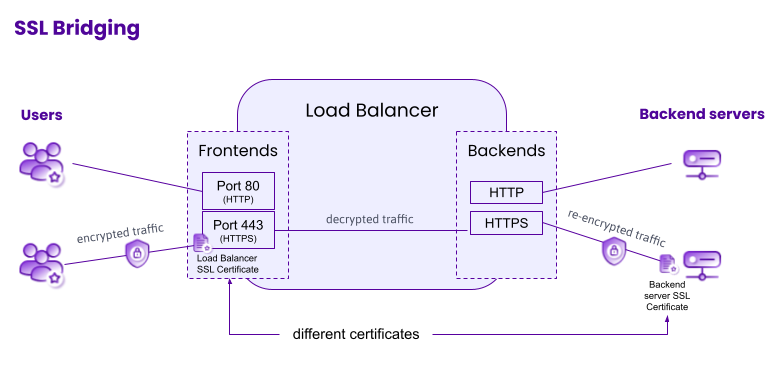

- SSL bridging: The Load Balancer decrypts incoming HTTPS traffic, and re-encrypts it when sending to the backend server.

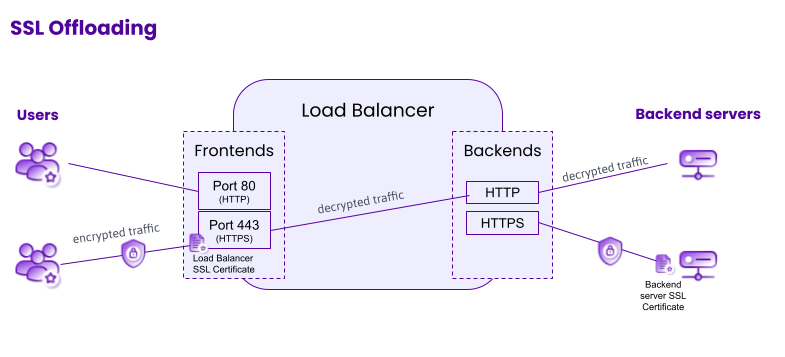

- SSL offloading (also known as SSL termination): The Load Balancer decrypts incoming HTTPS traffic, and sends it to the backend server unencrypted.

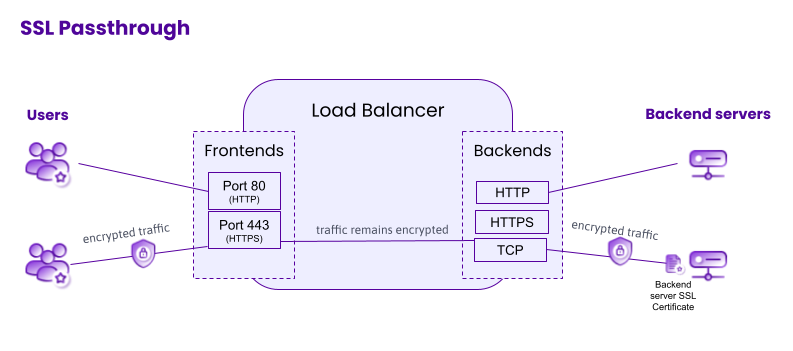

- SSL passthrough: The Load Balancer does not decrypt incoming HTTPS traffic, and sends it to the backend server 'as is'.

Read on to find out how to configure your Scaleway Load Balancer for any of these modes.

Configuring a Load Balancer for SSL bridging

With SSL bridging, the user establishes a secure, encrypted connection with the Load Balancer using the SSL certificate of the Load Balancer's frontend. The Load Balancer decrypts the incoming HTTPS traffic, which allows it to carry out layer 7 actions on that traffic. The Load Balancer's backend then opens a new encrypted connection to the backend server, so that traffic is re-encrypted between the Load Balancer and the backend server, this time using the backend server's certificate.

To configure your Load Balancer for SSL bridging:

- The frontend must have a certificate.

- The frontend must be linked to a backend which has TLS activated.

- The backend server should have its own certificate.

Configuring a Load Balancer for SSL offloading

With SSL offloading, also known as SSL termination, the user establishes a secure connection with the Load Balancer using the SSL certificate of the Load Balancer's frontend. The Load Balancer decrypts the incoming HTTPS traffic, so layer 7 actions may be applied to the traffic at this stage. Unlike with SSL bridging, traffic is not re-encrypted on its way from the Load Balancer to the backend server. Instead, offloaded traffic is marked with an additional header, X-Forwarded-Proto, which informs the backend server that the client used HTTPS to contact the Load Balancer.

To configure your Load Balancer for SSL offloading:

- The frontend must have a certificate.

- The frontend must be linked to a backend which uses the HTTP protocol.

- The backend server does not need its own certificate.

If you want to configure your Load Balancer for SSL offloading using the API, see our dedicated guide. If you have a Kubernetes Load Balancer configured for SSL offloading and are having SSL certificate issues, see our troubleshooting section.
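Because offloaded traffic reaches the backend server unencrypted, an application that needs to know whether the client originally used HTTPS has to read the X-Forwarded-Proto header described above. The following is a minimal sketch of how a Python WSGI application might do this. The function names, the fallback behavior and the port `8080` are illustrative assumptions and not part of any Scaleway tooling. It also assumes the backend server only accepts traffic from the Load Balancer, since a header like this could otherwise be set by any client.

```python
def original_scheme(environ):
    """Return the scheme ("http" or "https") the client used to reach the Load Balancer."""
    # WSGI exposes the X-Forwarded-Proto request header as HTTP_X_FORWARDED_PROTO.
    forwarded = environ.get("HTTP_X_FORWARDED_PROTO", "")
    # If several proxies appended values, keep the first (client-facing) one.
    proto = forwarded.split(",")[0].strip().lower()
    if proto in ("http", "https"):
        return proto
    # Header missing or unexpected: fall back to the scheme of the direct connection.
    return environ.get("wsgi.url_scheme", "http")


def app(environ, start_response):
    """Hypothetical application that reports the scheme seen by the Load Balancer."""
    scheme = original_scheme(environ)
    body = f"client scheme: {scheme}\n".encode("utf-8")
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    return [body]


if __name__ == "__main__":
    # Plain HTTP listener, as expected by an HTTP backend in an offloading setup.
    from wsgiref.simple_server import make_server

    with make_server("127.0.0.1", 8080, app) as httpd:
        httpd.serve_forever()
```

An application could use the value returned by `original_scheme` to, for example, build absolute URLs with the correct scheme even though its own listener only speaks HTTP.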

Configuring a Load Balancer for SSL passthrough

Passthrough is the simplest way to handle encrypted traffic on a Load Balancer. As the name suggests, traffic is simply passed through the Load Balancer without being decrypted on it. Although this option generates very low overhead, no layer 7 actions can be carried out, which means that cookie-based sticky sessions are not possible with this method. In addition, if an application does not share sessions between servers, a user's session may be lost when their requests are routed to a different server in the group.

To configure your Load Balancer for SSL passthrough:

- The frontend does not need a certificate and can listen on any port.

- The frontend must be linked to a backend which uses the TCP protocol, and the TLS toggle should be disabled in the backend configuration.

- The backend server's web server process must listen on the same port as the one configured for the backend.

- The backend server must have its own certificate.
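The three checklists in this document can be summarized as a small decision function. The sketch below is written in Python purely for illustration: the `LbSetup` class, its field names and the `ssl_mode` function are hypothetical and do not correspond to the Scaleway API or console. It is a simplification that only covers the combinations described in this document, and returns `None` for anything else.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LbSetup:
    """Hypothetical summary of the settings discussed in this document."""
    frontend_has_certificate: bool
    backend_protocol: str        # "http" or "tcp"
    backend_tls_enabled: bool


def ssl_mode(setup: LbSetup) -> Optional[str]:
    """Map a setup to "bridging", "offloading" or "passthrough", else None."""
    protocol = setup.backend_protocol.lower()

    if setup.frontend_has_certificate:
        # The Load Balancer decrypts traffic on the frontend.
        if setup.backend_tls_enabled:
            # Traffic is re-encrypted towards the backend server.
            return "bridging"
        if protocol == "http":
            # Traffic is sent to the backend server unencrypted.
            return "offloading"
        return None

    # No frontend certificate: the Load Balancer cannot decrypt anything.
    if protocol == "tcp" and not setup.backend_tls_enabled:
        # Encrypted traffic is forwarded 'as is' to the backend server.
        return "passthrough"
    return None
```

For example, `ssl_mode(LbSetup(True, "http", False))` corresponds to the offloading checklist, while `ssl_mode(LbSetup(False, "tcp", False))` corresponds to passthrough. Note that the function does not check whether the backend server itself has a certificate, which is still required for bridging and passthrough.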