Serverless Functions FAQ

Overview

What is serverless computing, and how does it differ from traditional cloud hosting?

Serverless computing is a cloud execution model where the cloud provider dynamically manages the allocation of compute resources. Unlike traditional hosting models, you do not need to provision, scale, or maintain servers. Instead, you focus solely on writing and deploying your code, and the infrastructure scales automatically to meet demand.

Why consider using Serverless Containers, Functions, or Jobs for my projects?

These services allow you to build highly scalable, event-driven, and pay-as-you-go solutions. Serverless Containers and Functions help you create applications and microservices without worrying about server management, while Serverless Jobs lets you run large-scale, parallel batch-processing tasks efficiently. This can lead to faster development cycles, reduced operational overhead, and cost savings.

What are the cost benefits of using serverless services like Serverless Containers and Serverless Functions?

With serverless, you only pay for the computing resources you use. There are no upfront provisioning costs or paying for idle capacity. When your application traffic is low, the cost scales down, and when traffic spikes, the platform automatically scales up, ensuring you never overpay for unused resources.

How do Serverless Functions namespaces and Container Registry namespaces interact?

Serverless Functions namespaces and Container Registry namespaces observe the following behaviors:

-

Creating a Serverless Functions namespace implicitly creates an empty Container Registry namespace. When a Serverless Function is deployed, the built image will be stored in the Container Registry namespace created.

-

Creating a Container Registry namespace does not create a Serverless Functions namespace.

-

Deploying a function in a Serverless Functions namespace creates an image in the corresponding Container Registry namespace.

-

If you delete the Container Registry namespace associated with a Serverless Functions namespace, it will be created again when deploying a function within this Serverless Functions namespace.

How does scaling work in these serverless services?

Scaling in Serverless Containers and Serverless Functions is handled automatically by the platform. When demand increases - more requests or events - the platform creates additional instances to handle the load. When demand decreases, instances that are not used anymore are removed. This ensures optimal performance without manual intervention.

How to migrate from Serverless Functions to Serverless Containers?

Serverless Functions offers several runtimes and versions, in some cases more control is required or specific dependencies needs some runtime tuning, for these cases please read How to migrate from Functions to Containers.

Offering and availability

What runtimes are available on Serverless Functions?

Serverless Functions enables you to deploy functions using popular languages: Go, Node, Python, PHP, and Rust.

Refer to our dedicated page about Serverless Functions Runtimes Lifecycle.

Which protocols are supported by Serverless Functions?

Serverless Functions support HTTP/1.1 and HTTP/2. Other protocols are not supported. Refer to the dedicated troubleshooting page for more information.

Specifications

How to select the right resources (vCPU/RAM) for Serverless Functions?

Insufficient vCPU and RAM resources can lead to functions going to error status. Make sure to provision enough resources for your function.

We recommend you set high values, use metrics to monitor the resource usage of your function, then adjust the value accordingly.

Pricing and billing

How am I billed for Serverless Functions?

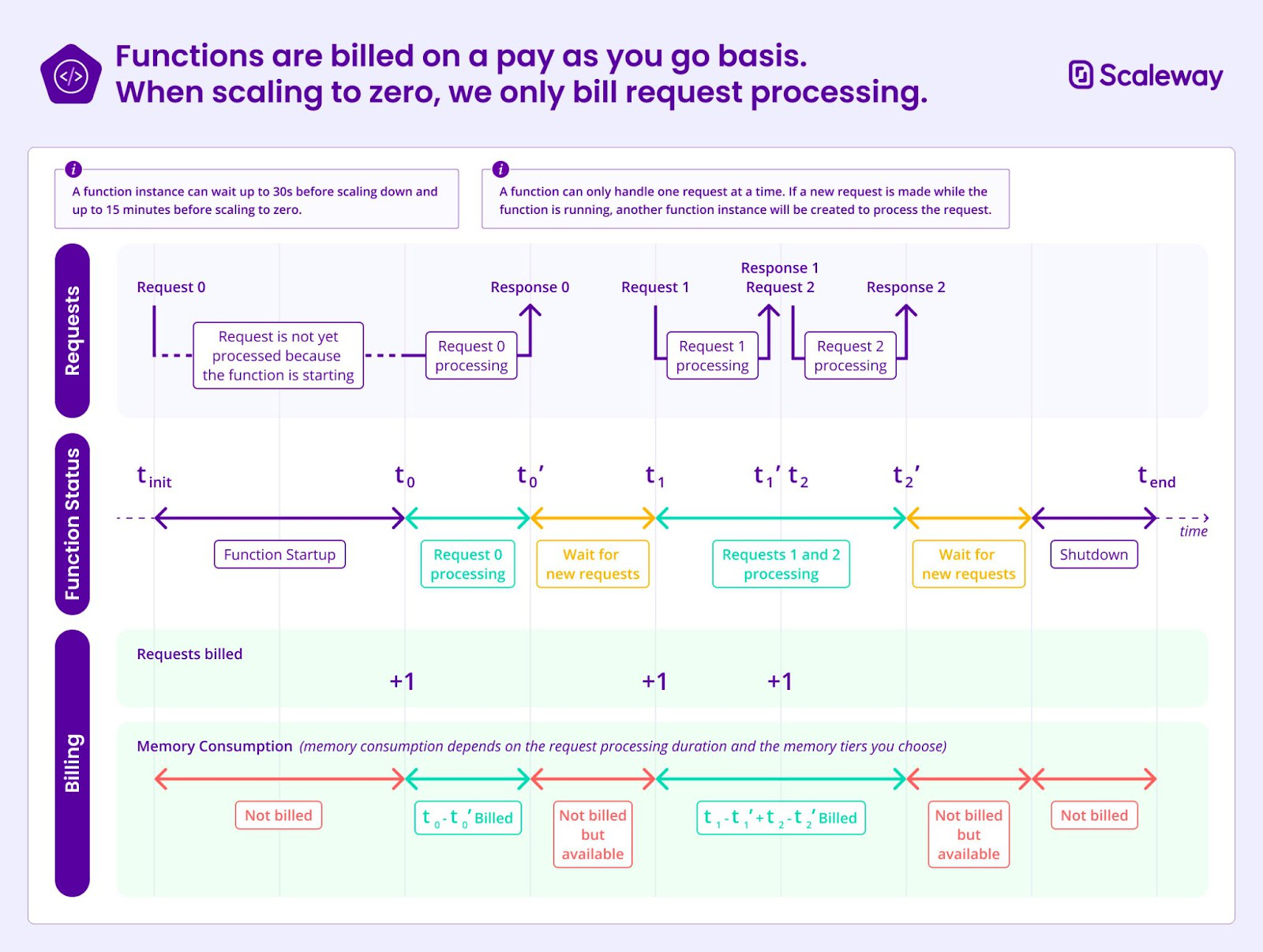

Principle

Serverless Functions is billed on a pay-as-you-go basis. Three components are taken into account:

-

Monthly request number: each time your function is invoked, we increase a counter.

-

Resource consumption: this component is obtained by multiplying the memory tiers chosen by the duration of each function invocation.

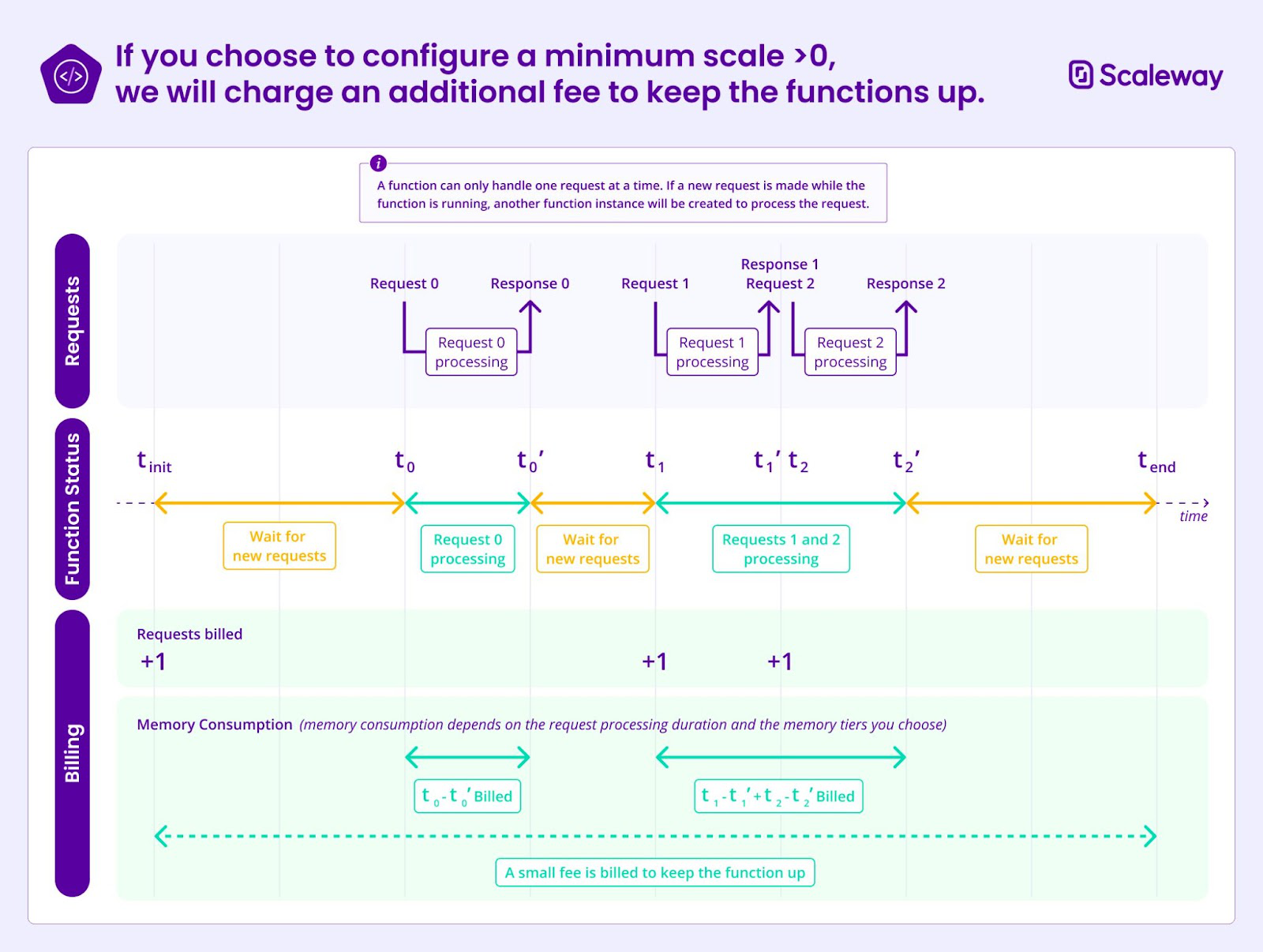

-

Resources provision: in order to mitigate cold start, users can choose to keep some function instances warm (by filing the minimum scale value). We then charge for the provisioned resources similarly to the Resources consumption component.

-

Ingress/Egress: Is free of charge.

The scheme below illustrates our billing model:

Pricing

-

Monthly requests: €0.15 per million requests, and we offer 1M free requests per account per month.

-

Resources consumption: €1.20 per 100 000 GB-s, and we provide 400 000 GB-s free tiers per account and per month.

| Memory provisioned | Cost per second |

|---|---|

| 128 MB | €0.0000015 |

| 256 MB | €0.0000030 |

| 512 MB | €0.0000060 |

| 1024 MB | €0.0000120 |

| 2048 MB | €0.0000240 |

| 3072 MB | €0.0000360 |

| 4096 MB | €0.0000480 |

- Resources provision: €0.36 per 100 000 GB-s

| Memory provisioned | Cost per second |

|---|---|

| 128 MB | €0.00000045 |

| 256 MB | €0.0000009 |

| 512 MB | €0.0000018 |

| 1024 MB | €0.0000036 |

| 2048 MB | €0.0000072 |

| 3072 MB | €0.0000108 |

| 4096 MB | €0.0000144 |

Examples

Example 1: Without resources provisioning

| Criteria | Value |

|---|---|

| Number of requests | 30 000 000 |

| Average request duration | 1 s |

| Allocated resources (memory) | 128 MB |

| Free tier | Yes |

| Provision/minimum instances | 0 |

-

Resources consumption

- Service usage duration: 30 000 000 requests x 1 s = 30 000 000 seconds used

- Memory conversion: 128 MB = 0.125 GB

- Resources consumed: 30 000 000 s x 0.125 GB = 3 750 000 GB-s

- Free tier: 400 000 GB-s

- Resourced billed: 3 750 000 - 400 000 = 3 350 000 GB-s

- Cost: 3 350 000 x €0.0000120 = €40.20

-

Requests:

- Free tier: 1 000 000 requests

- Billed requests: 30 000 000 - 1 000 000 = 29 000 000 requests

- Cost: 29 000 000 x €0.00000015 = €4.35

Total monthly cost: €44.55

Example 2: With resources provisioning

| Criteria | Value |

|---|---|

| Number of requests | 30 000 000 |

| Average request duration | 1 s |

| Allocated resources (memory) | 128 MB |

| Free tier | Yes |

| Provision/minimum instances | 1 |

-

Resources consumption

- Service usage duration: 30 000 000 Requests x 1 s = 30 000 000 seconds used

- Memory conversion: 128 MB = 0.125 GB

- Resources consumed: 30 000 000 s x 0.125 GB = 3 750 000 GB-s

- Free tier: 400 000 GB-s

- Resourced billed: 3 750 000 - 400 000 = 3 350 000 GB-s

- Cost: 3 350 000 x €0.0000120 = €40.20

-

Provisioned functions consumption:

- Provisioned duration: 1 month = 2 592 000 seconds, with one minimum instance → 2 592 000 seconds used

- Provisioned resources consumed: 2 592 000 x 0.125 GB = 324 000 GB-s

- Cost: 324 000 x €0.0000036 = €1.17

-

Requests:

- Free tier: 1 000 000 requests

- Billed requests: 30 000 000 - 1 000 000 = 29 000 000 requests

- Cost: 29 000 000 x €0.00000015 = €4.35

Total monthly cost: €45.72

Quotas and limitations

What are the limitations of Serverless Functions?

Refer to our dedicated page about Serverless Functions limitations and configuration restrictions for more information.

Compatibility and integration

How do I integrate my serverless solutions with other Scaleway services?

Integration is straightforward. Serverless Functions and Containers can be triggered by events from Queues and Topics and Events, and can easily communicate with services like Managed Databases or Serverless databases. Serverless Jobs can pull data from Object Storage, or output processed results into a database. With managed connectors, APIs, and built-in integrations, linking to the broader Scaleway ecosystem is seamless.

Can I use Serverless Functions with Edge Services?

You cannot use Serverless Functions with Edge Services because there are no native integrations between the two products yet.

Can I use the IP address of a Serverless Function?

By design, it is not possible to guarantee static IPs on Serverless Compute resources.

Usage and management

How can I deploy my functions?

There are several ways to deploy Serverless Functions, to accommodate a broad range of use cases.

Can I upgrade Serverless Function resources (vCPU and RAM) at any time?

Yes, Serverless Functions resources can be changed at any time without causing downtime. Refer to the next question for full details.

Does updating a Serverless Function cause downtime?

No, deploying a new version of your Serverless Function generates a rolling update. This means that a new version of the service is gradually rolled out to your users without downtime. Here is how it works:

- When a new version of your function is deployed, the platform automatically starts routing traffic to the new version incrementally, while still serving requests from the old version until the new one is fully deployed.

- Once the new version is successfully running, we gradually shift all traffic to it, ensuring zero downtime.

- The old version is decommissioned once the new version is fully serving traffic.

This process ensures a seamless update experience, minimizing user disruption during deployments. If needed, you can also manage traffic splitting between versions during the update process, allowing you to test new versions with a subset of traffic before fully migrating to them.

How can I test my functions locally?

Scaleway provides libraries to run your functions locally, for debugging, profiling, and testing purposes. Refer to the dedicated documentation for more information.

Where can I find some advanced code examples for functions?

Check out our serverless-examples repository for real world projects.

How to migrate runtimes?

On a Serverless Function, you can change the runtime if the new runtime is from the same family as the old one. Example: migrate from go1.23 to go1.24. To change programming language, you must create a new Serverless Function.

See the functions runtimes documentation for more information about runtimes.

How can I call my functions periodically?

Scaleway Serverless Functions natively support CRON triggers to call your functions periodically. This feature has many applications, such as scheduled data processing, maintenance tasks, monitoring, or reporting.

Periodic CRON triggers also allow you to maintain your functions active during specific time slots to reduce cold start latency, without having to provision a minimum of 1 vCPU at all times.

Refer to the dedicated documentation for more information on how to create CRON triggers for your functions.

To learn more about how CRONs work, refer to our CRON schedule reference documentation.

How can I configure access to a Private Network or Virtual Private Cloud (VPC)?

Scaleway Serverless Functions support Virtual Private Cloud (VPC) and can be attached to a Private Network. Refer to the dedicated documentation for more information.

How can I attach Block Storage to a Serverless Function?

Scaleway Serverless Functions do not currently support attaching Block Storage. These functions are designed to be stateless, meaning they do not retain data between invocations. For persistent storage, we recommend using external solutions like Scaleway Object Storage.

How can I store data in my Serverless resource?

Serverless resources are by default stateless, local storage is ephemeral.

For some use cases, such as saving analysis results, exporting data etc., it can be important to save data. Serverless resources can be connected to other resources from the Scaleway ecosystem for this purpose:

Databases

- Serverless Databases: Go full serverless and take the complexity out of PostgreSQL database operations.

- Managed MySQL / PostgreSQL: Ensure scalability of your infrastructure and storage with our new generation of Managed Databases designed to scale on-demand according to your needs.

- Managed Database for Redis®: Fully managed Redis® in seconds.

- Managed MongoDB®: Get the best of MongoDB® and Scaleway in one database.

Storage

- Object Storage: Multi-AZ resilient object storage service ensuring high availability for your data.

- Scaleway Glacier: Our outstanding Cold Storage class to secure long-term object storage. Ideal for deep archived data.

Can I whitelist the IPs of my Serverless Functions?

Due to the nature of serverless computing, Serverless Functions do not have dedicated or predictable IP addresses assigned to them. This means you cannot whitelist specific IPs for your Serverless Functions.

Instead, you need to allow all Scaleway IP address prefixes in your external services or firewalls. You can find the complete list of Scaleway IP prefixes at: Scaleway Peering Policies

Additionally, Scaleway Serverless Functions supports Virtual Private Cloud (VPC) and can be attached to a Private Network, which allows you to securely connect your resources in an isolated environment.

Security

More generally, what measures has Scaleway put in place to secure this service and ensure its availability?

As mentioned above, the primary security measure we implement is strong isolation between customer functions.

Regarding availability, there is always at least one team member responsible for the proper operation of the infrastructure from Monday to Friday, 9:00 AM to 6:30 PM. We also have trained SREs on call for part of the product’s infrastructure.

From a security standpoint, this service provides the ability to:

- Isolate functions from one another

- Protect private resources (IAM / JWT)

- Connect to a private network, if configured

- Securely store environment variables and secrets

- Secure request routing

- Provide logs and metrics to help customers diagnose issues

Are data stored on ephemeral storage encrypted at rest?

At the moment, we do not provide encryption at rest on the filesystem of customer functions. However, the underlying volumes comply with our security standards. For more details, please refer to our Security and resilience documentation.

Within customer functions:

- In sandbox v2, everything under

/tmpis stored in RAM. - Everything else is written to disk, but access is restricted to authorized personnel only. Please refer to the question Who are the authorized users?

Is there any antimalware or antivirus system in place for functions to secure the environment?

We do not use any antimalware or antivirus system to secure customer environments. In the future, the Scaleway registry will include an image vulnerability scanning system.

The environment is secured by the fact that each customer function runs in an isolated system. For isolation, we use Kata Containers and gVisor, corresponding to sandbox v1 and sandbox v2 respectively, which allow us to strongly isolate customer functions.

As a result, application security remains the responsibility of the customer.

Who are the authorized users (internal to Scaleway and possibly external) who can access applications running on serverless functions?

The only users with infrastructure-level access to customer functions are developers working within the team or on the team’s underlying infrastructure. Even though such access may be possible for debugging purposes, it will never be performed without the customer’s explicit authorization.

Support and troubleshooting

How to reduce cold-start of Serverless Functions?

-

Optimize the startup: Cold-start can be affected by a loading a large number of dependencies and opening lot of resources at startup. Ensure that your code avoids heavy computations or long-running initialization at startup and optimize the number of loaded libraries.

-

Keep your function warm: You can use CRON triggers at certain intervals to keep your function warm or set the min-scale parameter to one when required.

-

Increase resources: Adding more vCPU and RAM can help to significantly reduce the cold-starts of your function.

-

Use sandbox v2: We recommend using sandbox v2 (advanced settings) to reduce cold start.

How can I check build errors?

Some Serverless runtimes (ex: Go, Rust) will compile your code in order to make your function executable.

Compilation can fail if errors are present in the code, for example syntax errors and missing libraries.

Build errors are sent to the Observability platform on Scaleway Cockpit.

In the Serverless Functions Logs dashboard, you will then be able to read information about your log build outputs, if errors occurred during compilation.

Why does my function have an instance running after deployment, even with min-scale 0?

Currently, a new function instance will always start after each deployment, even if there is no traffic and the minimum scale is set to 0. This behavior is not configurable at this time.